Get Advanced Insights on Any Topic

Discover Trends 12+ Months Before Everyone Else

How We Find Trends Before They Take Off

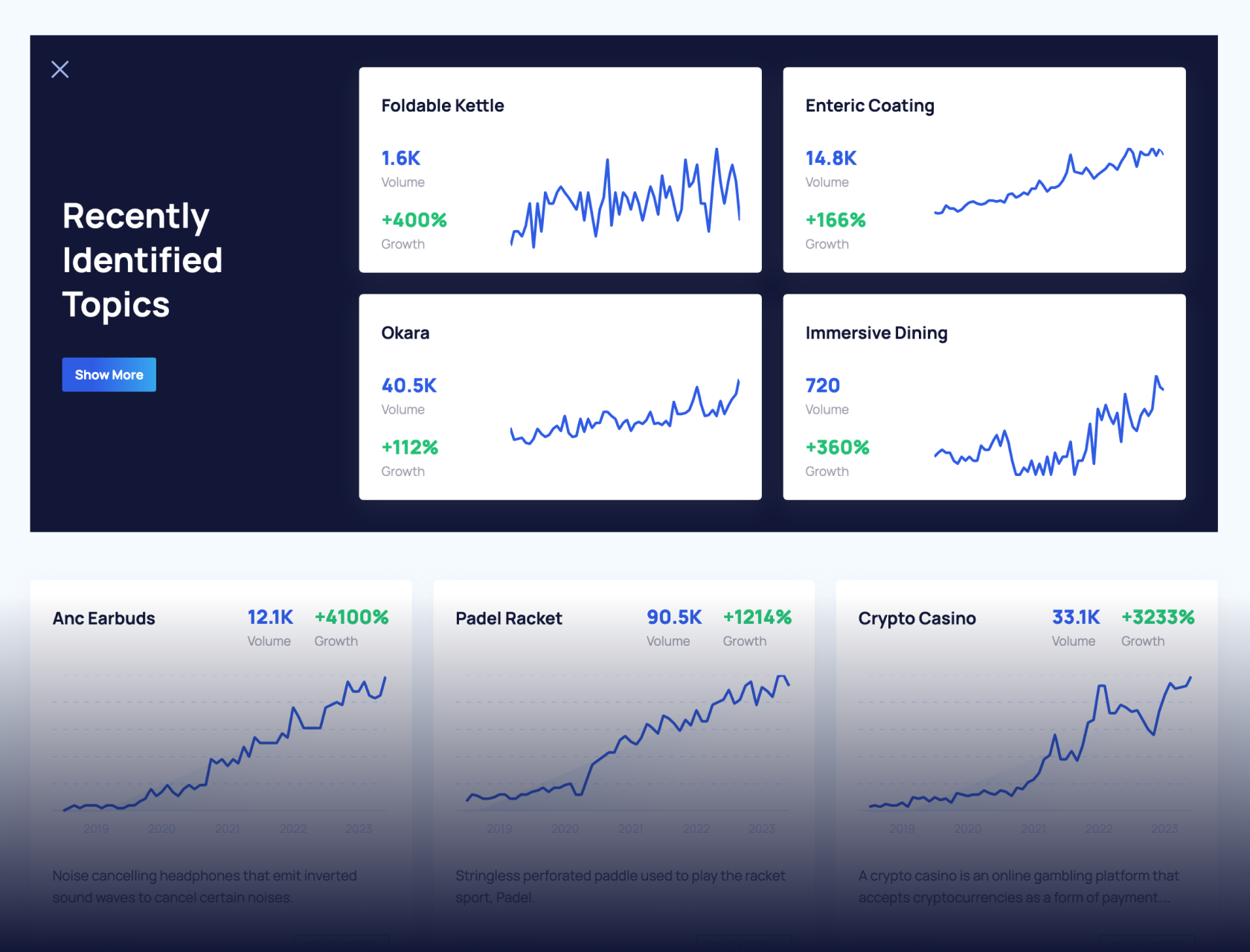

Exploding Topics’ advanced algorithm monitors millions of unstructured data points to spot trends early on.

Keyword Research

Performance Tracking

Competitor Intelligence

Fix Your Site’s SEO Issues in 30 Seconds

Find technical issues blocking search visibility. Get prioritized, actionable fixes in seconds.

Powered by data from

5 Key Big Data Trends (2024 & 2025)

3.5 quintillion bytes: that’s the amount of data created every day in 2023.

And that number is on the rise.

Organizations that can harness the power of big data have the opportunity to launch new business initiatives and jump ahead of the competition. But processing, storing, and analyzing big data presents an incredible challenge.

Read this list of five top data trends to see what companies are doing today to overcome these obstacles and put big data to work in accelerating revenue growth.

1. Stream Processing Enables Real-Time Big Data Insights

Big data streams constantly from IoT devices such as sensors and smart home devices, as well as from mobile devices, social media feeds, and similar sources.

Until recently, many businesses were unable to process these huge amounts of data in real time. The likely outcomes were faulty analysis, increased latency, and unused data.

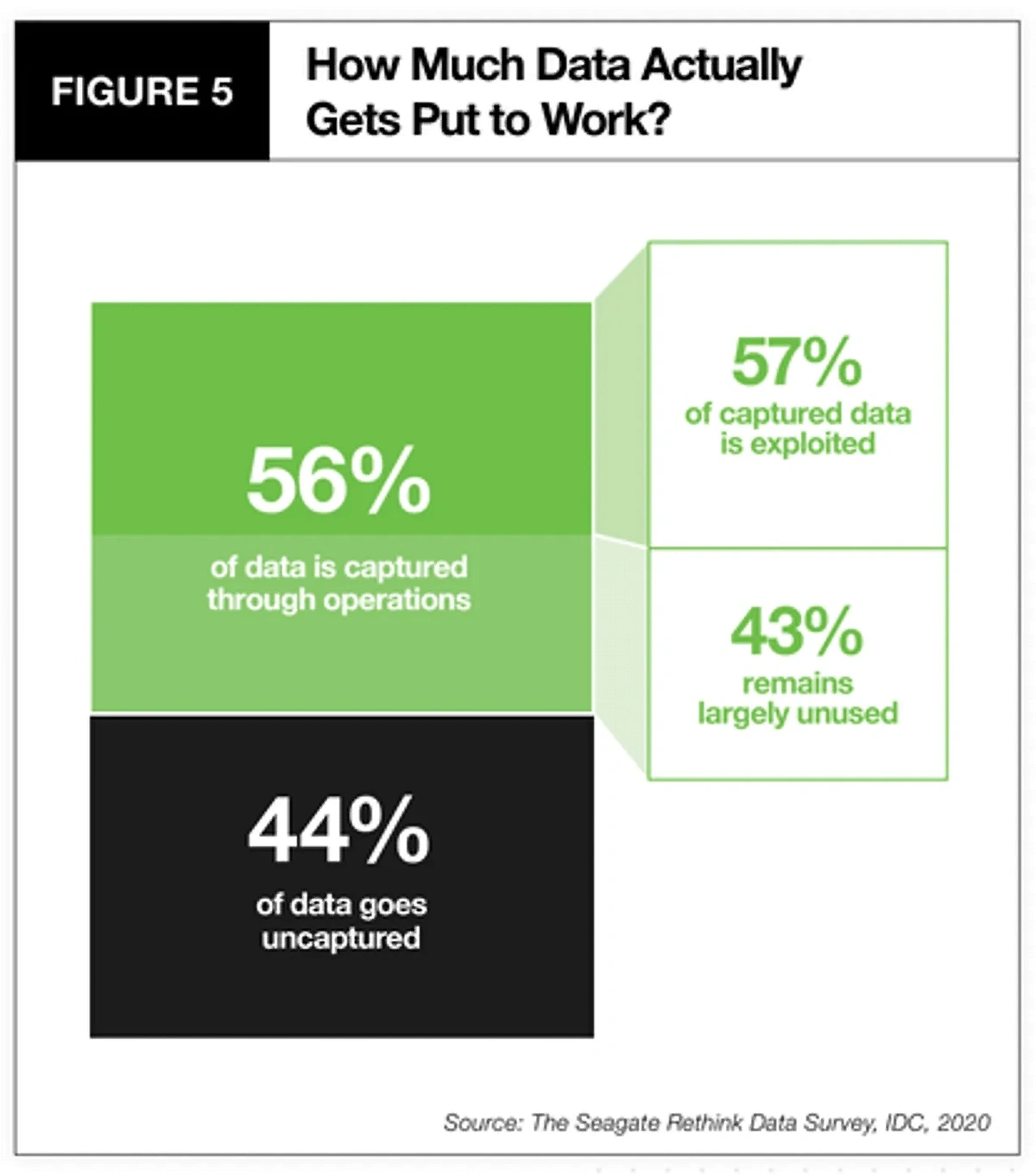

One survey found that businesses use only 57% of the data they collect.

The remaining 43% goes unleveraged, meaning more than 40% of organizations’ data sits dormant.

However, with new tech solutions, real-time stream processing is now possible.

This allows businesses to capture and analyze data right away instead of leaving it to be stored and later analyzed in batches.
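To make the batch-versus-stream distinction concrete, here is a minimal Python sketch. It assumes a hypothetical feed of `(timestamp, sensor_id, value)` events arriving in time order: `batch_totals` waits for all the data before aggregating, while `stream_window_totals` emits per-sensor totals for each 10-second tumbling window as soon as that window closes. The function names and event format are illustrative, not taken from any particular product.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

# Hypothetical event format: (timestamp in seconds, sensor ID, reading)
Event = Tuple[float, str, float]


def batch_totals(events: Iterable[Event]) -> Dict[str, float]:
    """Batch style: collect everything first, then aggregate once."""
    totals: Dict[str, float] = defaultdict(float)
    for _, sensor_id, value in events:
        totals[sensor_id] += value
    return dict(totals)


def stream_window_totals(
    events: Iterable[Event], window: float = 10.0
) -> Iterator[Tuple[float, Dict[str, float]]]:
    """Streaming style: emit per-sensor totals for each tumbling window
    as soon as an event from a later window shows the window is complete.

    Assumes events arrive in timestamp order (no late or out-of-order data).
    """
    current_start = None
    totals: Dict[str, float] = defaultdict(float)
    for ts, sensor_id, value in events:
        start = ts - (ts % window)  # start time of this event's window
        if current_start is None:
            current_start = start
        elif start != current_start:
            # A later window has begun, so the previous one is closed: emit it.
            yield current_start, dict(totals)
            totals = defaultdict(float)
            current_start = start
        totals[sensor_id] += value
    if current_start is not None:
        yield current_start, dict(totals)  # flush the last open window


events = [(1, "a", 2.0), (4, "b", 1.0), (12, "a", 3.0), (25, "a", 1.0)]
print(batch_totals(events))
for window_start, window_totals in stream_window_totals(events):
    print(window_start, window_totals)
```

Production engines such as Apache Flink also cope with late and out-of-order events (using watermarks), which this sketch deliberately ignores.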

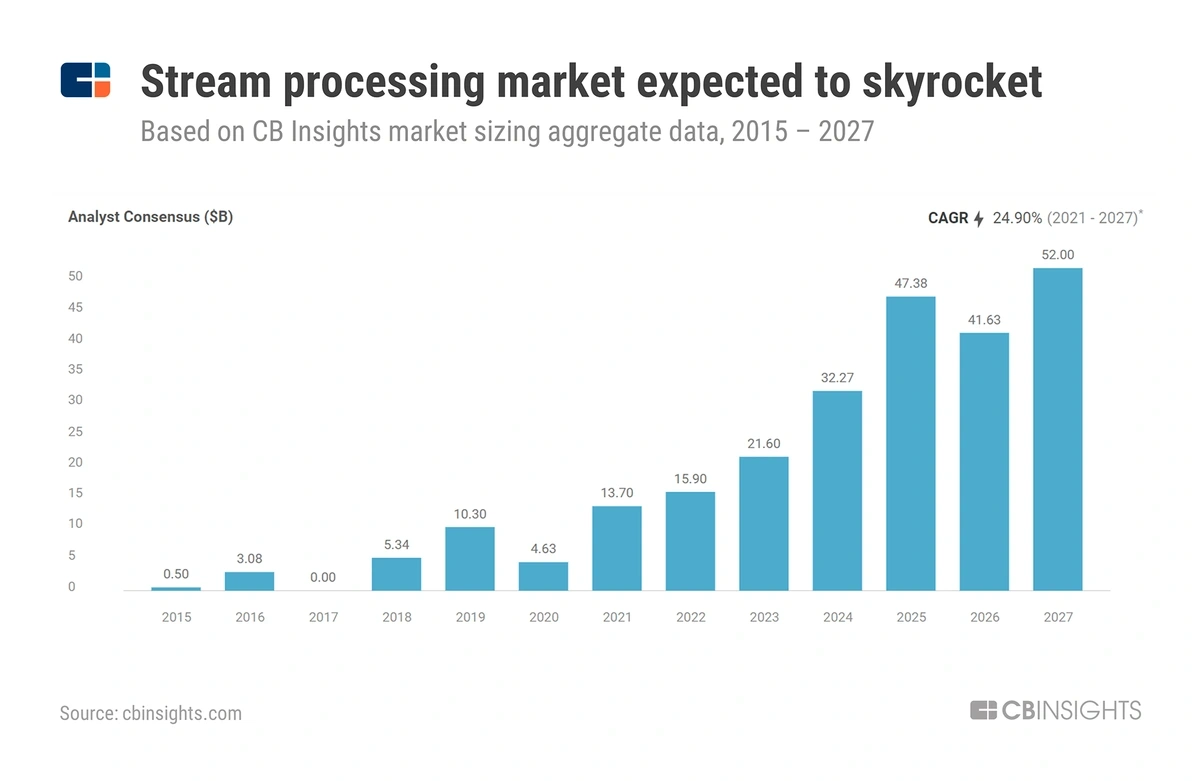

CB Insights predicts the stream processing market will be valued at $52 billion in 2027. Compared to 2024, that’s an increase of around $20 billion.

The stream processing market is expected to see tremendous growth in the next five years.
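The two figures in the CB Insights forecast imply an annual growth rate. Assuming the 2024 base is roughly $32 billion (that is, $52 billion minus the “around $20 billion” increase), the compound annual growth rate (CAGR) can be estimated as below. This is a back-of-the-envelope sketch, not a figure published by CB Insights.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Assumption: 2024 base of about $32B ($52B in 2027 minus the ~$20B increase).
rate = cagr(start_value=32.0, end_value=52.0, years=2027 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # prints: Implied CAGR: 17.6%
```

Because the increase is only “around” $20 billion, treat the result as an order-of-magnitude estimate.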

Gartner predicted that by 2022, more than half of new business systems would incorporate real-time data analytics, something it calls “continuous intelligence.”

The financial industry is one sector in which real-time big data insights are critical.

In one example, a bank was able to incorporate real-time data into customer interactions at ATMs.

Based on customer history, the ATM would offer a small line of credit to specific users. As a result, the bank increased its total loans by 400%.

One big data streaming solution is Apache Flink. The open-source solution can process live streams of data in mere milliseconds.

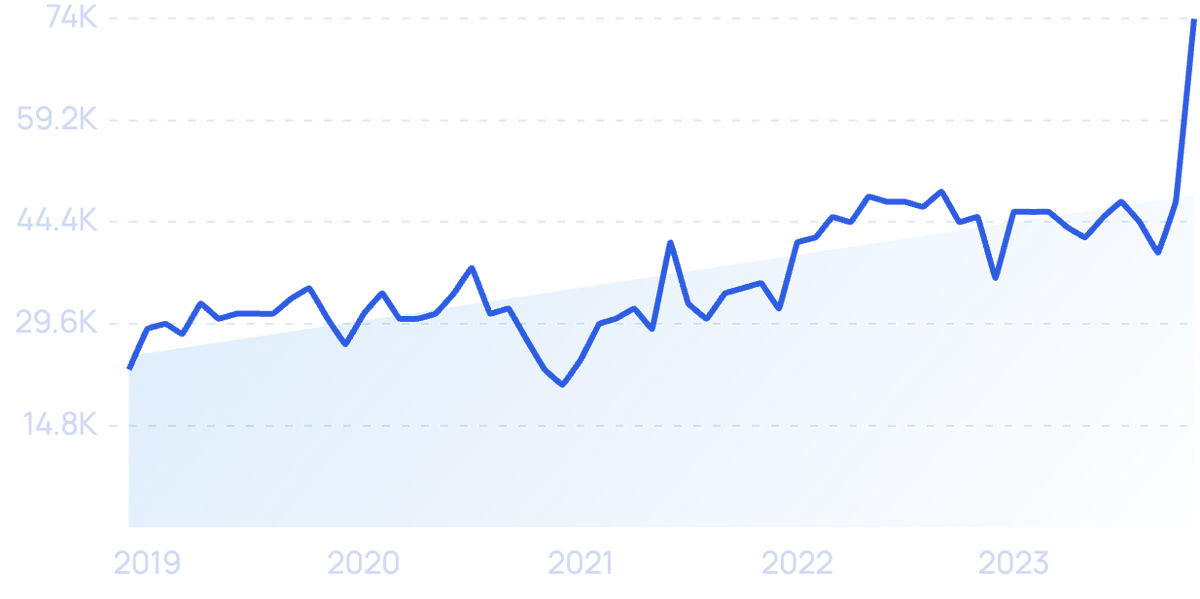

Search volume for “Apache Flink” is up 108% over 5 years.

Businesses are drawn to the high performance, fault tolerance, and low latency of the platform.

However, this solution is usually reserved for big corporations because it requires a great deal of expertise to run it.

Immerok, a startup founded just months ago, is making the technology available to SMBs.

Immerok’s leadership team includes seven Apache Flink experts.

Their solution is serverless, providing a fully managed version of Apache Flink that’s maintenance-free for customers.

The company recently wrapped up a $17 million seed funding round. They expect to hire 30 new employees to fuel growth in the coming year.

Confluent is another stream-processing vendor that’s seen tremendous growth recently.

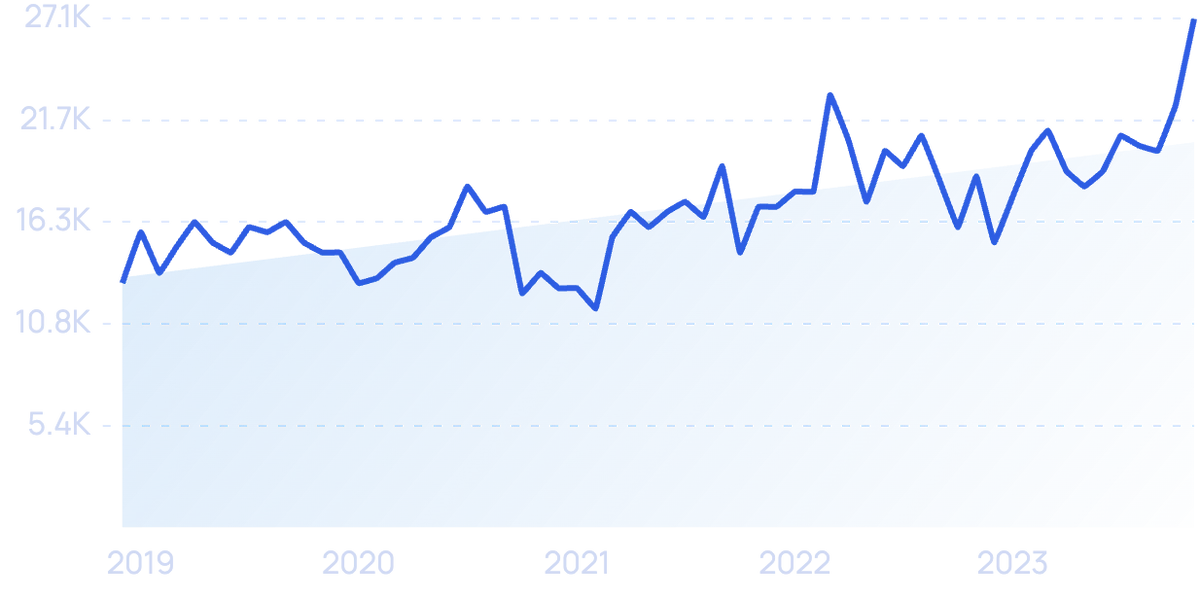

Search volume for “Confluent” shows an increase of 223% over 5 years.

In mid-2021, the company went public at a valuation of $9.1 billion.

They also reported 66% sales growth in 2021.

2. AI and ML Power Automation and Analytics

More than 60% of IT leaders say they’re planning to increase spending on artificial intelligence and machine learning (AI/ML) solutions.

Search volume for “automated machine learning” is up 116% in 5 years.

Organizations are using these types of solutions to analyze big data and create actionable insights faster than ever before.

In one example from the medical field, human researchers can spend between four and 24 hours analyzing a 30-minute video and watching for specific neuron activity.

When the video data is analyzed with a machine learning-based algorithm, the same process can be completed in less than 30 minutes.

Businesses are using AI/ML to automate big data processing, filtering, cleansing, analysis, and more.

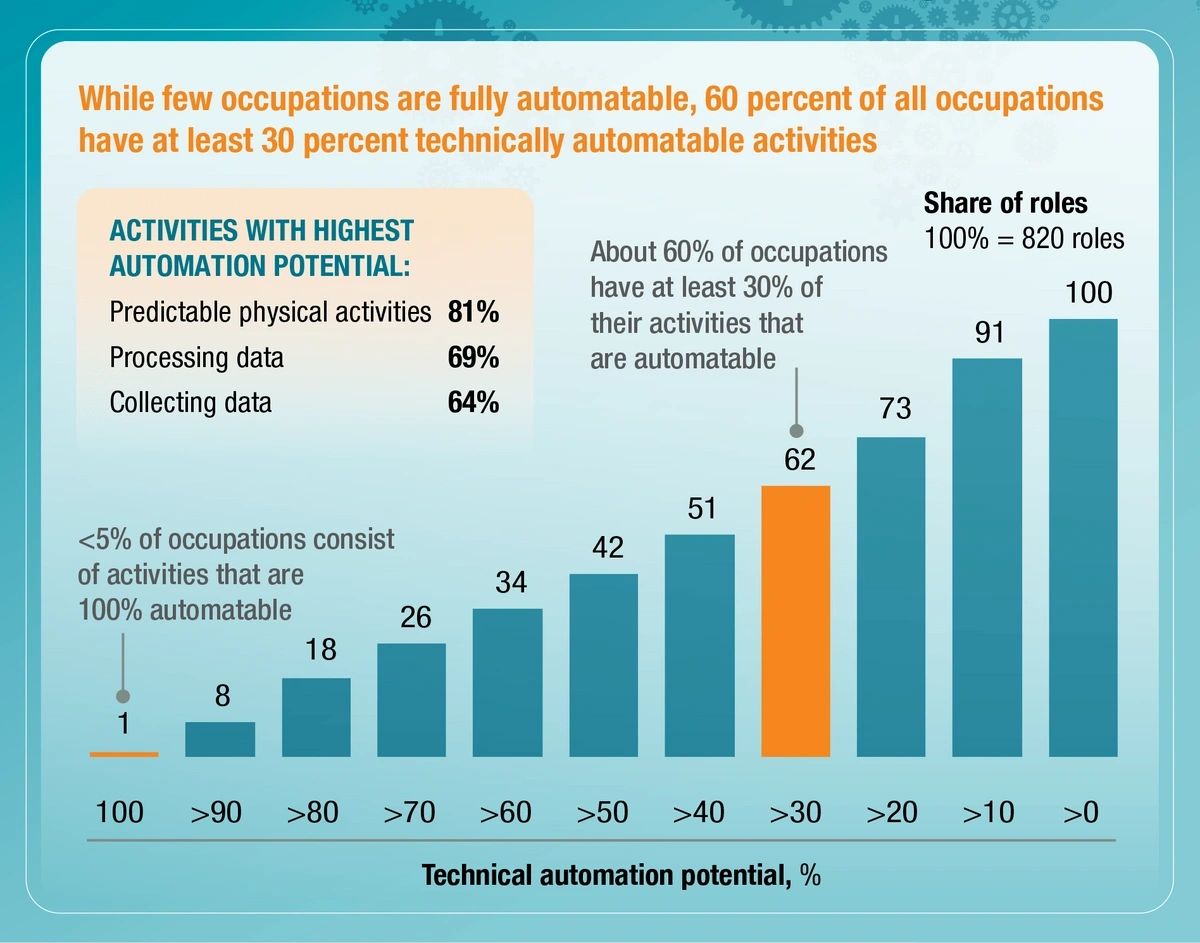

In fact, AI solutions can automate close to 70% of all data processing work and 64% of data collection work.

AI solutions have the potential to automate more than 60% of the work associated with collecting and processing big data.
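Much of that processing work is routine filtering and cleansing. The sketch below shows what an automated cleansing step can look like; it is deliberately rule-based rather than AI-driven, and the field names (`id`, `email`) and rules are hypothetical. AI/ML tools can help by learning rules like these, or catching cases they miss, instead of relying on people to write every rule by hand.

```python
from typing import Any, Dict, Iterable, List

REQUIRED_FIELDS = ("id", "email")  # hypothetical schema for illustration


def _missing(value: Any) -> bool:
    """Treat None and blank strings as missing values."""
    return value is None or str(value).strip() == ""


def cleanse(records: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Filter incomplete records, normalize fields, and drop duplicates."""
    seen_emails = set()
    cleaned = []
    for raw in records:
        # Filtering: skip records missing any required field.
        if any(_missing(raw.get(f)) for f in REQUIRED_FIELDS):
            continue
        # Normalization: trim all string values and lowercase the email.
        rec = {k: v.strip() if isinstance(v, str) else v for k, v in raw.items()}
        rec["email"] = str(rec["email"]).lower()
        # Deduplication: keep only the first record per normalized email.
        if rec["email"] in seen_emails:
            continue
        seen_emails.add(rec["email"])
        cleaned.append(rec)
    return cleaned


raw_records = [
    {"id": "1", "email": " Ana@Example.com "},
    {"id": "2", "email": "ana@example.com"},  # duplicate after normalization
    {"id": "3", "email": ""},  # missing email
    {"id": "4", "email": "bo@example.com"},
]
print(cleanse(raw_records))
```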

In turn, machine learning can automatically identify patterns in the data, make predictions, and create decision-making algorithms.
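As a minimal illustration of “identify patterns and make predictions,” the sketch below fits a straight line to hypothetical data with ordinary least squares and uses it to forecast a new value. Real ML systems use far richer models; this only shows the basic learn-then-predict loop.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical data: weekly ad spend (in $k) and resulting orders.
spend = [1, 2, 3, 4, 5]
orders = [12, 19, 31, 38, 50]
intercept, slope = fit_line(spend, orders)  # the "pattern" learned from data
print(round(intercept + slope * 6, 1))  # prediction for $6k spend; prints 58.5
```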

These automated solutions are a critical piece of the big data puzzle because nearly 40% of businesses say they’re not confident they’ll be able to handle the big data influx of the future.

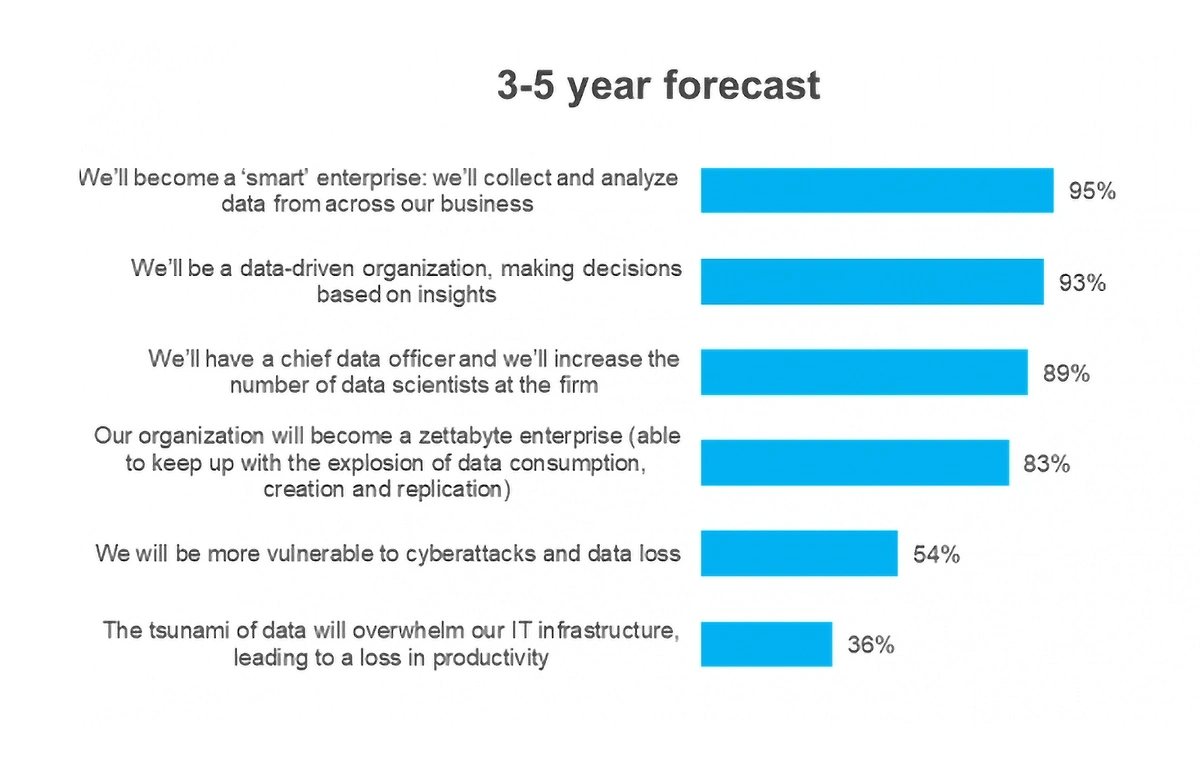

More than 80% of organizations believe they’ll be handling zettabytes of data by 2025, but 36% believe they won’t be able to handle all of it.

One emerging trend is that these tools have become more viable for businesses of all sizes and in a wide variety of industries in recent years.

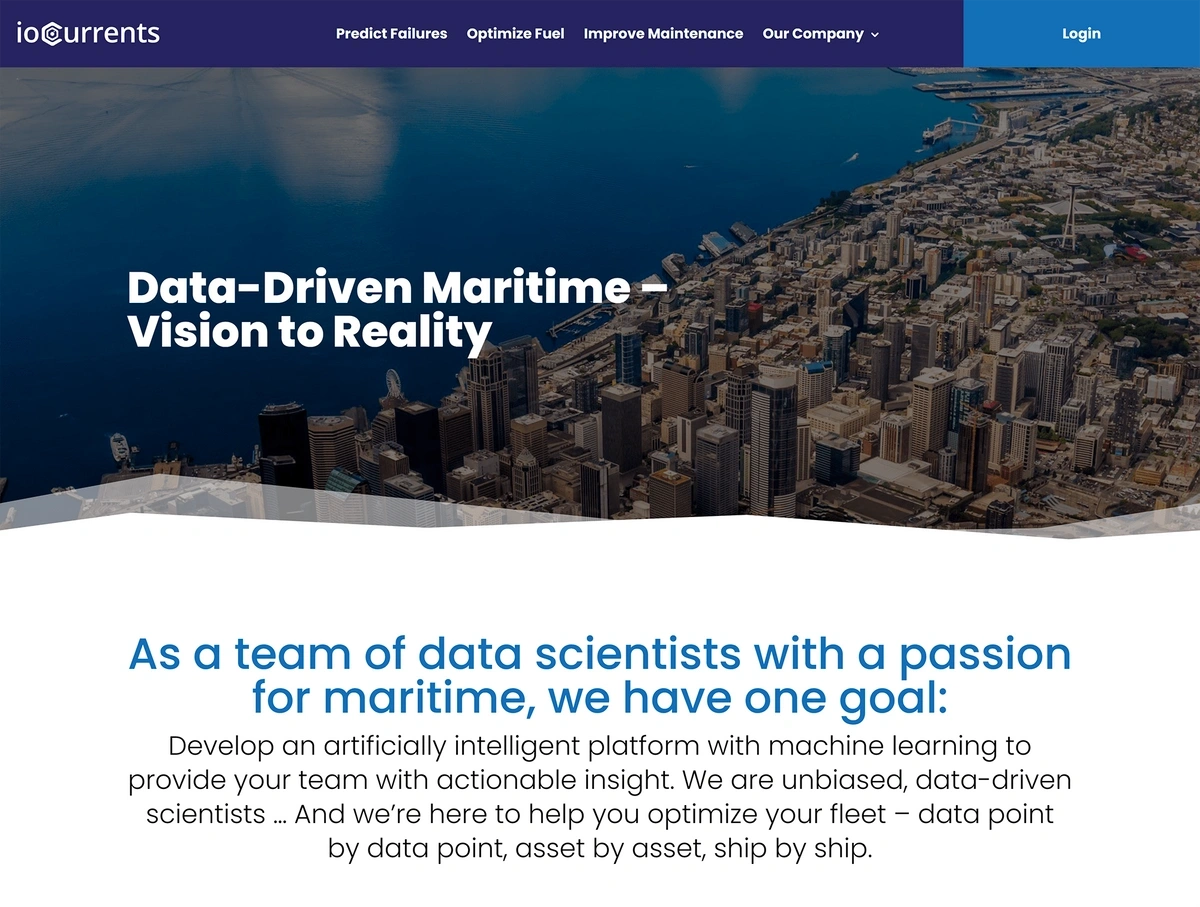

ioCurrents is a startup that’s bringing AI/ML solutions to the maritime industry.

ioCurrents’ AI/ML solution is fed data from an onboard IoT device.

Their solution aims to predict failures, optimize fuel efficiency, and extend the maintenance schedules of vessels.

The company reports that its machine learning models can be up and running after less than a month of data collection.
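The article does not describe ioCurrents’ models, so the following is a generic, swapped-in technique rather than the company’s method: a rolling z-score detector that flags sensor readings far outside their recent baseline, a common first step in predictive maintenance. The readings, window size, and threshold are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Iterable, List


def flag_anomalies(
    readings: Iterable[float], window: int = 20, threshold: float = 3.0
) -> List[int]:
    """Return indices of readings that deviate strongly from the recent baseline.

    A reading is flagged when it lies more than `threshold` standard deviations
    from the mean of the previous `window` readings (a rolling z-score).
    """
    history: deque = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # Skip perfectly flat baselines (sigma == 0) to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(i)
        history.append(value)
    return flagged


# Hypothetical engine temperatures: a steady baseline, then one spike.
readings = [70.0, 71.0] * 10 + [70.5, 95.0, 70.8]
print(flag_anomalies(readings))  # prints [21]
```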

One popular way in which businesses are using AI/ML analysis of big data is by studying their target audience.

In the coming years, we expect these tools to give companies an increasingly accurate picture of their target audience, one they couldn’t get from human analysis alone. That picture includes detailed preferences and behaviors, as well as predictions of future business outcomes.

Uber is one consumer-facing company harnessing the power of big data, working with several hundred petabytes of it.

The company uses automated AI/ML solutions to make decisions like estimating large-scale demand, matching drivers with riders, and setting fares.
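Uber’s actual matching system is not described here and is far more sophisticated. As a hedged illustration of the basic matching problem only, this sketch greedily assigns each rider the nearest available driver using straight-line distance; the IDs and coordinates are hypothetical.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def match_riders(riders: Dict[str, Point], drivers: Dict[str, Point]) -> Dict[str, str]:
    """Greedily pair each rider with the nearest still-available driver.

    Riders are served in insertion order; distance is straight-line
    (Euclidean), which ignores roads and traffic.
    """
    available = dict(drivers)
    assignments: Dict[str, str] = {}
    for rider_id, rider_pos in riders.items():
        if not available:
            break  # more riders than drivers: the rest wait
        driver_id = min(available, key=lambda d: math.dist(rider_pos, available[d]))
        assignments[rider_id] = driver_id
        del available[driver_id]  # a driver can take only one rider
    return assignments


riders = {"r1": (0.0, 0.0), "r2": (5.0, 5.0)}
drivers = {"d1": (4.0, 4.0), "d2": (1.0, 0.0), "d3": (10.0, 10.0)}
print(match_riders(riders, drivers))  # prints {'r1': 'd2', 'r2': 'd1'}
```

Greedy matching is simple but not globally optimal; minimizing total pickup distance across all pairs is an assignment problem, typically solved with methods such as the Hungarian algorithm.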

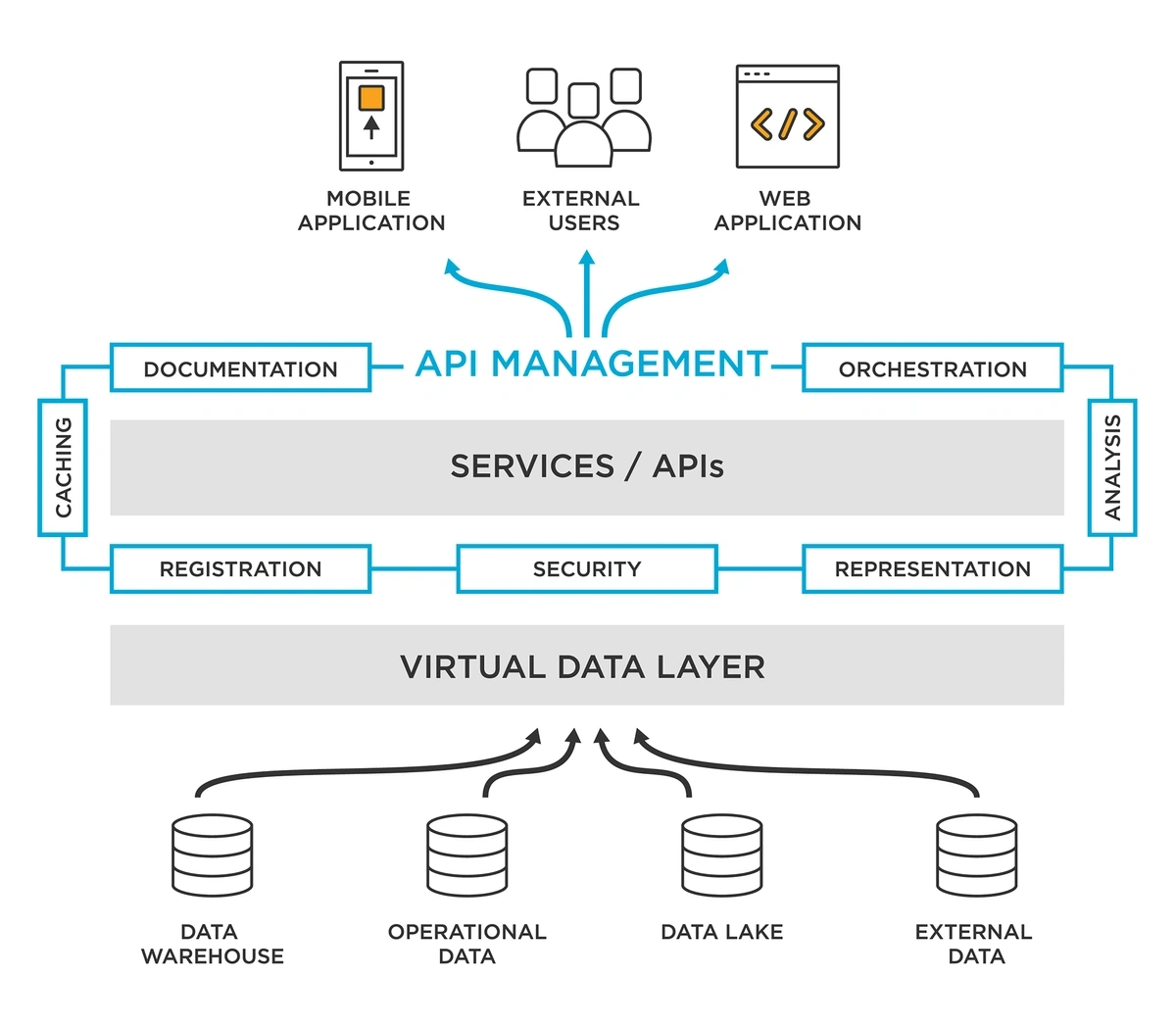

3. Data-as-a-Service Offers Scalable, Cost-Effective Management

The data-as-a-service (DaaS) market was estimated to hit $10.7 billion in 2023.

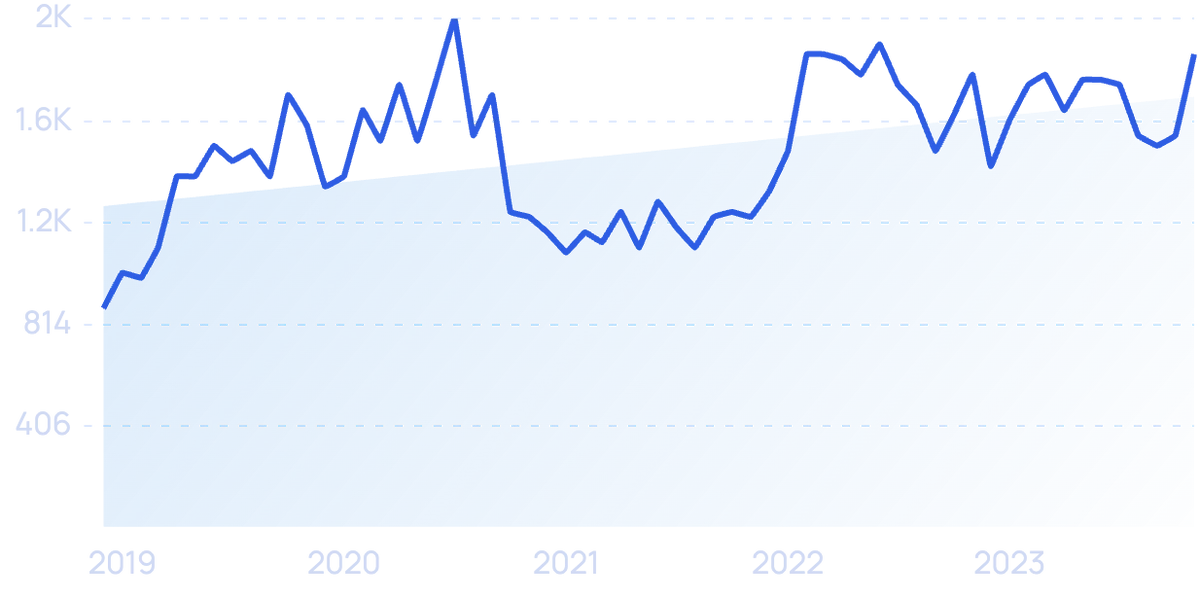

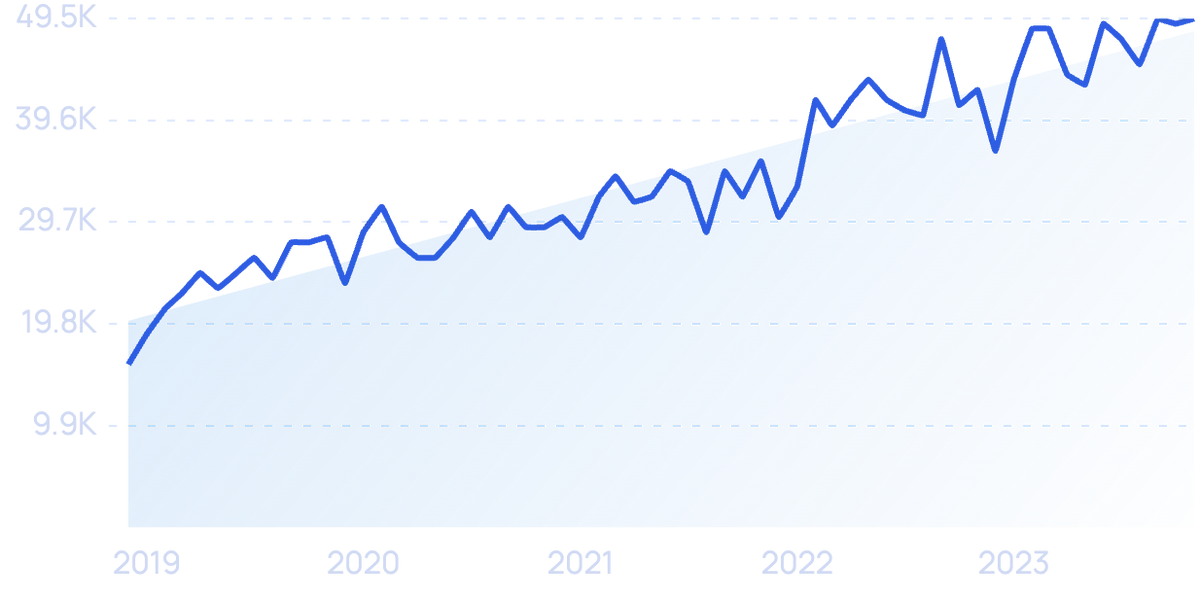

Search interest in “Data as a Service” is up nearly 300% in the past 5 years.

This market includes cloud-based tools used to collect, analyze, and manage data.

By utilizing DaaS, companies can reap the benefits of big data without having to build their own data collection solutions or expensive storage platforms.

DaaS platforms can lower costs and enable agile decision-making for organizations that would otherwise struggle to manage big data.

For many businesses, using a DaaS provider can be the most cost-efficient and strategic way to manage their big data needs.

Nearly 40% of IT professionals say their data storage and backup is run on an as-a-service platform.

AWS, Microsoft Azure, and Google Cloud (through BigQuery) all offer DaaS options.

But startups in a few unique sectors have launched big data management options too.

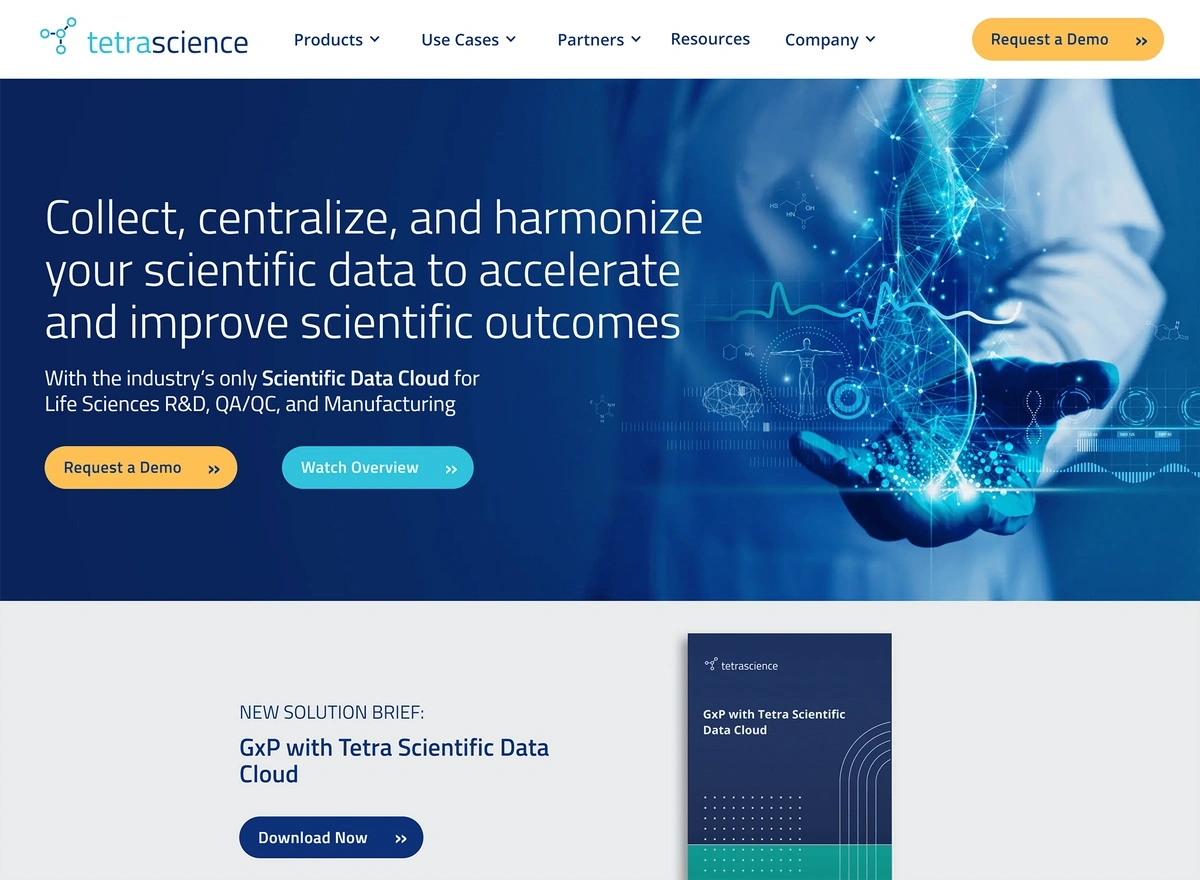

Tetrascience is a cloud-native data platform built specifically for the scientific community. It gives scientific labs a way to harmonize all of the data from their instruments and systems.
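“Harmonizing” instrument data generally means mapping each vendor’s export format onto one shared schema with consistent names and units. The sketch below illustrates that idea with two hypothetical vendor formats; it is not Tetrascience’s implementation.

```python
from typing import Any, Dict

# Hypothetical field mappings for two instrument vendors' export formats.
FIELD_MAPS = {
    "vendor_a": {"SampleID": "sample_id", "Temp_C": "temperature_c", "Ts": "timestamp"},
    "vendor_b": {"sample": "sample_id", "temp_f": "temperature_f", "time": "timestamp"},
}


def harmonize(record: Dict[str, Any], source: str) -> Dict[str, Any]:
    """Map a vendor-specific record onto one shared schema (Celsius temperatures)."""
    mapping = FIELD_MAPS[source]
    out = {target: record[src] for src, target in mapping.items() if src in record}
    if "temperature_f" in out:
        # Convert units so every harmonized record uses the same scale.
        out["temperature_c"] = round((out.pop("temperature_f") - 32) * 5 / 9, 2)
    out["source"] = source  # keep lineage: where the record came from
    return out


print(harmonize({"SampleID": "S1", "Temp_C": 37.0, "Ts": "2022-06-01T09:00"}, "vendor_a"))
print(harmonize({"sample": "S2", "temp_f": 98.6, "time": "2022-06-01T09:05"}, "vendor_b"))
```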

Tetrascience posted a 111% increase in annual recurring revenue in the first half of 2022.

According to company executives, 13 of the top 25 global drug manufacturers use this DaaS platform.

The company recently released a new platform expansion that allows the kind of cohesive data management that industry leaders say can lead to AI/ML-based pharmaceutical developments.

DaaS leans into another big data trend: democratization.

Search volume for “data democratization” has climbed nearly 3,100% over the last 5 years.

Data democratization means that the power of big data, once reserved for data scientists and engineers, is now in the hands of employees throughout the company.

Through DaaS platforms, non-technical staff have access to user-friendly tools and applications, giving them the opportunity to gain insights and work more efficiently.

In one survey, 90% of business leaders said data democratization is a priority.

4. Data Lakes and Lakehouses Provide Optimized Storage

Businesses that want to run deeper analyses on their big data are investing in data lakes or data lakehouses.

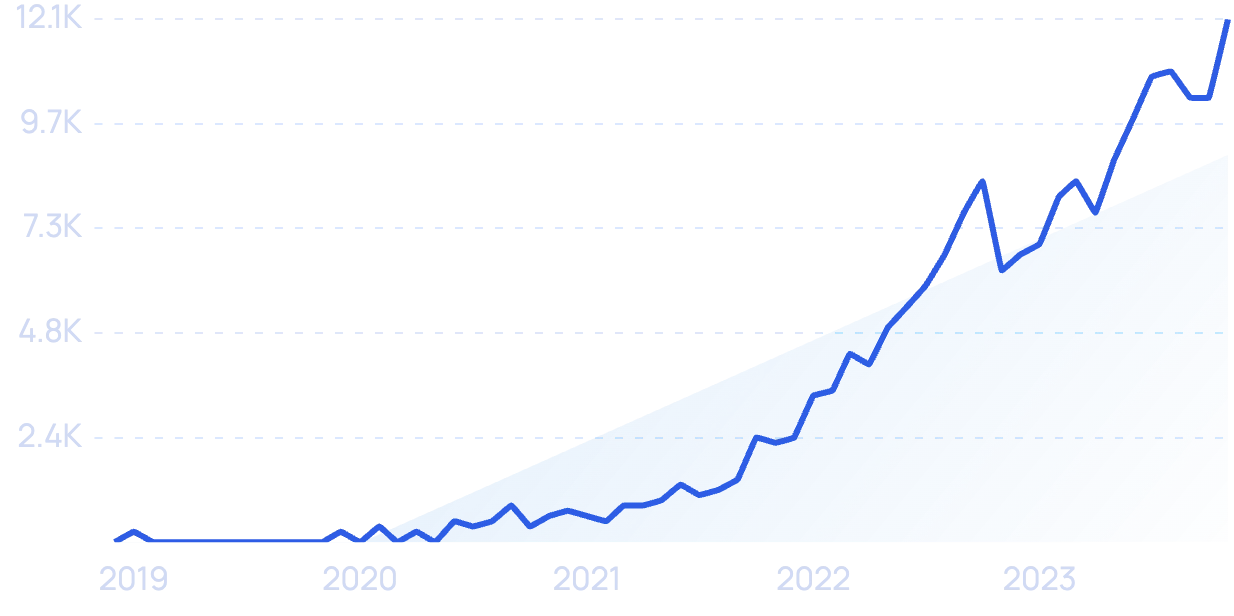

Search volume for “data lakehouse” is up 99x+ since 2018.

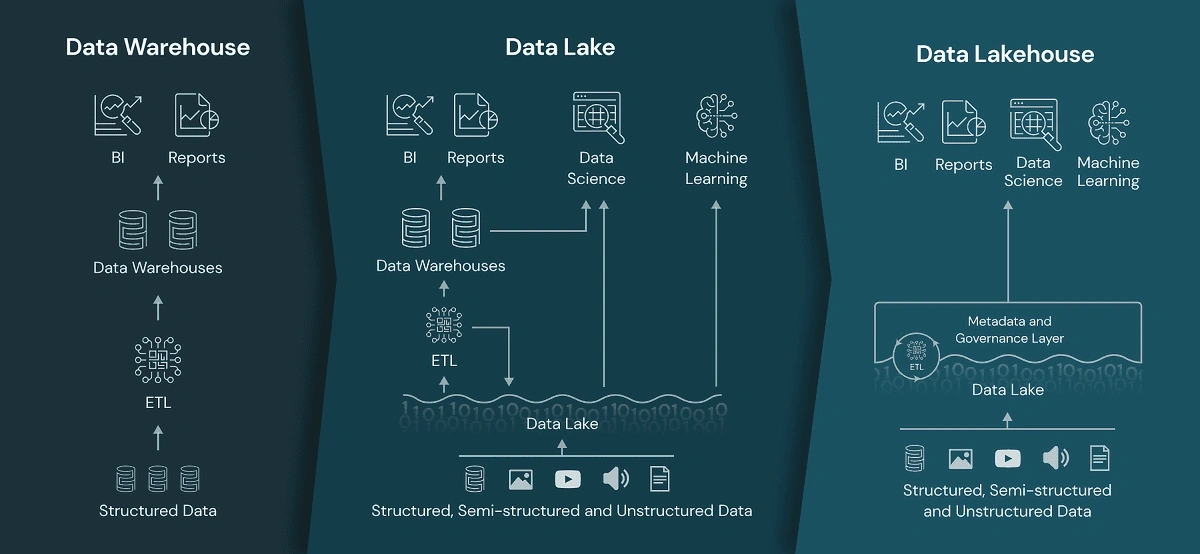

Data lakes allow organizations to store raw structured, semi-structured, and unstructured data on a “store now, analyze later” premise. They have no practical size limits and can ingest data from almost any system at any speed.
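The “store now, analyze later” premise is often called schema-on-read: data lands in raw form, and structure is applied only when someone queries it. In this minimal sketch, an in-memory list of JSON lines stands in for lake storage (an assumption for illustration), and the helper names are hypothetical.

```python
import json
from typing import Any, Dict, Iterable, Iterator, List


def ingest(raw_zone: List[str], payload: Dict[str, Any]) -> None:
    """'Store now': append the payload as-is, with no schema enforced."""
    raw_zone.append(json.dumps(payload))


def read_with_schema(raw_zone: Iterable[str], fields: List[str]) -> Iterator[Dict[str, Any]]:
    """'Analyze later': apply a schema at query time, projecting the requested
    fields and filling any that a record lacks with None."""
    for line in raw_zone:
        doc = json.loads(line)
        yield {f: doc.get(f) for f in fields}


raw_zone: List[str] = []
ingest(raw_zone, {"user": "u1", "event": "click", "page": "/home"})
ingest(raw_zone, {"user": "u2", "event": "purchase", "amount": 19.99})
ingest(raw_zone, {"sensor": "t-7", "reading": 21.4})  # unrelated shape, still accepted
print(list(read_with_schema(raw_zone, ["user", "amount"])))
```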

These platforms can simplify data management, improve security, and speed up data insights.

Data lakehouses are a combination of data lakes and data warehouses.

Data warehouses are typically databases with clean, structured data. They’re often used for repeatable reporting like sales reports or website traffic.

So, data lakehouses combine the best of both options: the scale and flexibility of data lakes with the data management capabilities of data warehouses.

Data lakehouses give teams the opportunity to access up-to-date data without needing to tap into multiple systems.
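Warehouse-style data management usually means schema-on-write: rows are validated before they reach a curated table, so repeatable reports can trust them. Open table formats such as Delta Lake and Apache Iceberg bring this kind of control to lake storage. The sketch below shows only the core idea, with a hypothetical `orders` schema and in-memory tables.

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]

# Hypothetical schema for a curated "orders" table: column name -> required type.
ORDERS_SCHEMA: Dict[str, type] = {"order_id": str, "amount": float, "region": str}


def write_validated(rows: List[Row], schema: Dict[str, type]) -> Tuple[List[Row], List[Row]]:
    """Schema-on-write: accept only rows whose columns and types match exactly.

    Returns (accepted, rejected); rejected rows are kept for inspection
    instead of silently corrupting downstream reports.
    """
    accepted: List[Row] = []
    rejected: List[Row] = []
    for row in rows:
        matches = set(row) == set(schema) and all(
            isinstance(row[col], typ) for col, typ in schema.items()
        )
        if matches:
            accepted.append(row)
        else:
            rejected.append(row)
    return accepted, rejected


def revenue_by_region(table: List[Row]) -> Dict[str, float]:
    """A repeatable, warehouse-style report over the validated table."""
    totals: Dict[str, float] = {}
    for row in table:
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return totals


incoming = [
    {"order_id": "o1", "amount": 20.0, "region": "EU"},
    {"order_id": "o2", "amount": "n/a", "region": "US"},  # wrong type
    {"order_id": "o3", "amount": 5.5, "region": "EU"},
    {"order_id": "o4", "amount": 7.0},  # missing column
]
curated, quarantine = write_validated(incoming, ORDERS_SCHEMA)
print(revenue_by_region(curated), len(quarantine))
```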

However, the data lakehouse concept is still quite new, and the technology remains immature.

As for data lakes, Mordor Intelligence predicts the market will grow at a CAGR of nearly 30% through 2026.

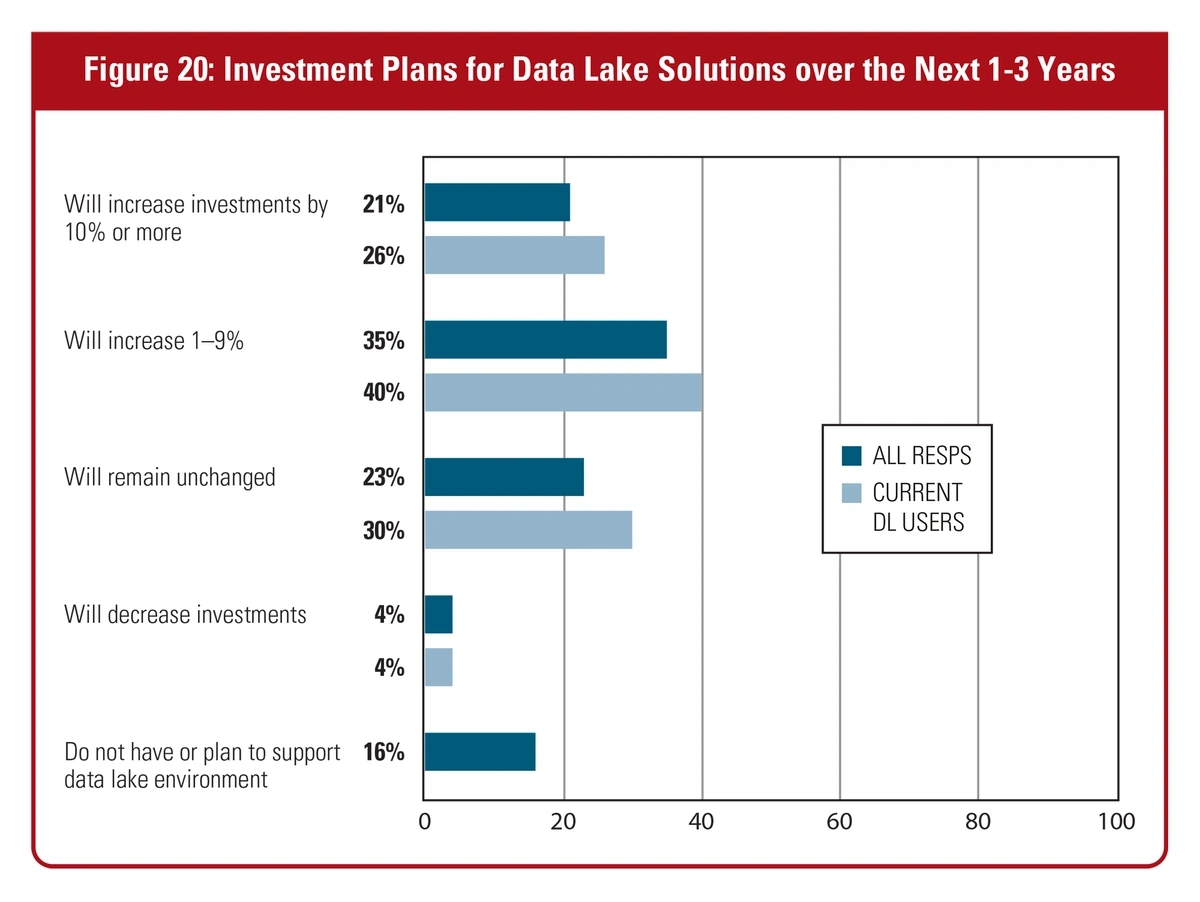

In a 2022 survey, 21% of respondents said they plan to increase their data lake investments by 10% or more over the next three years, and 35% plan to increase that spending by up to 9%.

IT leaders are looking for data lake solutions to provide the flexibility and scalability they need.

A large portion of this growth is coming from the fact that small businesses are now generating petabytes of data and require an affordable storage solution.

Hadoop has long been the go-to data lake solution. However, the platform can be a multimillion-dollar expense for businesses.

New cloud options are making data lake technology widely available.

Snowflake is a cloud-based data lake provider that lets businesses of all sizes load and optimize data without having to manage infrastructure.

In 2022, the company posted 67% year-over-year growth for the third quarter.

At that time, the company reported more than 7,000 customers and $523 million in product revenue.

Snowflake’s data lake solution gives companies the ability to access structured, semi-structured, and unstructured data all from one platform.

5. Transitions in Big Data Governance

With privacy, bias, and other regulatory concerns surrounding big data, experts predict that companies will be paying special attention to data governance in the coming months.

Search volume for “data governance” is up 213% in recent years.

In 2020, only 10% of the global population had their personal data protected by privacy laws.

Gartner predicts that number will surge to 75% by 2024.

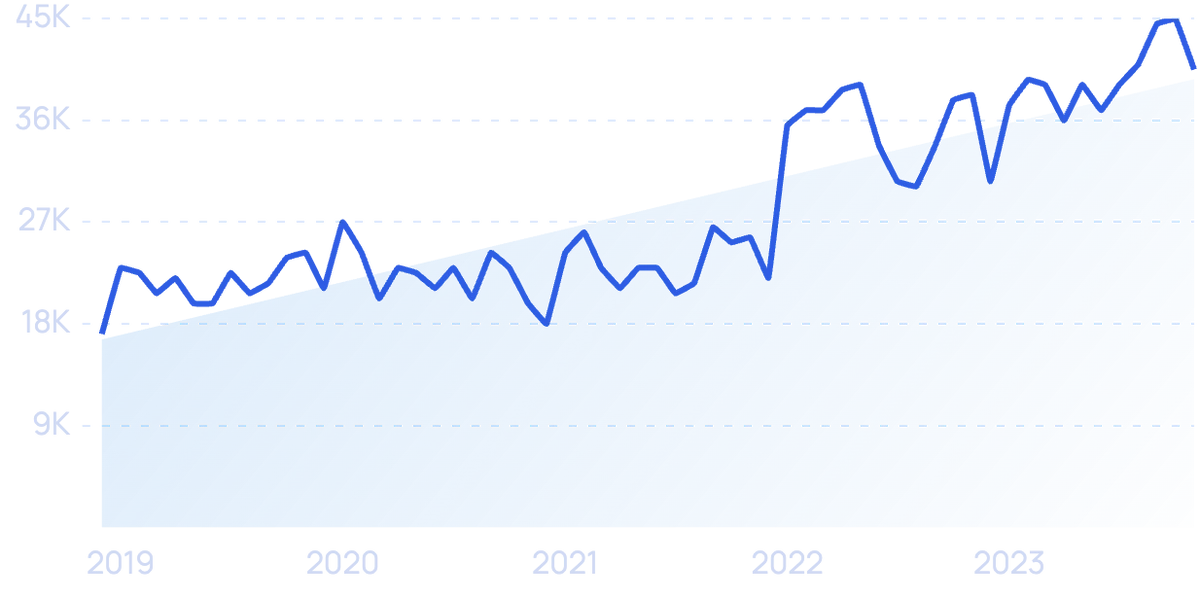

Search interest in “data privacy” continues to grow.

While big data is regulated, at least in part, by the European GDPR, Canadian PIPEDA, and Chinese PIPL, there are no specific federal regulations on big data in the United States.

US lawmakers point to the Computer Fraud and Abuse Act (1986), the Health Insurance Portability and Accountability Act (1996), and the Children’s Online Privacy Protection Act (1998) as the main federal safeguards for data privacy.

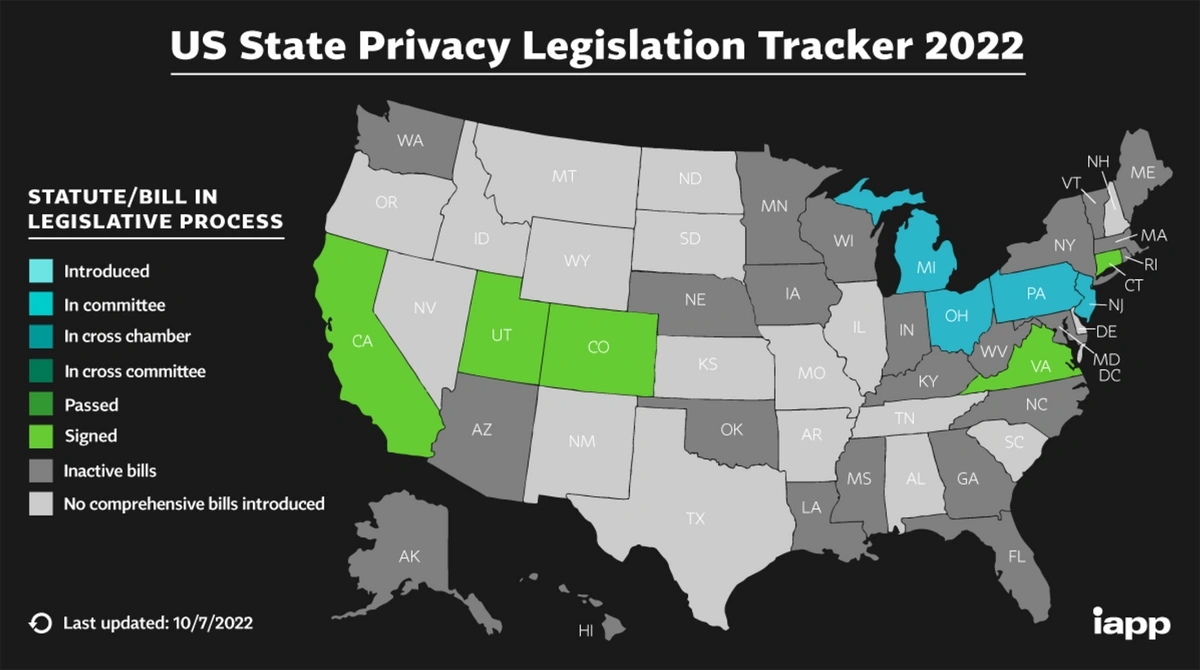

In addition, many states are beginning to pass privacy-based legislation.

As of October 2022, most states did not yet have comprehensive data privacy laws.

Some experts in the tech industry warn that the regulation of big data will soon be impossible.

They cite the sheer scale and complexity of the data as reasons why individuals will never really be able to take control of their personal data.

As of now, California has the country’s most stringent laws applying to big data.

Individuals there have the right to sue a company if they’ve faced certain types of data breaches.

They can also opt out of sharing data via a browser-based or device-based action instead of having to do so on every site they visit.
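The text does not name the browser-based mechanism. One example of such a signal is Global Privacy Control (GPC), which participating browsers send as the HTTP request header `Sec-GPC: 1`. A server-side check might look like this minimal sketch; the function name is hypothetical, and how a business must respond to the signal depends on the applicable law.

```python
from typing import Mapping


def opted_out_via_gpc(headers: Mapping[str, str]) -> bool:
    """Return True if the request carries a Global Privacy Control signal.

    Participating browsers send `Sec-GPC: 1`. HTTP header names are
    case-insensitive, so names are normalized before the lookup.
    """
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    return normalized.get("sec-gpc") == "1"


request_headers = {"Sec-GPC": "1", "User-Agent": "example-browser"}
if opted_out_via_gpc(request_headers):
    print("Treat this visitor as opted out of data sale/sharing")
```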

Corporations are carefully watching legislative developments as well as consumer sentiment. Both of these things could drive future privacy and data governance solutions.

In November 2022, Google agreed to pay $392 million to settle claims that it illegally collected location data from individuals.

As part of an effort to reassure users, the company pledged to offer more privacy controls and be more transparent with how it collects data.

In addition to regulating privacy, there are concerns that the use of big data and machine learning can lead to gender and racial bias.

Recent examples come from the medical and law enforcement sectors.
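One common way to screen a model’s decisions for this kind of bias is to compare selection rates across groups. This sketch computes each group’s rate and the ratio of the lowest to the highest, which is sometimes judged against the “four-fifths rule” from US employment guidelines. The groups and outcomes are hypothetical, and a low ratio is a prompt for investigation rather than proof of bias.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive model outcomes (e.g., approvals) for each group."""
    totals: Counter = Counter()
    positives: Counter = Counter()
    for group, selected in outcomes:
        totals[group] += 1
        positives[group] += int(selected)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest.

    Ratios below 0.8 are a common red flag under the four-fifths rule;
    this is a screening heuristic, not a complete fairness audit.
    """
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical approval decisions for two groups.
decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
rates = selection_rates(decisions)
print(rates, round(disparate_impact_ratio(rates), 3))
```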

Conclusion

That wraps up our list of the top five trends related to big data.

Organizations from a variety of sectors are already seeing the tangible business benefits of big data, like improved efficiency, accelerated insights, and better customer targeting.

However, in many ways, the collection and management of big data is becoming more complex and challenging for businesses, especially SMBs. Many are collecting data without knowing how to store it or what to do with it. In addition, organizations of all sizes are seeing a need to devote additional attention to the privacy and regulatory concerns surrounding big data.

The emergence of DaaS providers and AI/ML platforms may be exactly what these businesses need.


Exploding Topics is owned by Semrush. Our mission is to provide accurate data and expert insights on emerging trends. Unless otherwise noted, this page’s content was written by either an employee or a paid contractor of Semrush Inc.


Written By

Josh is the Co-Founder and CTO of Exploding Topics. Josh has led Exploding Topics product development from the first line of co...