Get Advanced Insights on Any Topic

Discover Trends 12+ Months Before Everyone Else

How We Find Trends Before They Take Off

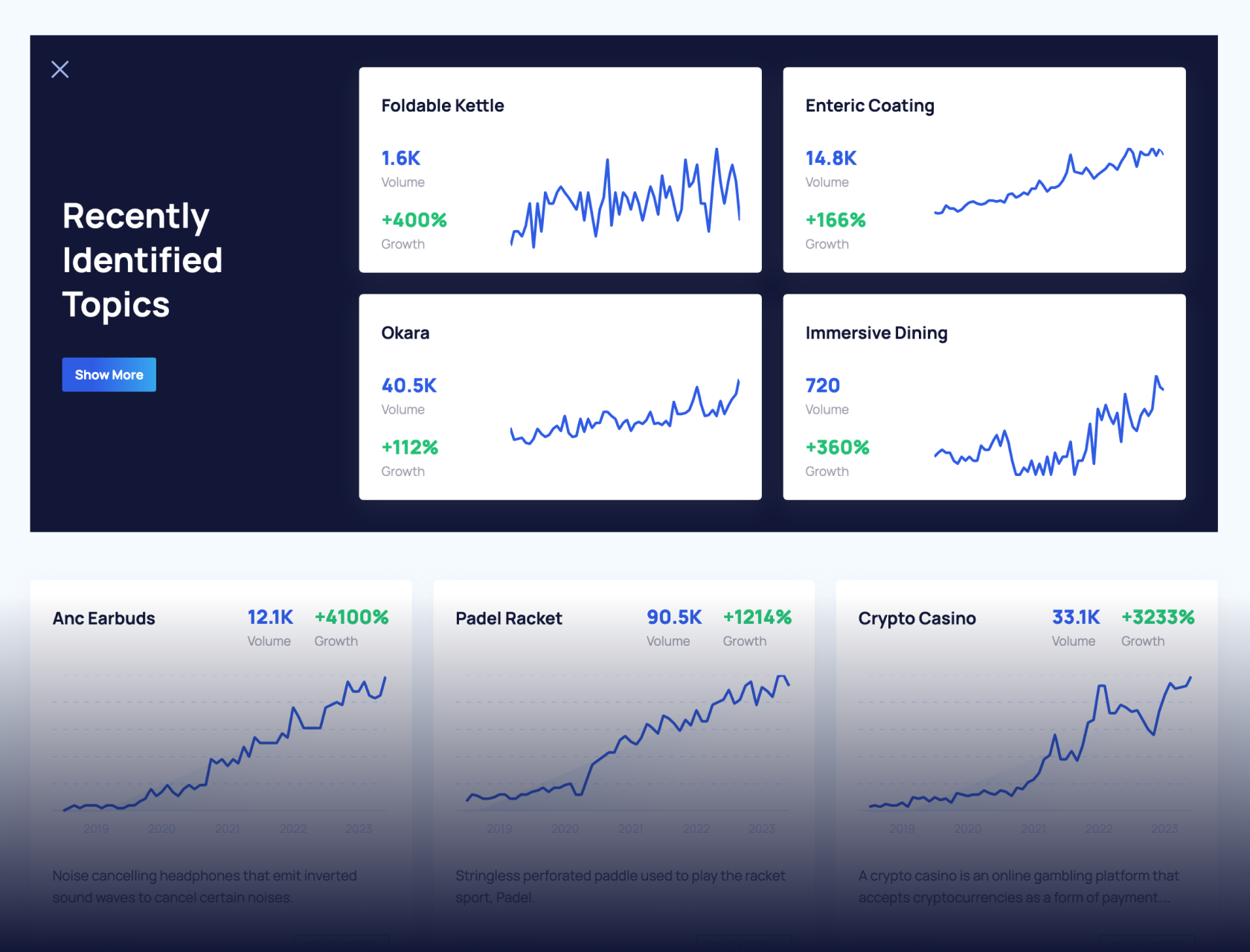

Exploding Topics’ advanced algorithm monitors millions of unstructured data points to spot trends early on.

Keyword Research

Performance Tracking

Competitor Intelligence

Fix Your Site’s SEO Issues in 30 Seconds

Find technical issues blocking search visibility. Get prioritized, actionable fixes in seconds.

Powered by data from

5 Important Data Storage Trends 2024-2026

IT leaders are dealing with more data than ever before. And the volume keeps increasing every day.

How can enterprises safely store this massive amount of data, but also maintain accessibility for employees?

It’s not an easy problem to solve, but there are many breakthroughs on the horizon that are set to disrupt the industry in the coming months.

Read this list of five trends to learn about recent developments in the data storage industry.

1. Scientific Progress Could Finally Make DNA Data Storage Feasible

Storing data on DNA molecules is an emerging technology trend in 2024.

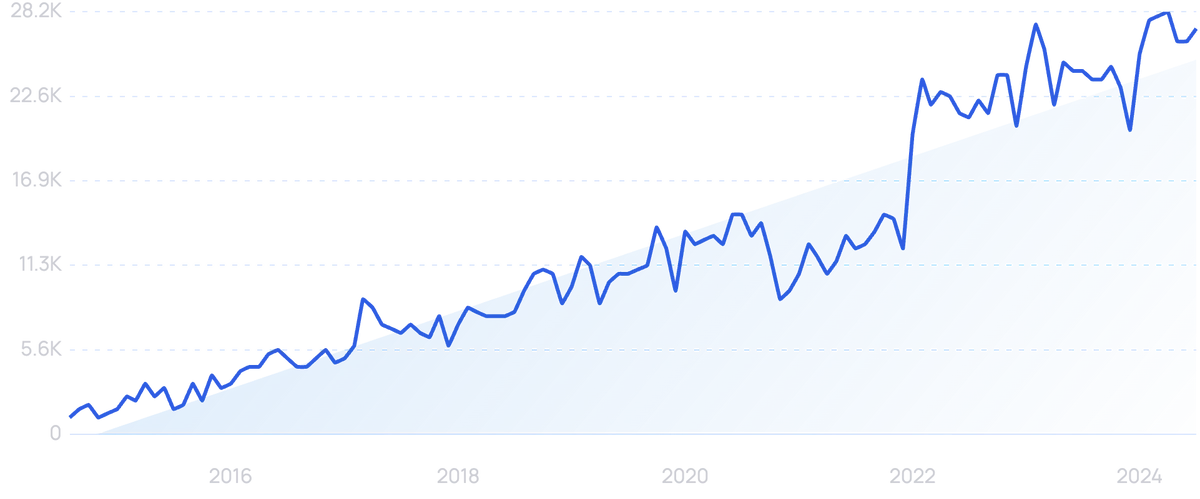

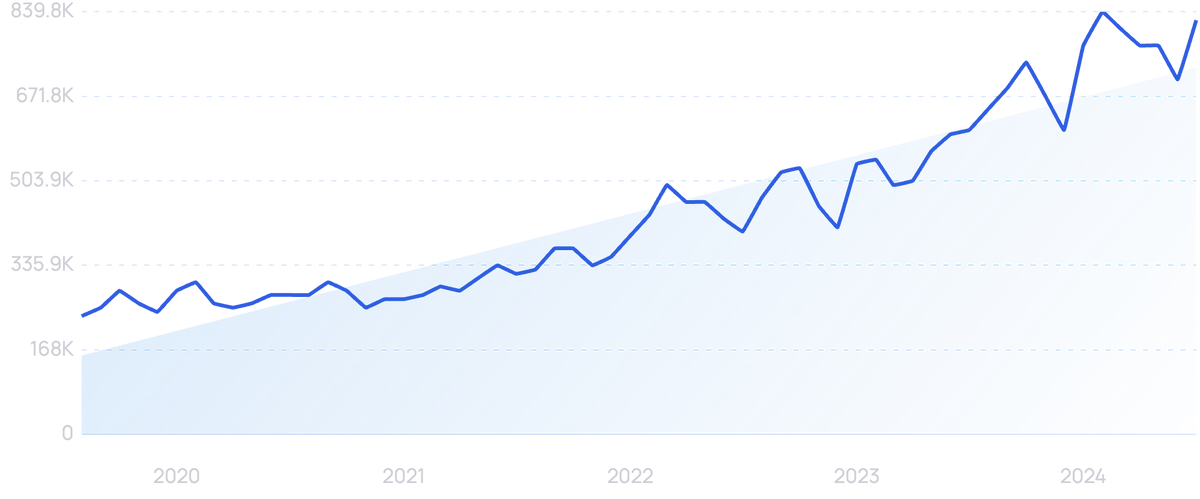

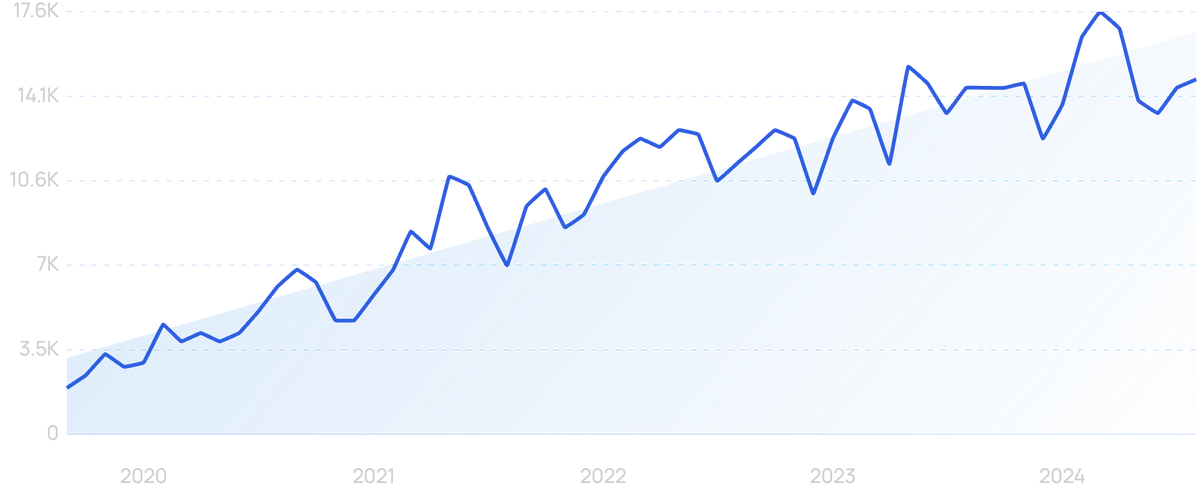

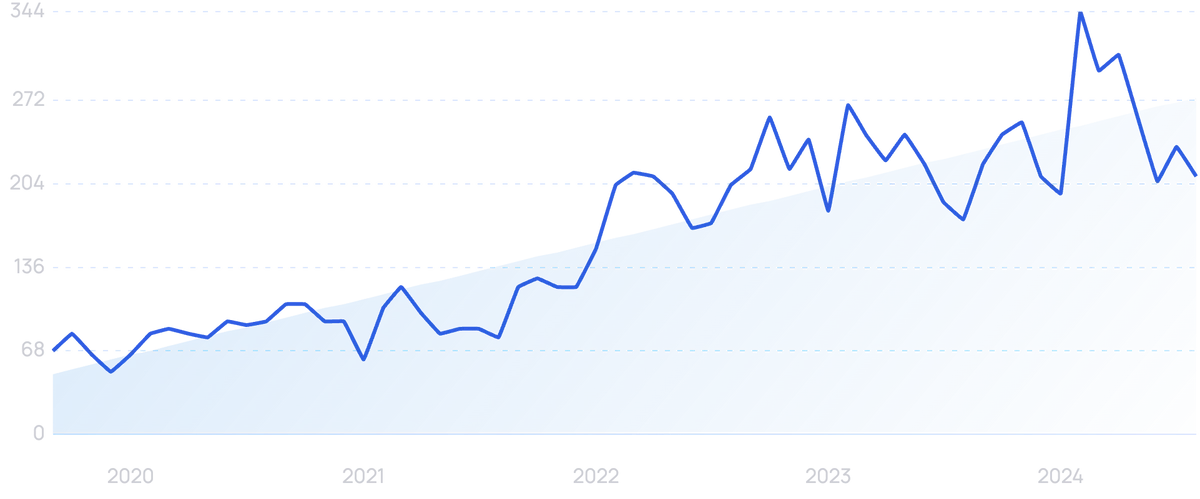

Searches for “DNA storage” over 10 years.

The DNA data storage market is predicted to grow at a CAGR of 65.8% through 2028.

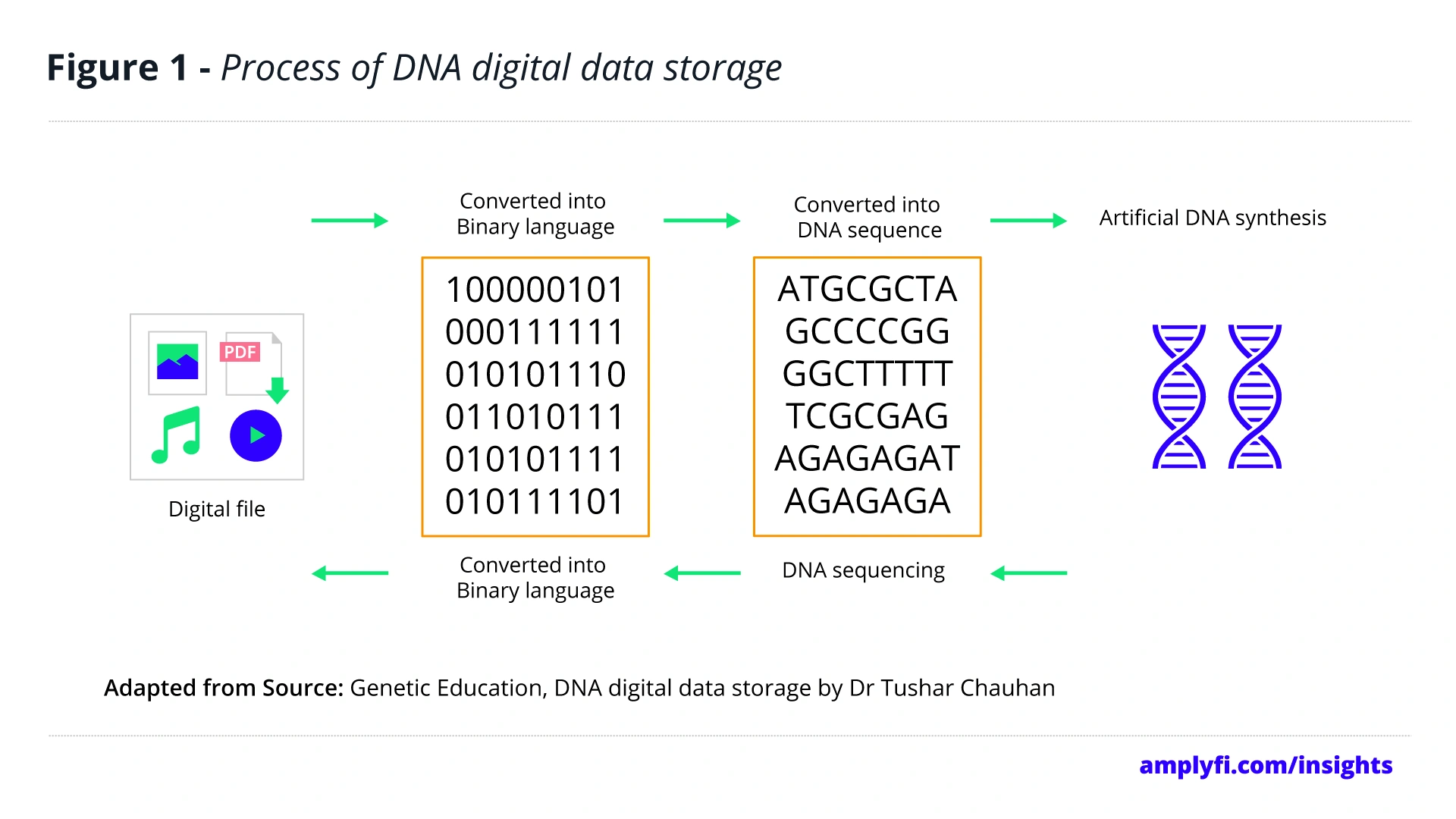

While computers understand binary code, DNA understands a four-letter code.

For DNA data storage, the data is first encoded as sequences of the four DNA bases (A, C, G, and T) and then synthesized into DNA strands.

The specific order of those four letters forms the basic building blocks of all life, and scientists have figured out how to write computer code in DNA code.
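To make the binary-to-DNA idea concrete, here is a minimal sketch that maps every two bits to one base. The mapping (00 to A, 01 to C, 10 to G, 11 to T) and the function names are assumptions chosen for illustration; real DNA storage schemes are more involved, adding error correction and avoiding sequences that are hard to synthesize.

```python
# Hypothetical 2-bits-per-base mapping, chosen only for illustration.
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode_to_dna(data: bytes) -> str:
    """Turn bytes into a string of A/C/G/T, two bits per base."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode_from_dna(strand: str) -> bytes:
    """Reverse the mapping: four bases become one byte again."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = encode_to_dna(b"Hi")
print(strand)                   # each byte becomes four bases
print(decode_from_dna(strand))  # round-trips back to the original bytes
```

Under this toy mapping, each byte takes four bases, so the strand is four times as long as the input in characters.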

There are multiple benefits of DNA storage: capacity, life span, and sustainability are just a few.

That said, increased capacity is the main reason that DNA data storage is appealing.

The world is predicted to have 175 ZB of data by 2025. That’s nearly a 3x increase over 2020 and a 5x increase over 2018.

Where can that massive amount of data go? Many tech leaders say the future is in DNA storage.

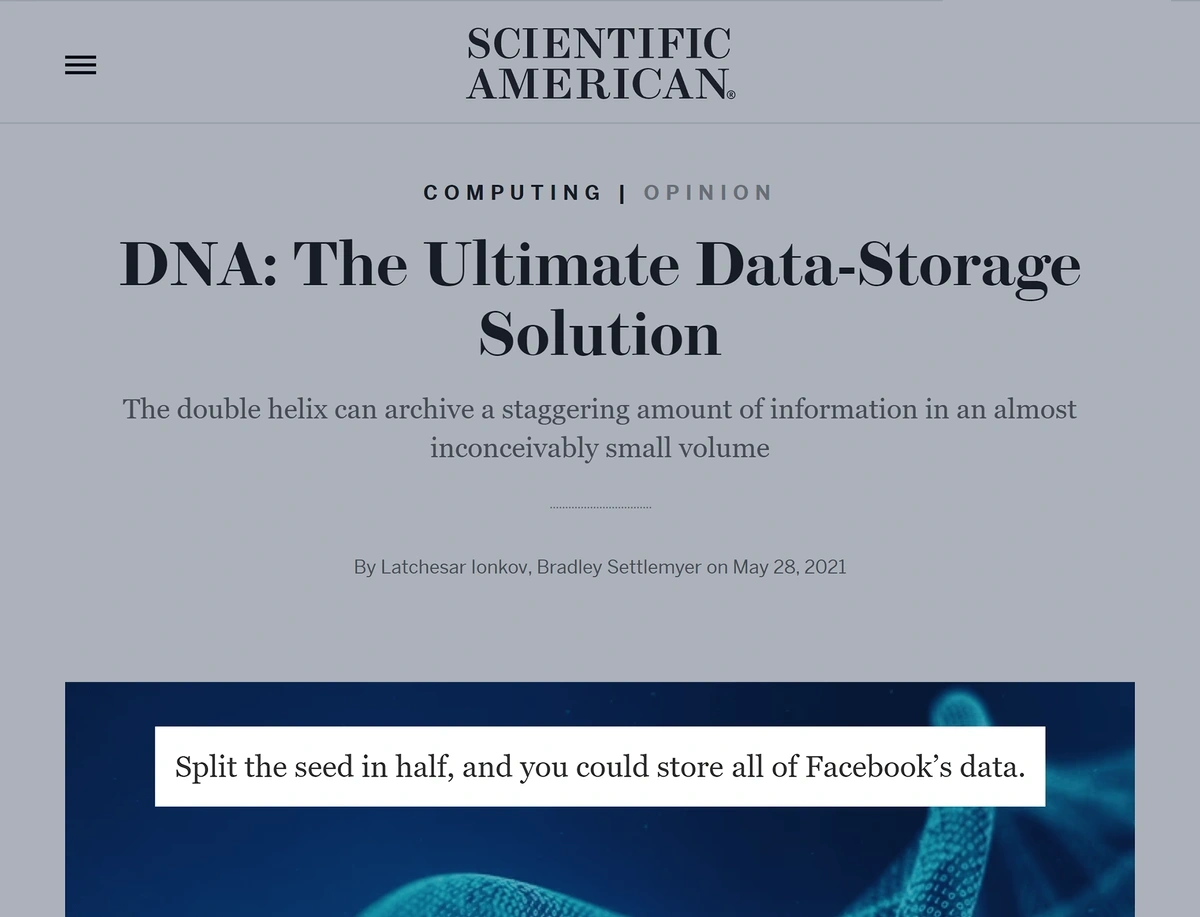

For example, DNA could store all of Facebook’s data in the space of half a poppy seed.

A coffee mug could store all of the world’s data.

Another advantage of DNA-based storage is its stability.

DNA degrades extremely slowly. If kept dry and cool, the files can last hundreds of thousands of years.

One of the main issues that’s held this trend back is cost.

With current DNA technology, it costs about $3,500 to synthesize 1 megabyte of data.

The cost of synthesis has long been the main problem associated with DNA data storage.

However, research is ongoing and some say that the cost could go down to $100 per terabyte of data by the end of 2024.
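A quick back-of-the-envelope calculation shows how large that projected drop is. This sketch uses only the two figures quoted above and assumes decimal units (1 TB = 1,000,000 MB); the variable names are illustrative.

```python
COST_PER_MB_USD = 3_500        # current synthesis cost quoted above
TARGET_COST_PER_TB_USD = 100   # projected cost quoted above
MB_PER_TB = 1_000_000          # assumption: decimal units

# Scale today's per-megabyte cost up to a full terabyte.
current_cost_per_tb = COST_PER_MB_USD * MB_PER_TB

# How many times cheaper synthesis would need to become.
reduction_factor = current_cost_per_tb / TARGET_COST_PER_TB_USD

print(f"Current cost per TB: ${current_cost_per_tb:,}")
print(f"Required reduction: {reduction_factor:,.0f}x")
```

In other words, the quoted figures imply a cost of $3.5 billion per terabyte today and a 35-million-fold reduction to reach the $100 target.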

Another issue is the speed of writing DNA.

The long-held record speed was 200 MB per day. However, in late 2021, researchers boosted that to 20 GB per day.

That’s still substantially slower than the speed to write to tape: 1,440 GB per hour.
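Putting both write speeds on the same per-day basis makes the gap easier to see. The sketch below uses only the figures quoted above and assumes 1 TB = 1,000 GB; the variable names are illustrative.

```python
DNA_WRITE_GB_PER_DAY = 20       # late-2021 record quoted above
TAPE_WRITE_GB_PER_HOUR = 1_440  # tape speed quoted above

# Convert tape throughput to a daily figure.
tape_write_gb_per_day = TAPE_WRITE_GB_PER_HOUR * 24

# How many times faster tape is than the DNA record.
speed_gap = tape_write_gb_per_day / DNA_WRITE_GB_PER_DAY

# Days needed to write 1 TB (assumed 1,000 GB) at the DNA record speed.
days_for_one_tb_dna = 1_000 / DNA_WRITE_GB_PER_DAY

print(f"Tape: {tape_write_gb_per_day:,} GB per day")
print(f"Tape is {speed_gap:,.0f}x faster than DNA writing")
print(f"Writing 1 TB to DNA would take {days_for_one_tb_dna:.0f} days")
```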

Catalog is a Boston-based company that’s pursuing DNA data storage.

The company recently announced it was partnering with Seagate to lower the cost and complexity of its DNA systems.

Catalog has raised nearly $60 million in funding.

Researchers at Catalog have the goal of commercializing the DNA storage process.

Some tech experts say DNA storage could be available commercially within five years.

2. Zero-Trust Architecture Aims to Improve Data Security

In a survey of Fortune 500 CEOs, two-thirds named cybersecurity as their top business risk.

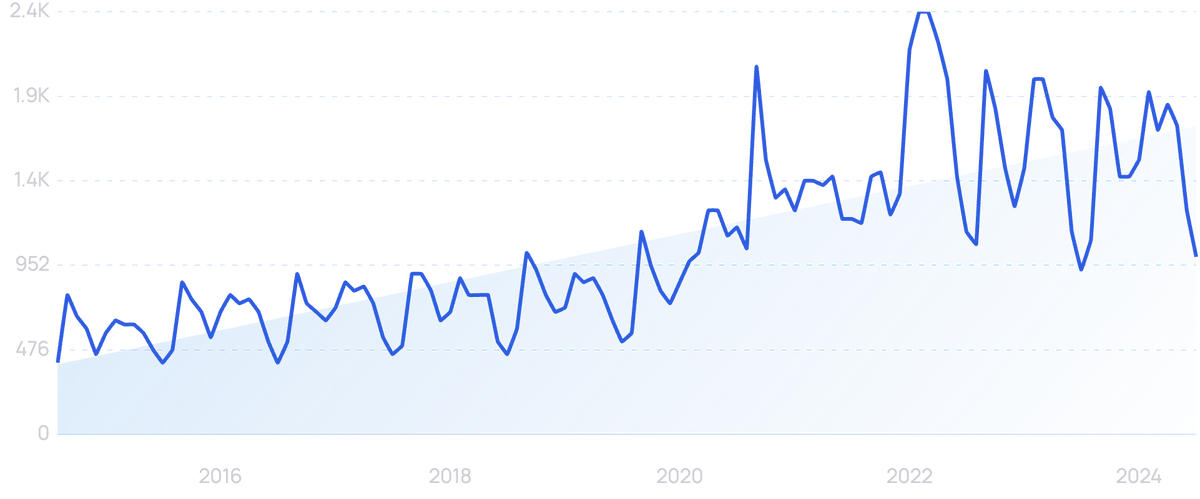

Search volume for “cybersecurity” has been steadily climbing, up more than 250% in the past 5 years.

In 2022, KPMG released a study showing that cybersecurity risks are increasing.

Nearly 85% of the business leaders in the study said they believe cyber risks are growing, and only 35% said their companies are properly protected.

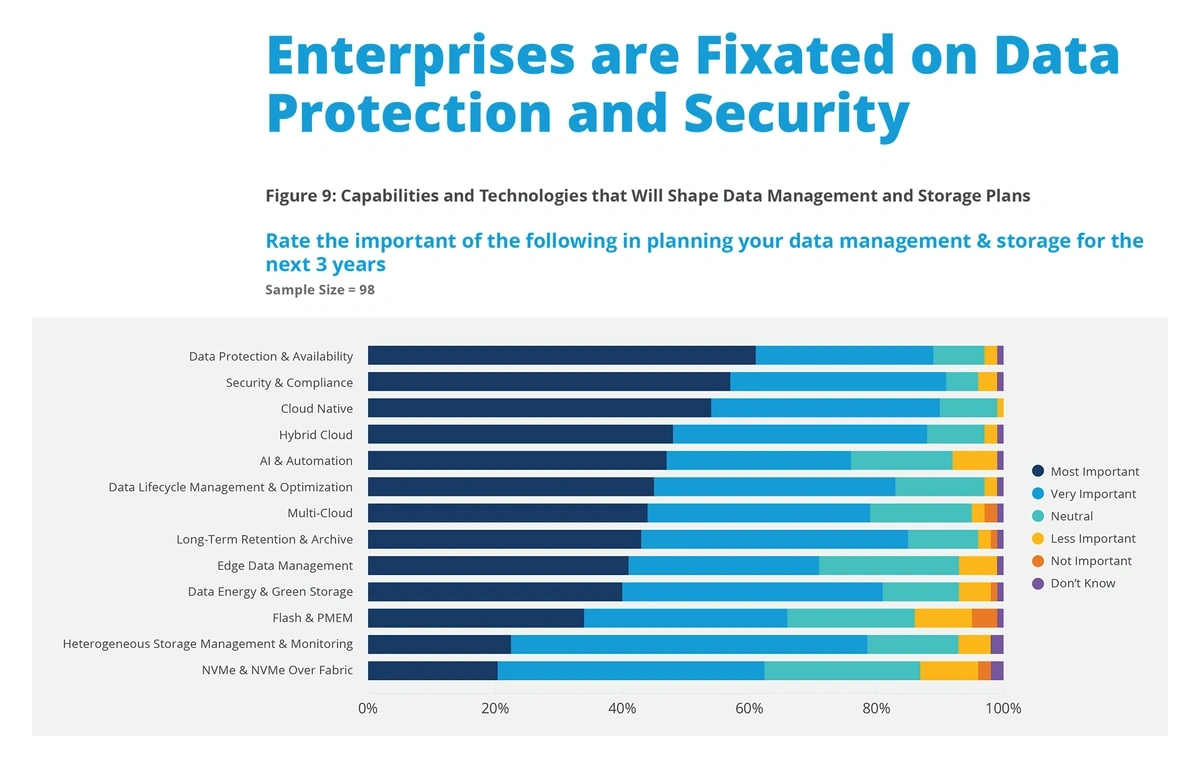

When IT decision-makers were asked about the most important aspects of data management and storage for the next three years, 61% rated data protection and availability as one of their most important factors.

In addition, 57% said security and compliance was one of their most important factors.

In a 2021 survey, data protection and security were ranked as the most important factors of data management moving forward into the next three years.

The storage security issue is likely more pressing than most businesses realize.

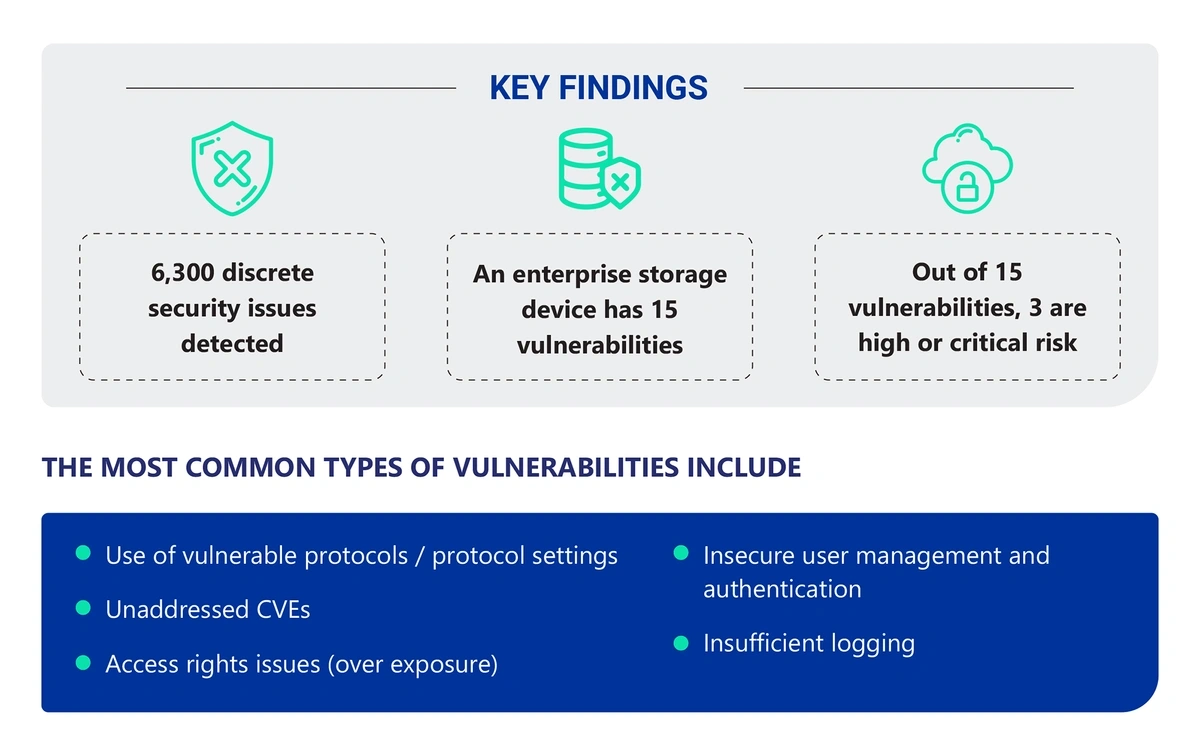

More than 400 high-end storage devices were analyzed in Continuity’s “The State of Storage Security Report.”

They found that each enterprise storage device has 15 security issues on average. Of those, three are considered high-risk issues.

In total, more than 6,300 discrete security issues were found.

Ransomware attacks have become especially prevalent.

In mid-2022, one report revealed an 80% increase in ransomware attacks year-over-year.

The educational sector has been heavily targeted by hackers in recent years.

The Los Angeles Unified School District suffered a massive data breach in 2022 when a Russian group stole nearly 300,000 files containing sensitive data and leaked them on the dark web.

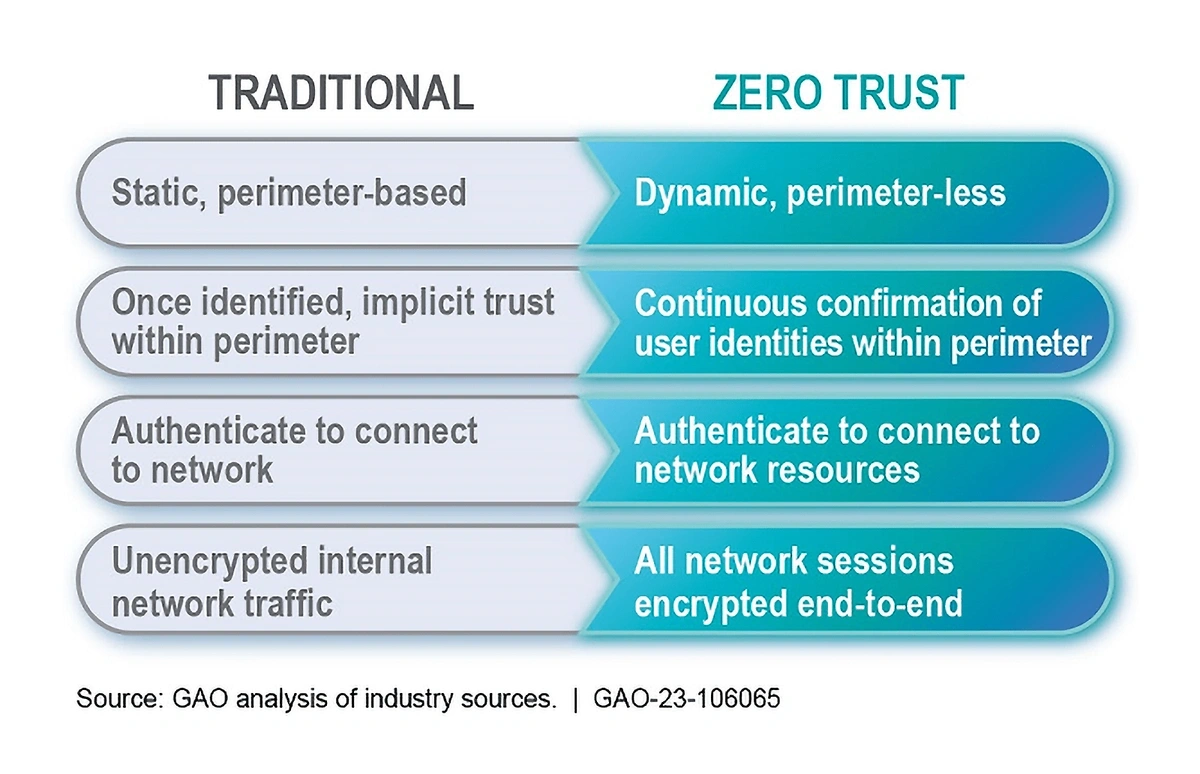

One strategy to combat cyberattacks on data is zero-trust architecture (ZTA).

Search volume for “zero trust architecture” has skyrocketed in recent years.

This approach is different from traditional cybersecurity models in which users can go anywhere in the network once they’re granted initial access.

In ZTA, every interaction between a network and a user is authenticated, authorized, and validated.

ZTA models defend the network from the inside and the outside.
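The difference from a perimeter model can be sketched in a few lines: every single request is checked, with no "already inside the network" shortcut. Everything here is a hypothetical illustration, including the secret key, the permission table, the `device_trusted` flag, and the function names; production systems rely on identity providers and policy engines rather than a hard-coded dictionary.

```python
import hashlib
import hmac

SECRET_KEY = b"demo-secret"  # hypothetical signing key
PERMISSIONS = {"alice": {"reports/q1.csv": {"read"}}}  # hypothetical policy


def sign(user: str) -> str:
    """Issue a token that proves the user's identity (illustrative only)."""
    return hmac.new(SECRET_KEY, user.encode(), hashlib.sha256).hexdigest()


def handle_request(user: str, token: str, resource: str, action: str,
                   device_trusted: bool) -> bool:
    """Return True only if this specific request passes every check."""
    # 1. Authenticate: is the caller really who they claim to be?
    if not hmac.compare_digest(token, sign(user)):
        return False
    # 2. Validate: is the request coming from an acceptable context?
    if not device_trusted:
        return False
    # 3. Authorize: is this user allowed this action on this resource?
    allowed_actions = PERMISSIONS.get(user, {}).get(resource, set())
    return action in allowed_actions


token = sign("alice")
print(handle_request("alice", token, "reports/q1.csv", "read", True))
print(handle_request("alice", token, "reports/q1.csv", "write", True))
```

Because the checks run on each call, a valid token alone does not grant access to other resources or other actions.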

Because ransomware attacks are increasingly focused on backups of data, creating immutable backups is another strategy companies are using to avoid a data crisis.

These are clean copies of data that cannot be altered.

Immutable backups work through a write-once-read-many (WORM) mechanism. As employees upload files, they set an immutability flag that locks the files in place, and the data stays frozen for that specified duration.
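As a rough illustration of the WORM idea, the sketch below models a store that rejects overwrites and deletes until an object's retention period has passed. The `WormStore` class and its methods are hypothetical and kept in memory for simplicity; real products enforce immutability at the file-system or object-storage layer.

```python
import time


class WormStore:
    """Toy write-once-read-many store with a per-object retention lock."""

    def __init__(self, clock=time.time):
        self._clock = clock   # injectable so the lock can be tested
        self._objects = {}    # name -> (data, locked_until timestamp)

    def write(self, name: str, data: bytes, retention_seconds: float) -> None:
        self._ensure_unlocked(name)
        self._objects[name] = (data, self._clock() + retention_seconds)

    def read(self, name: str) -> bytes:
        # Reads are always allowed; only changes are blocked.
        return self._objects[name][0]

    def delete(self, name: str) -> None:
        self._ensure_unlocked(name)
        self._objects.pop(name, None)

    def _ensure_unlocked(self, name: str) -> None:
        entry = self._objects.get(name)
        if entry is not None and self._clock() < entry[1]:
            raise PermissionError(f"{name} is immutable until {entry[1]}")


store = WormStore()
store.write("backup-2022-09.tar", b"clean copy", retention_seconds=3600)
try:
    store.write("backup-2022-09.tar", b"encrypted by ransomware", 3600)
except PermissionError as error:
    print("Overwrite blocked:", error)
```

The attempted overwrite fails, so the clean copy remains available for recovery during the retention window.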

Rubrik’s Zero Trust Data Protection offers a built-in immutable file system that works with hybrid and multi-cloud data platforms.

Big-name companies like Citigroup, The Home Depot, and others protect their data with Rubrik’s system.

Leaders at Rubrik are so confident in their data security measures that they offer a $5 million warranty to customers.

The company’s annual recurring revenue grew 100% from 2021 to 2022.

3. Multiple Uses and Benefits of AI in Data Storage

The use of artificial intelligence for IT operations (AIOps) is growing within data storage systems.

Search volume for “AIOps” shows 127% growth over the past 5 years.

AI can monitor storage infrastructure and applications, as well as diagnose problems, automate actions, and perform predictive analysis.

According to IBM, one-third of companies have already launched AIOps to automate their IT processes.

Because AI has the capability of optimizing storage capacity and streamlining management, companies are looking to this as a major cost-saving solution.

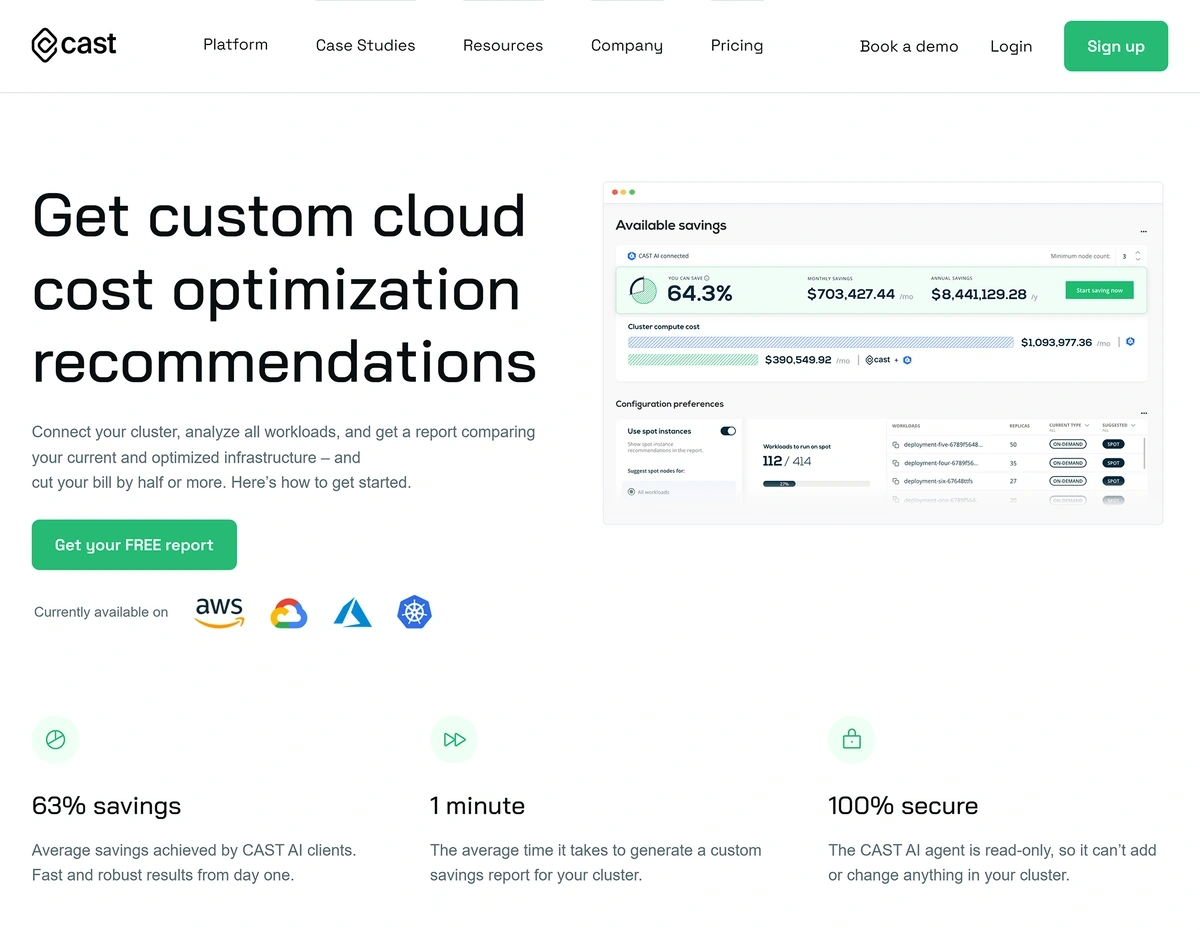

The platform from Cast AI manages enterprise cloud resources automatically, generating an average savings of 63%.

Cast AI identifies workload needs and picks the optimal combination of resources to meet those needs.

Another advantage of using AI in this way is that it frees up staff members who would otherwise spend a considerable amount of time on routine or low-value tasks. Instead, they can devote their time to tasks that create value for the business.

That’s one of the reasons why the state of Utah is extensively deploying AIOps throughout its IT operations platform.

The state’s Department of Technology employed about 1,000 people 15 years ago, but that number is down to 750 in 2022.

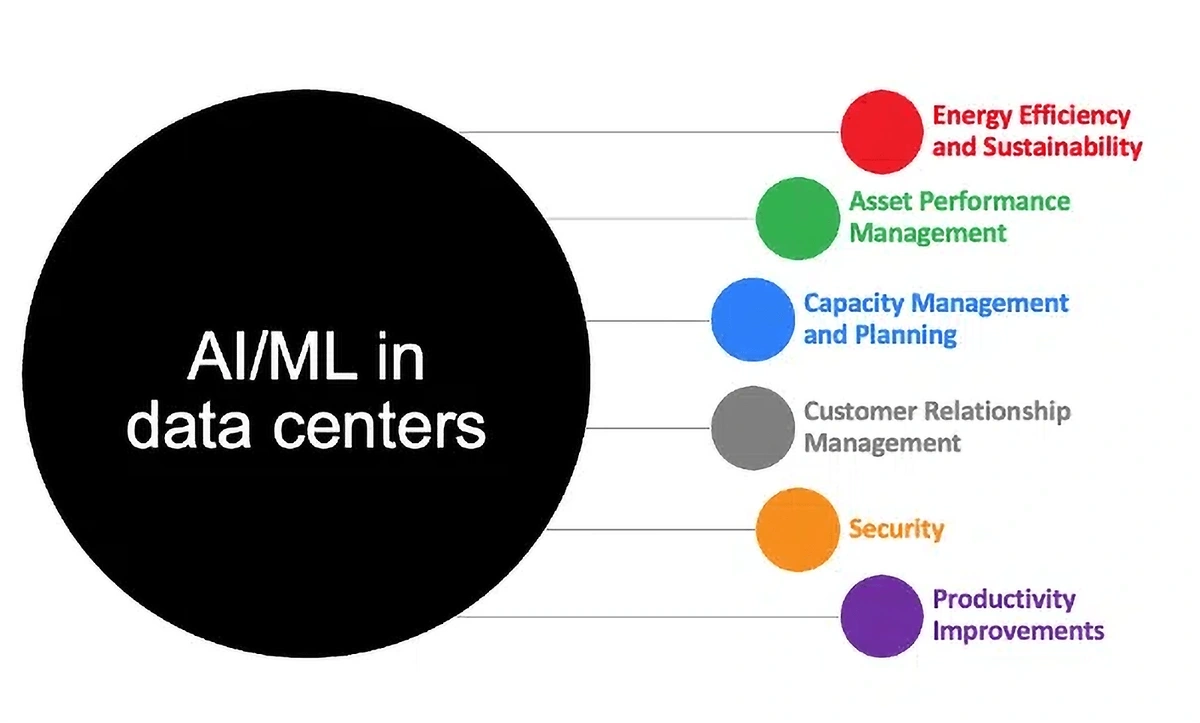

AI is also becoming a viable option for automating data center operations.

By 2025, Gartner predicts that half of all data centers will deploy robots using AI/ML to increase efficiency by up to 30%.

As of now, AI is mostly being used to optimize cooling and predict maintenance issues.

Industry leaders say that the technology will soon be used for security, workload management, and power management.

AI has the potential to dramatically improve data center operations.

4. Reducing Costs of Data Storage

Companies are constantly creating data, leading to the need for more data storage. At the same time, data storage is becoming more expensive.

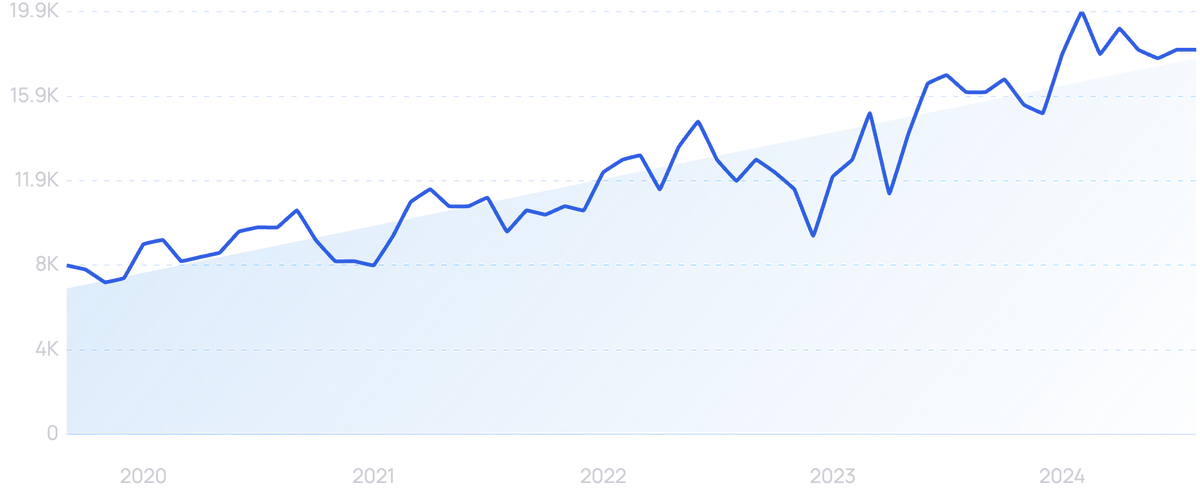

Search interest in “data storage costs” continues to climb, up 205% over 5 years.

In fact, it now costs more than $3,000 to store a single terabyte of data for a year.

Companies with extremely large amounts of data are paying even more.

Estimates show that it can cost more than $1 million to store a petabyte of data for five years.

A 2022 report from Seagate shows that UK enterprises are spending an average of $260,000 annually on storing and managing their own data.

In addition, they found that 90% of IT decision-makers were worried about the rising costs of data storage.

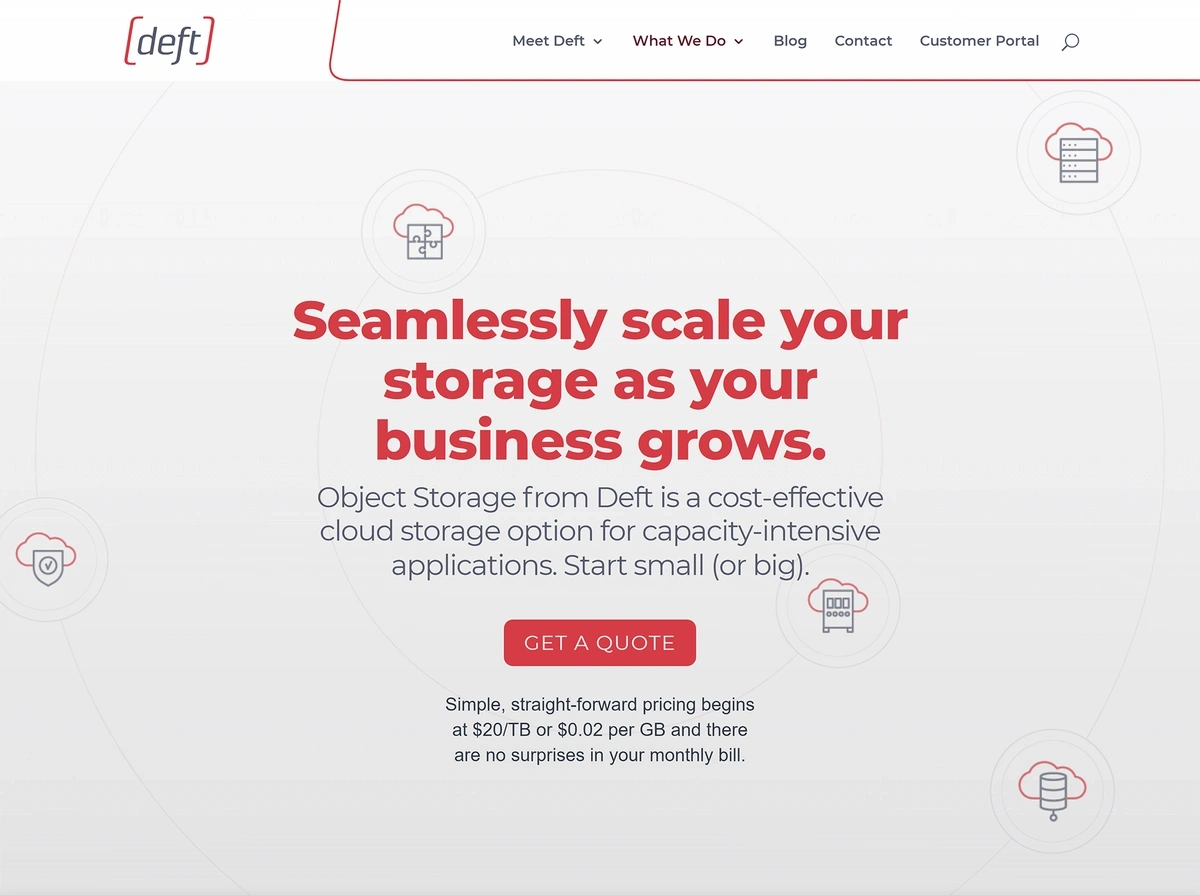

Object storage is one cost-saving option that’s being suggested as the ideal way to store archival data.

It’s scalable, flexible, and can be deployed on-premises or in the cloud.

Plus, it’s cheap.

Deft offers object storage for $20 per terabyte per month.

Deft offers object storage with data immutability.

In one example, a global bank was spending $2 billion each year to operate 600 data repositories.

They reorganized, decommissioned some data, and saved $400 million in annual costs.

Adoption of software-defined storage is also on the rise.

Instead of attaching storage to on-premises hardware, this approach creates a unified pool of storage that can be managed from one interface.

This increases storage utilization and reduces overprovisioning of storage.

Data deduplication is a simple concept: it removes redundant copies of data. But it can result in substantial cost savings.

In one case study, an engineering company needed to back up 10 terabytes of data.

The deduplication process reduced data volume by 90%.
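One common way to implement deduplication is to hash each chunk of data and store a chunk only the first time its hash appears. The sketch below assumes the data has already been split into chunks; the function names and sample data are made up for illustration, and the 90% figure it produces comes from that sample data, not from the case study.

```python
import hashlib


def deduplicate(chunks):
    """Store each distinct chunk once and keep a recipe to rebuild the data."""
    store = {}    # hash -> chunk contents (stored once)
    recipe = []   # ordered list of hashes describing the original data
    for chunk in chunks:
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)
        recipe.append(digest)
    return store, recipe


def restore(store, recipe):
    """Rebuild the original chunk sequence from the store and recipe."""
    return [store[digest] for digest in recipe]


# Illustrative sample: 20 chunks, but only 2 distinct ones.
chunks = [b"AAAA"] * 15 + [b"BBBB"] * 5
store, recipe = deduplicate(chunks)

total_bytes = sum(len(chunk) for chunk in chunks)
stored_bytes = sum(len(chunk) for chunk in store.values())
print(f"Stored {stored_bytes} of {total_bytes} bytes "
      f"({1 - stored_bytes / total_bytes:.0%} reduction)")
```

The recipe still has to be kept alongside the stored chunks, so real-world savings depend on how repetitive the data is and how large the chunks are.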

5. Mitigating the Environmental Impact of Data Storage

While the amount of data is steadily increasing, the energy required to store all of the data is growing exponentially.

Both technology leaders and environmental activists are sounding the alarm.

Data centers create an enormous amount of heat and, in order for all of the tech to continue working properly, substantial cooling must take place.

These massive air conditioning needs account for more than 40% of the electricity used in a data center.

In addition, data centers use a large amount of energy running redundant systems and underutilized systems.

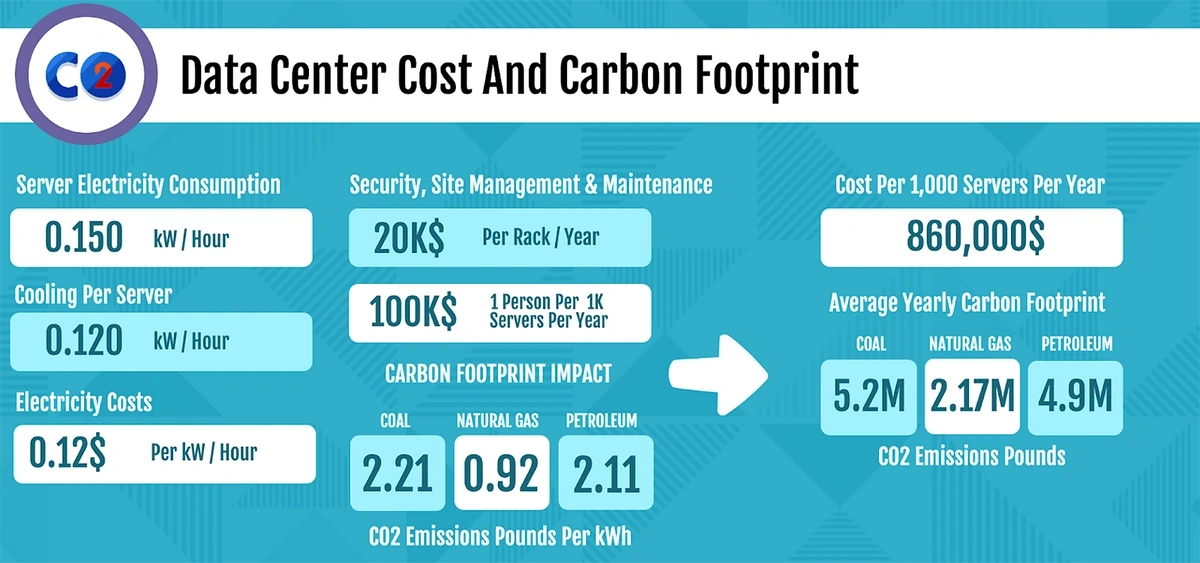

A snapshot of a data center’s carbon footprint shows substantial budgetary costs and environmental impact.

The most recent estimates show that the cloud is responsible for more greenhouse gas emissions than the airline industry.

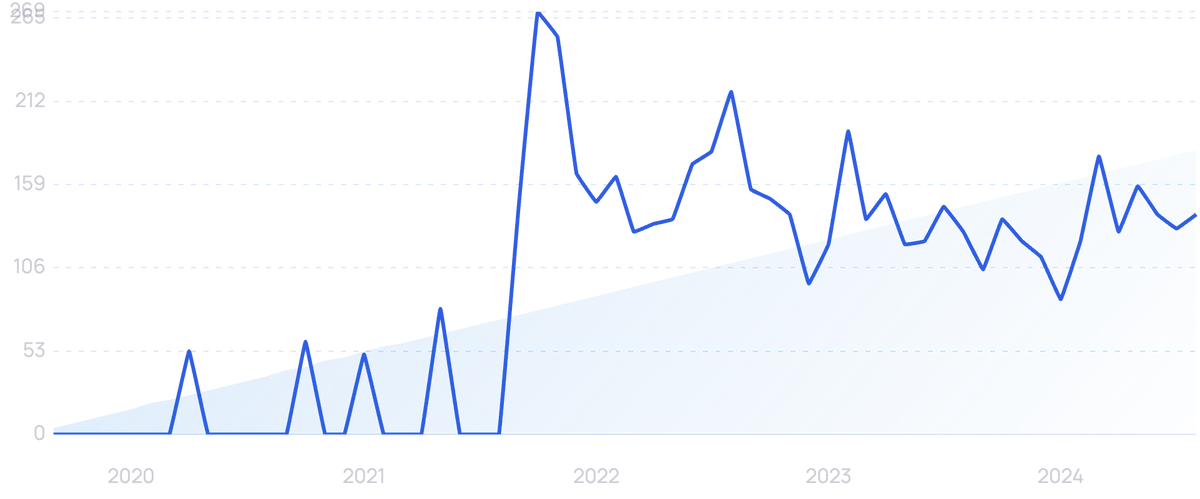

Search volume for “data center emissions” jumped in 2022.

According to an article from MIT, many data centers are switching to water-cooled systems.

However, this comes with its own environmental costs.

Microsoft had promised it would use only 12-20 million liters of water annually at one data center in North Holland, but in total, the company used 84 million liters of water in 2021.

Similar circumstances are appearing at data centers in the United States.

In 2019, Google used 2.3 billion gallons of water for their data centers in three states and, in 2021, they requested 1.46 billion gallons annually for a data center near Dallas.

As much of the western United States deals with a megadrought, data center companies are facing backlash from residents.

Residents in South Carolina have been vocal in their opposition to Google’s water usage.

Green data storage is a trend that’s emerging now.

Data shows the market could reach $261.2 billion by 2027, growing at a CAGR of 19.2%.

A handful of hyperscale companies like Google and Microsoft have pledged to be “water positive” in the near future, meaning the companies will replenish more water than they consume.

Search volume for “water positive” is up nearly 147% over 5 years.

Microsoft’s published goal for being water-positive is 2030. The company is working toward this goal by setting up rainwater collection systems, improving water efficiency, and tapping into solar energy.

Meta recently invested in seven new solar power facilities to provide energy for data centers in Georgia and Tennessee.

A few infrastructure companies are also taking dedicated steps toward green data storage.

For example, Equinix is raising the temperature threshold at its data centers to 80° F.

The company is also exploring renewable energy options like hydrogen fuel. In 2021, they reported 95% renewable energy coverage.

Equinix aims to be climate-neutral by 2030.

Conclusion

That wraps up our list of the top five data storage trends to watch over the next three years.

The sector is developing high-tech storage options and security measures that aim to help businesses maximize the value of their data. AI options and automation tools are emerging as well. For many organizations, these tools are the only way IT leaders will be able to successfully manage the growing data workload.

However, at least two main hurdles remain: budgetary restrictions and environmental concerns. As the volume of data continues to grow, watch for companies to strike a balance between the business value of data and these future costs.


Exploding Topics is owned by Semrush. Our mission is to provide accurate data and expert insights on emerging trends. Unless otherwise noted, this page’s content was written by either an employee or a paid contractor of Semrush Inc.


Written By

Josh is the Co-Founder and CTO of Exploding Topics. Josh has led Exploding Topics product development from the first line of co...