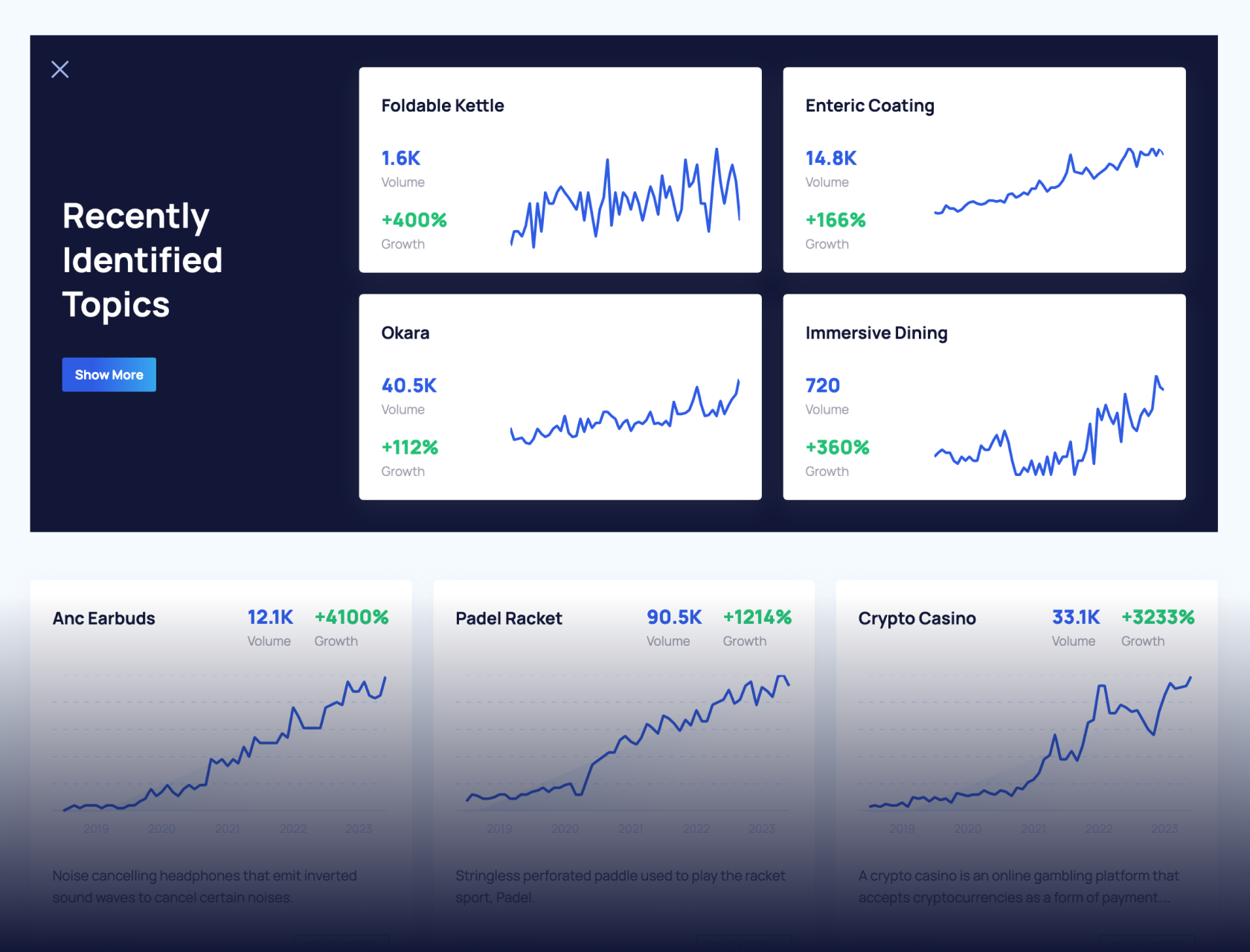

Get Advanced Insights on Any Topic

Discover Trends 12+ Months Before Everyone Else

How We Find Trends Before They Take Off

Exploding Topics’ advanced algorithm monitors millions of unstructured data points to spot trends early on.

Keyword Research

Performance Tracking

Competitor Intelligence

Fix Your Site’s SEO Issues in 30 Seconds

Find technical issues blocking search visibility. Get prioritized, actionable fixes in seconds.

Powered by data from

Best 44 Large Language Models (LLMs) in 2025

Large language models are pre-trained on large datasets and use natural language processing to perform linguistic tasks such as text generation, code completion, paraphrasing, and more.

The initial release of ChatGPT sparked the rapid adoption of generative AI, which has led to large language model innovations and industry growth.

Top LLMs in August 2025

This table lists the leading large language models in 2025.

| LLM Name | Developer | Release Date | Access | Parameters |
| --- | --- | --- | --- | --- |
| GPT-5 | OpenAI | August 7, 2025 | API | Unknown |
| Claude 4.1 | Anthropic | August 5, 2025 | API | Unknown |
| Grok-4 | xAI | July 9, 2025 | API | Unknown |
| Qwen 3 | Alibaba | April 29, 2025 | API, Open Source | 235B |
| GPT-o4-mini | OpenAI | April 16, 2025 | API | Unknown |
| GPT-o3 | OpenAI | April 16, 2025 | API | Unknown |
| GPT-4.1 | OpenAI | April 14, 2025 | API | Unknown |
| Llama 4 Scout | Meta AI | April 5, 2025 | API | 17B |
| Gemini 2.5 Pro | Google DeepMind | Mar 25, 2025 | API | Unknown |
| GPT-4.5 | OpenAI | Feb 27, 2025 | API | Unknown |
| Claude 3.7 Sonnet | Anthropic | Feb 24, 2025 | API | Unknown (est. 200B+) |
| Grok-3 | xAI | Feb 17, 2025 | API | Unknown |
| Gemini 2.0 Flash-Lite | Google DeepMind | Feb 5, 2025 | API | Unknown |
| Gemini 2.0 Pro | Google DeepMind | Feb 5, 2025 | API | Unknown |
| GPT-o3-mini | OpenAI | Jan 31, 2025 | API | Unknown |
| Qwen 2.5-Max | Alibaba | Jan 29, 2025 | API | Unknown |
| DeepSeek R1 | DeepSeek | Jan 20, 2025 | API, Open Source | 671B (37B active) |
| DeepSeek-V3 | DeepSeek | Dec 26, 2024 | API, Open Source | 671B (37B active) |
| Gemini 2.0 Flash | Google DeepMind | Dec 11, 2024 | API | Unknown |
| Sora | OpenAI | Dec 9, 2024 | API | Unknown |
| Nova | Amazon | Dec 3, 2024 | API | Unknown |
| Claude 3.5 Sonnet (New) | Anthropic | Oct 22, 2024 | API | Unknown |
| GPT-o1 | OpenAI | Sept 12, 2024 | API | Unknown (o1-mini est. ~100B) |
| DeepSeek-V2.5 | DeepSeek | Sept 5, 2024 | API, Open Source | Unknown |
| Grok-2 | xAI | Aug 13, 2024 | API | Unknown |
| Mistral Large 2 | Mistral AI | July 24, 2024 | API | 123B |
| Llama 3.1 | Meta AI | July 23, 2024 | Open Source | 405B |
| GPT-4o mini | OpenAI | July 18, 2024 | API | ~8B (est.) |
| Nemotron-4 | Nvidia | June 14, 2024 | Open Source | 340B |
| Claude 3.5 Sonnet | Anthropic | June 20, 2024 | API | ~175-200B (est.) |
| GPT-4o | OpenAI | May 13, 2024 | API | ~1.8T (est.) |
| DeepSeek-V2 | DeepSeek | May 6, 2024 | API, Open Source | Unknown |
| Phi-3 | Microsoft | April 23, 2024 | API, Open Source | Mini 3B, Small 7B, Medium 14B |
| Mixtral 8x22B | Mistral AI | April 10, 2024 | Open Source | 141B (39B active) |
| Jamba | AI21 Labs | Mar 29, 2024 | Open Source | 52B (12B active) |
| DBRX | Databricks' Mosaic ML | Mar 27, 2024 | Open Source | 132B |
| Command R | Cohere | Mar 11, 2024 | API, Open Source | 35B |
| Inflection-2.5 | Inflection AI | Mar 7, 2024 | Proprietary | Unknown (predecessor ~400B) |
| Gemma | Google DeepMind | Feb 21, 2024 | API, Open Source | 2B, 7B |
| Gemini 1.5 | Google DeepMind | Feb 15, 2024 | API | ~1.5T Pro, ~8B Flash (est.) |
| Stable LM 2 | Stability AI | Jan 19, 2024 | Open Source | 1.6B, 12B |
| Grok-1 | xAI | Nov 4, 2023 | API, Open Source | 314 billion |
| Mistral 7B | Mistral AI | Sept 27, 2023 | Open Source | 7.3 billion |
| Falcon 180B | Technology Innovation Institute | Sept 6, 2023 | Open Source | 180 billion |
| XGen-7B | Salesforce | July 3, 2023 | Open Source | 7 billion |
| PaLM 2 | Google | May 10, 2023 | API | 340 billion |
| Alpaca 7B | Stanford CRFM | Mar 13, 2023 | Open Source | 7 billion |
| Pythia | EleutherAI | Mar 13, 2023 | Open Source | 70 million to 12 billion |

Context Windows and Knowledge Boundaries

LLMs with a larger context window size can handle longer inputs and outputs. The context window, therefore, determines how much information an LLM processes before its performance starts to degrade. The knowledge cutoff date determines the end date of the data used in training.

(It's worth noting that context windows are not the be-all-and-end-all of LLMs. Practicing context engineering on models with smaller windows can even produce better results.)
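
As a rough illustration of how a context window constrains input size, the sketch below estimates a token count from a word count and checks it against a few of the window sizes listed in this article. The words-to-tokens ratio is an assumption taken from the "1,000,000 tokens is about 700,000 words" figure quoted for Gemini 1.5 Pro later in this article; real tokenizers vary by model and language. The function names and the `reserved_for_output` parameter are hypothetical.

```python
# Assumption: about 700,000 words per 1,000,000 tokens, as quoted for
# Gemini 1.5 Pro in this article. Real tokenizers differ per model.
WORDS_PER_MILLION_TOKENS = 700_000

# Context window sizes (in tokens) copied from this article's table.
CONTEXT_WINDOWS = {
    "Gemini 2.5 Pro": 1_000_000,
    "Claude 3.7 Sonnet": 200_000,
    "GPT-4o": 128_000,
    "Pythia": 2_048,
}


def estimate_tokens(text: str) -> int:
    """Estimate the token count of `text` from its whitespace-separated words."""
    words = len(text.split())
    # Integer ceiling division avoids floating-point rounding surprises.
    return (words * 1_000_000 + WORDS_PER_MILLION_TOKENS - 1) // WORDS_PER_MILLION_TOKENS


def fits(text: str, model: str, reserved_for_output: int = 1_000) -> bool:
    """Return True if the estimated prompt size leaves room for the reply."""
    budget = CONTEXT_WINDOWS[model] - reserved_for_output
    return estimate_tokens(text) <= budget


if __name__ == "__main__":
    document = "word " * 7_000  # a 7,000-word stand-in document
    for model in CONTEXT_WINDOWS:
        print(model, fits(document, model))
```

Reserving part of the window for the model's reply matters because inputs and outputs share the same token budget.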

| LLM Name | Context Window (Tokens) | Knowledge Cutoff Date |
| --- | --- | --- |
| Gemini 2.0 Pro | 2,000,000 | August 2024 |
| Gemini 2.5 Pro | 1,000,000 (2,000,000 coming soon) | January 2025 |
| GPT-4.1 | 1,047,576 | May 2024 |
| Gemini 2.0 Flash | 1,000,000 | August 2024 |
| Gemini 1.5 Pro | 2,000,000 | November 2023 |
| GPT-o4-mini | 200,000 | May 2024 |
| GPT-o3 | 200,000 | May 2024 |
| Claude 3.7 Sonnet | 200,000 | October 2024 |
| Claude 3.5 Sonnet (New) | 200,000 | April 2024 |
| Claude 3.5 Sonnet | 200,000 | April 2024 |
| DeepSeek R1 | 131,072 | July 2024 |
| Qwen 3 | 128,000 | Unknown (Mid-2024) |
| Grok-3 | 128,000 | N/A - Real time |
| Gemini 2.0 Flash-Lite | 128,000 | August 2024 |
| Grok-2 | 128,000 | July 2024 |
| DeepSeek-V3 | 128,000 | July 2024 |
| Llama 3.1 | 128,000 | December 2023 |
| GPT-4.5 | 128,000 | October 2023 |
| GPT-o3-mini | 200,000 | October 2023 |
| GPT-o1 | 200,000 | October 2023 |
| GPT-4o mini | 128,000 | October 2023 |
| GPT-4o | 128,000 | October 2023 |
| Phi-3 | 128,000 | October 2023 |
| DeepSeek-V2.5 | 32,768 | July 2024 |
| Mistral Large 2 | 128,000 | Unknown (pre-Jul 24) |
| Mixtral 8x22B | 65,536 | Unknown (pre-Apr 24) |
| DBRX | 32,768 | December 2023 |
| DeepSeek-V2 | 32,768 | November 2023 |
| Mistral 7B | 32,768 | Unknown (pre-Sept 23) |
| Nemotron-4 | 32,768 | June 2023 |
| Inflection-2.5 | 32,768 | Mid-2023 |
| Nova | 32,000 | Unknown (pre-Dec 24) |
| Qwen 2.5-Max | 32,000 | October 2023 |
| Gemini 1.0 Pro | 32,000 | February 2023 |
| Command R | 128,000 | November 2023 |
| Jamba | 256,000 | Mid-2023 |
| Gemma | 8,192 | Mid-2023 |
| Grok-1 | 8,192 | Mid-2023 |
| PaLM 2 | 8,192 | February 2023 |
| Falcon 180B | 8,192 | Late 2022 |
| Stable LM 2 | 4,096 | December 2023 |
| XGen-7B | 4,096 | Mid-2023 |
| Alpaca 7B | 2,048 | June 2022 |
| Pythia | 2,048 | Mid-2022 |

As adoption continues to grow, so does the LLM industry.

- The global large language model market is projected to grow from $6.5 billion in 2024 to $140.8 billion by 2033

- 92% of Fortune 500 firms have started using generative AI in their workflows

- Generative AI is disrupting the SEO industry and changing the way we find information online

- LLM search will drive 75% of search revenue by 2028
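
To put the market projection in the first bullet in perspective, the sketch below derives the compound annual growth rate implied by the two endpoints ($6.5 billion in 2024 and $140.8 billion by 2033). Smooth year-over-year compounding is an assumption; the projection itself only gives the start and end values.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints quoted in the list above (billions of US dollars).
rate = cagr(start_value=6.5, end_value=140.8, years=2033 - 2024)
print(f"Implied annual growth: {rate:.1%}")
```

Under that assumption, the projection implies the market growing by roughly 40% per year for nine consecutive years.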

Here's a deeper dive on some of the most important models over the last 3 years.

1. GPT-5

Developer: OpenAI

Release date: August 2025

Number of Parameters: Unknown

Context Window (Tokens): 400,000

Knowledge Cutoff Date: October 2024

What is it? GPT-5 is OpenAI's newest flagship model. In benchmarking, it outperforms GPT-4 in most tests.

GPT-5 builds on the reasoning-focused "o" lineup from OpenAI, meaning it is designed to "think" more deliberately before responding. It combines multimodal and reasoning capabilities, enabling more accurate and context-aware interactions. OpenAI has positioned GPT-5 as a major step forward, offering improved performance in tasks requiring logic, planning, and real-time understanding.

Pro users began gaining access to GPT-5 in mid-August 2025. Access will expand to Team, Enterprise, and Education users in early September.

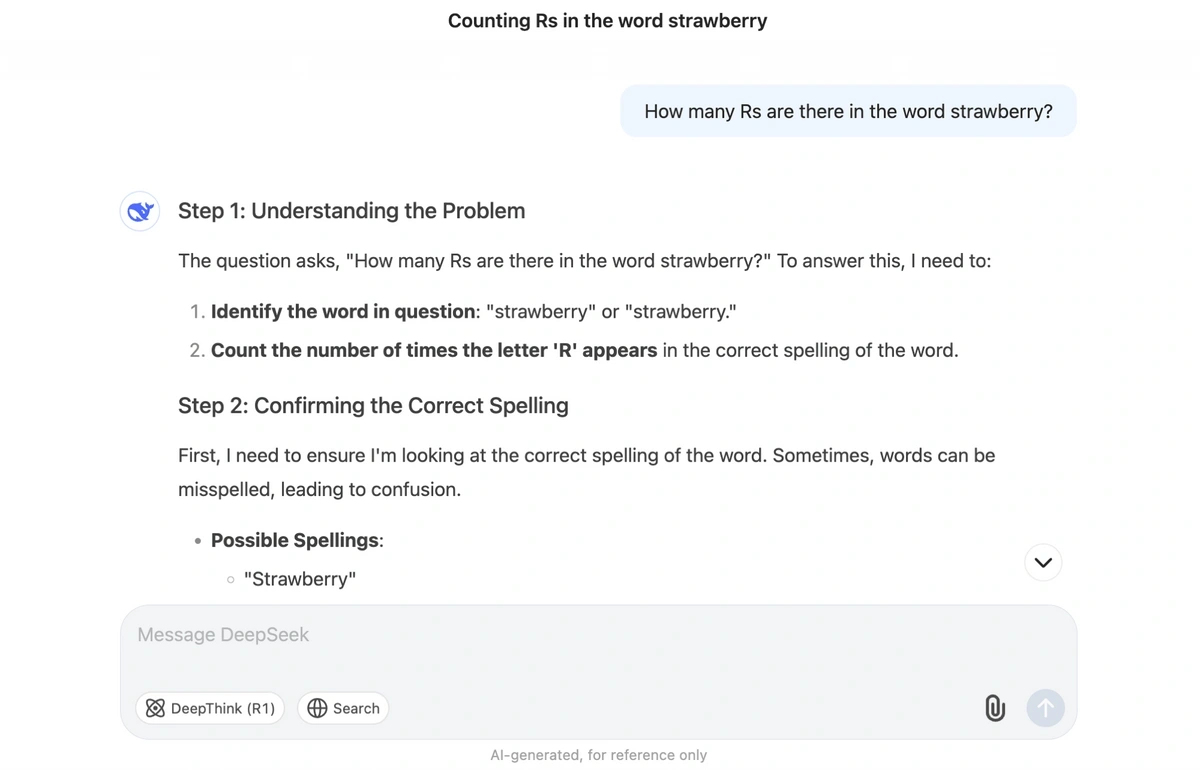

2. DeepSeek R1

Developer: DeepSeek

Release date: January 2025

Number of Parameters: 671B total, 37B active

Context Window (Tokens): 131,072

Knowledge Cutoff Date: July 2024

What is it? DeepSeek R1 is a reasoning model that excels in math and coding. It beats or matches OpenAI o1 in several benchmarks, including MATH-500 and AIME 2024.

(We've done a thorough DeepSeek vs ChatGPT comparison, where we put the R1 model to the test.)

On its release, DeepSeek immediately hit headlines due to the low cost of training compared to most major LLMs. Traffic to the DeepSeek website exploded in early 2025.

DeepSeek R1 is free to use and open-source. It's accessible via the API, the DeepSeek website, and mobile apps.

According to Semrush, DeepSeek gets over 500 million visits per month from more than 84 million unique visitors.

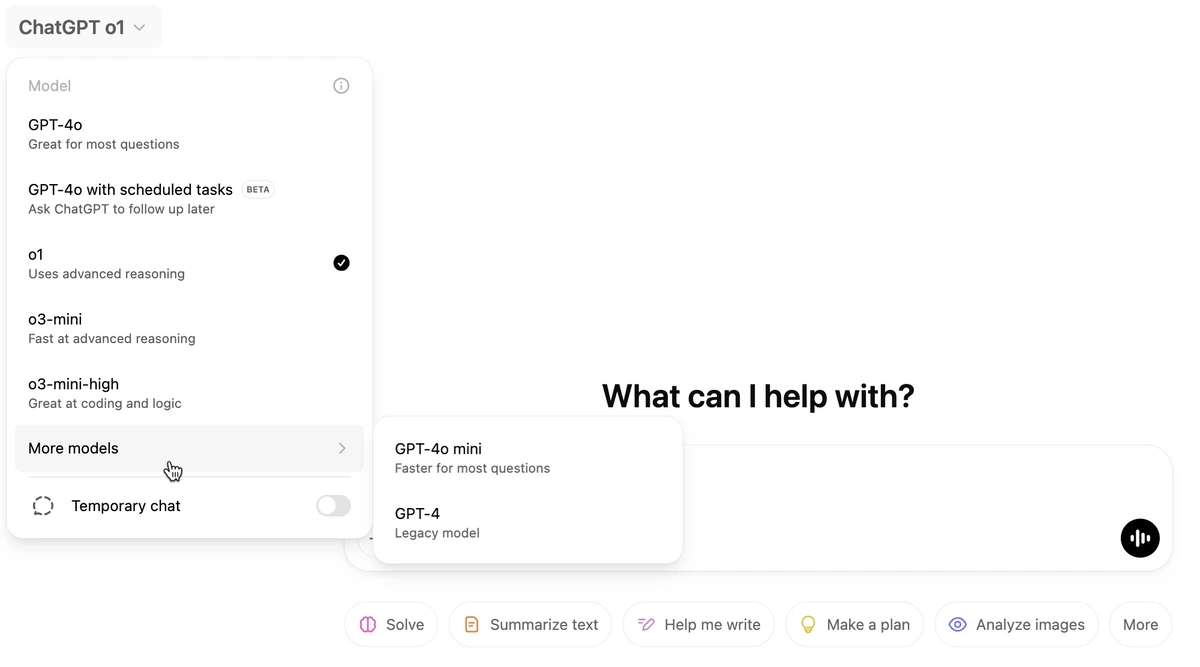

3. GPT-o3-mini

Developer: OpenAI

Release date: January 31, 2025

Number of Parameters: Unknown (estimated ~100B for o1-mini, likely higher for o3-mini)

Context Window (Tokens): 200,000

Knowledge Cutoff Date: October 2023

What is it? GPT-o3-mini is a small, fast model with reasoning capabilities. It follows GPT-o1, the first model in the "o" series from OpenAI, and is a smaller version of the full o3 model.

Compared to o1, o3-mini is cost-efficient but does not support vision. Developers can choose from three levels of "effort", and similarly, users on the web can switch to o3-mini-high. OpenAI says o3-mini-high is more intelligent, but slower to reason.

OpenAI has since released GPT-4.5 (February 27, 2025), the full version of o3 (April 16, 2025), and GPT-5 (August 7, 2025). The ChatGPT website continues to be one of the world's most popular sites, receiving more than 75 million visitors from organic search in February 2025.

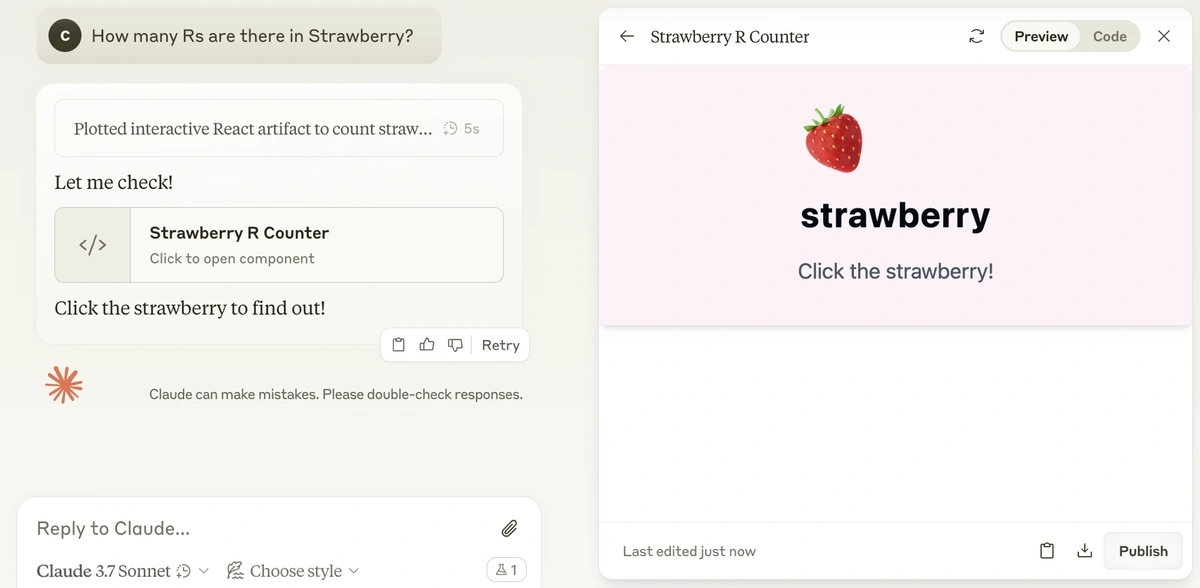

4. Claude 3.7 Sonnet

Developer: Anthropic

Release date: February 24, 2025

Number of Parameters: Unknown (estimated to be 200B+)

Context Window (Tokens): 200,000

Knowledge Cutoff Date: October 2024

What is it? Claude 3.7 Sonnet is the successor to Claude 3.5 Sonnet "New". At launch, Anthropic described it as its most intelligent model to date.

Claude remains a popular model for creative tasks and coding. On the web, it receives 3M+ visits from organic search each month.

The company describes 3.7 Sonnet as a hybrid reasoning model, meaning that it functions as a regular LLM, but is also capable of "thinking". In this extended thinking mode, 3.7 Sonnet publishes its "thought process" before providing an answer. The amount of "thinking" time is also user-customizable.

As part of the release, Claude's knowledge cutoff date was extended to October 2024. The context window remains unchanged at 200,000 tokens.

5. Grok-4

Developer: xAI

Release date: July 9, 2025

Number of Parameters: Unknown (Grok-1: 314 billion)

Context Window (Tokens): 256,000

Knowledge Cutoff Date: None (uses real-time information)

What is it? Grok-4 is the newest flagship model from xAI, building on the capabilities of Grok-3 with major improvements in reasoning, speed, and real-time awareness. It’s fully integrated into X (formerly Twitter) for Premium+ subscribers.

Grok now serves 42.7 million active users, with daily visits averaging 6.85 million since Grok-4 became available.

6. Mistral Large 2

Developer: Mistral AI

Release date: July 24, 2024

Number of Parameters: 123 billion

Context Window (Tokens): 128,000

Knowledge Cutoff Date: Unknown

What is it? Mistral Large 2 is a major upgrade on its predecessor, Mistral Large. It's designed to bring Mistral up to speed with the latest competing LLMs from Meta and OpenAI. It was also fine-tuned to reduce the number of hallucinations. Large 2 is multilingual and has a 128k context window.

Benchmarking suggests that Mistral Large 2 outperforms Llama 3.1 405B in Python, C++, Java, PHP, and C# coding tasks.

7. PaLM 2

Developer: Google

Release date: May 10, 2023

Number of Parameters: 340 billion

Context Window (Tokens): 8,192

Knowledge Cutoff Date: February 2023

What is it? PaLM 2 is an advanced large language model developed by Google. As the successor to the original Pathways Language Model (PaLM), it was trained on 3.6 trillion tokens (up from 780 billion) and has 340 billion parameters (down from 540 billion). PaLM 2 was originally used to power Google's first generative AI chatbot, Bard (rebranded to Gemini in February 2024).

8. Falcon 180B

Developer: Technology Innovation Institute (TII)

Release date: September 6, 2023

Number of Parameters: 180 billion

Context Window (Tokens): 8,192

Knowledge Cutoff Date: Late 2022

What is it? Developed and funded by the Technology Innovation Institute, Falcon 180B is an upgraded version of the earlier Falcon 40B LLM. It has 180 billion parameters, 4.5 times as many as Falcon 40B's 40 billion.

In addition to Falcon 40B, it also outperforms other large language models like GPT-3.5 and LLaMA 2 on tasks such as reasoning, question answering, and coding. In February 2024, the UAE-based Technology Innovation Institute (TII) committed $300 million in funding to the Falcon Foundation.

9. Stable LM 2

Developer: Stability AI

Release date: January 19, 2024

Number of Parameters: 1.6 billion and 12 billion

Context Window (Tokens): 4,096

Knowledge Cutoff Date: December 2023

What is it? Stability AI, the creators of the Stable Diffusion text-to-image model, are the developers behind Stable LM 2. This series of large language models includes Stable LM 2 12B (12 billion parameters) and Stable LM 2 1.6B (1.6 billion parameters). Released in April 2024, the larger 12B model outperforms models like LLaMA 2 70B on key benchmarks despite being much smaller.

10. Gemini 1.5 Pro

Developer: Google DeepMind

Release date: February 15, 2024

Number of Parameters: Unknown

Context Window (Tokens): 2,000,000

Knowledge Cutoff Date: November 2023

What is it? Gemini 1.5 offered a significant upgrade over its predecessor, Gemini 1.0. Gemini 1.5 Pro provided a one million-token context window on release (1 hour of video, 700,000 words, or 30,000 lines of code), the largest available at the time. This upgrade is 35 times larger than Gemini 1.0 Pro and surpasses the previous largest record of 200,000 tokens set by Anthropic’s Claude 2.1.

In June 2024, Gemini 1.5 Pro's context window was increased to 2,000,000 tokens.

11. Llama 4 Scout

Developer: Meta AI

Release date: April 5, 2025

Number of Parameters: 17 billion

Context Window (Tokens): 10,000,000

Knowledge Cutoff Date: August 2024

What is it? Llama 4 Scout is the latest release in Meta’s open-source model lineup, building on the success of Llama 3 and 3.1. While earlier versions like the 70B and 8B models outperformed competitors such as Mistral 7B and Google’s Gemma 7B on benchmarks like MMLU, reasoning, coding, and math, Llama 4 Scout takes things significantly further. It features 17 billion active parameters and supports an extended context window of up to 10,000,000 tokens.

Llama 4 Scout delivers greater factual accuracy and broader general knowledge. Llama 3.1, at 405 billion parameters, remains Meta's largest model by parameter count on this list.

Earlier models, including Llama 2, remain available in 7B, 13B, and 70B variants for developers needing lighter-weight options.

12. Mixtral 8x22B

Developer: Mistral AI

Release date: April 10, 2024

Number of Parameters: 141 billion

Context Window (Tokens): 65,536

Knowledge Cutoff Date: Unknown

What is it? Mixtral 8x22B is a sparse Mixture-of-Experts (SMoE) model. It has 141 billion total parameters but uses only 39 billion active parameters at a time, which improves the model’s performance-to-cost ratio.
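
To make the total-versus-active distinction concrete, the sketch below computes what share of each mixture-of-experts model's parameters is active, using only the figures listed in this article. The dictionary and function names are hypothetical, and the ratio is a rough proxy for per-token compute rather than a measured cost.

```python
# (total, active) parameter counts in billions, as listed in this article.
MOE_MODELS = {
    "DeepSeek R1": (671, 37),
    "Mixtral 8x22B": (141, 39),
    "DBRX": (132, 36),
    "Jamba": (52, 12),
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of a model's parameters that are active for a given input."""
    return active_b / total_b


if __name__ == "__main__":
    for name, (total, active) in MOE_MODELS.items():
        share = active_fraction(total, active)
        print(f"{name}: {active}B of {total}B active ({share:.1%})")
```

A lower share means the model stores far more parameters than it uses for any single input, which is how sparse models keep inference cost closer to that of a much smaller dense model.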

13. Inflection-2.5

Developer: Inflection AI

Release date: March 7, 2024

Number of Parameters: Unknown

Context Window (Tokens): 32,768

Knowledge Cutoff Date: Mid 2023

What is it? Inflection-2.5 was developed by Inflection AI to power its conversational AI assistant, Pi. Significant upgrades have been made, as the model currently achieves over 94% of GPT-4’s average performance while only having 40% of the training FLOPs. In March 2024, the Microsoft-backed startup reached 1+ million daily active users on Pi.

In October 2024, Inflection released the Inflection 3.0 family of models designed for enterprise use.

14. Jamba

Developer: AI21 Labs

Release date: March 29, 2024

Number of Parameters: 52 billion

Context Window (Tokens): 256,000

Knowledge Cutoff Date: Mid 2023

What is it? AI21 Labs created Jamba, the world's first production-grade Mamba-style large language model. It integrates SSM technology with elements of a traditional transformer model to create a hybrid architecture. The model is efficient and highly scalable, with a context window of 256K and deployment support of 140K context on a single GPU.

15. Command R

Developer: Cohere

Release date: March 11, 2024

Number of Parameters: 35 billion

Context Window (Tokens): 128,000

Knowledge Cutoff Date: November 2023

What is it? Command R is a series of scalable LLMs from Cohere that support ten languages and 128,000-token context length (around 100 pages of text). This model primarily excels at retrieval-augmented generation, code-related tasks like explanations or rewrites, and reasoning. In April 2024, Command R+ was released to support larger workloads and provide real-world enterprise support.

16. Gemma

Developer: Google DeepMind

Release date: February 21, 2024

Number of Parameters: 2 billion and 7 billion

Context Window (Tokens): 8,192

Knowledge Cutoff Date: Mid 2023

What is it? Gemma is a series of lightweight open-source language models developed and released by Google DeepMind. The Gemma models are built with similar tech to the Gemini models, but Gemma is limited to text inputs and outputs only. The models have a context window of 8,000 tokens and are available in 2 billion and 7 billion parameter sizes.

17. Phi-3

Developer: Microsoft

Release date: April 23, 2024

Number of Parameters: 3.8 billion

Context Window (Tokens): 8,000-128,000

Knowledge Cutoff Date: Mid 2023

What is it? Classified as a small language model (SLM), Phi-3-mini has 3.8 billion parameters. Despite the smaller size, it was trained on 3.3 trillion tokens of data to compete with Mixtral 8x7B and GPT-3.5 on the MT-bench and MMLU benchmarks.

Phi-3-mini was the first model in the family to be released. Microsoft has since followed it with the larger Phi-3-small (7B) and Phi-3-medium (14B) models.

18. XGen-7B

Developer: Salesforce

Release date: July 3, 2023

Number of Parameters: 7 billion

Context Window (Tokens): 4,096

Knowledge Cutoff Date: Mid 2023

What is it? XGen-7B is a large language model from Salesforce with 7 billion parameters and a context window of up to 8k tokens. The model was trained on 1.37 trillion tokens from various sources, such as RedPajama, Wikipedia, and the Starcoder dataset.

Salesforce has released two open-source versions, a 4,000 and 8,000 token context window base, hosted under an Apache 2.0 license.

19. DBRX

Developer: Databricks' Mosaic ML

Release date: March 27, 2024

Number of Parameters: 132 billion

Context Window (Tokens): 32,768

Knowledge Cutoff Date: December 2023

What is it? DBRX is an open-source LLM built by Databricks and the Mosaic ML research team. The mixture-of-experts architecture has 36 billion (of 132 billion total) active parameters on an input. DBRX has 16 experts and chooses 4 of them during inference, providing 65 times more expert combinations compared to similar models like Mixtral and Grok-1.
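
The "65 times" figure can be reproduced by counting how many distinct sets of experts each design can activate. The sketch below assumes that the comparison models (Mixtral and Grok-1) use 8 experts and activate 2 per input; that configuration is not stated in this article, so treat it as an assumption.

```python
from math import comb

# DBRX: 16 experts, 4 chosen per input (figures from this article).
dbrx_combinations = comb(16, 4)

# Assumption: Mixtral and Grok-1 use 8 experts and choose 2 per input.
baseline_combinations = comb(8, 2)

ratio = dbrx_combinations / baseline_combinations
print(dbrx_combinations, baseline_combinations, ratio)
```

Choosing 4 of 16 experts yields 1,820 possible combinations, against 28 for choosing 2 of 8, which gives the 65-fold difference quoted above.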

20. Pythia

Developer: EleutherAI

Release date: February 13, 2023

Number of Parameters: 70 million to 12 billion

Context Window (Tokens): 2,048

Knowledge Cutoff Date: Mid 2022

What is it? Pythia is a series of 16 large language models developed and released by EleutherAI, a non-profit AI research lab. There are eight different model sizes: 70M, 160M, 410M, 1B, 1.4B, 2.8B, 6.9B, and 12B. Because of Pythia's open-source license, these LLMs serve as a base model for fine-tuned, instruction-following LLMs like Dolly 2.0 by Databricks.

21. Alpaca 7B

Developer: Stanford CRFM

Release date: March 13, 2023

Number of Parameters: 7 billion

Context Window (Tokens): 2,048

Knowledge Cutoff Date: June 2022

What is it? Alpaca is a 7 billion-parameter language model developed by a Stanford research team and fine-tuned from Meta's LLaMA 7B model. Despite being much smaller, Alpaca performs similarly to text-davinci-003 (GPT-3.5). However, Alpaca 7B is available for research purposes only, and no commercial licenses are available.

22. Nemotron-4 340B

Developer: NVIDIA

Release date: June 14, 2024

Number of Parameters: 340 billion

Context Window (Tokens): 32,768

Knowledge Cutoff Date: June 2023

What is it? Nemotron-4 340B is a family of large language models for synthetic data generation and AI model training. These models help businesses create new LLMs without larger and more expensive datasets. Instead, Nemotron-4 can create high-quality synthetic data to train other AI models, which reduces the need for extensive human-annotated data.

The model family includes Nemotron-4-340B-Base (foundation model), Nemotron-4-340B-Instruct (fine-tuned chatbot), and Nemotron-4-340B-Reward (quality assessment and preference ranking). Due to the 9 trillion tokens used in training, which includes English, multilingual, and coding language data, Nemotron-4 matches GPT-4's high-quality synthetic data generation capabilities.

Conclusion

New breakthroughs and innovations are emerging at an unprecedented pace.

From compact models like Phi-3 and Alpaca 7B to cutting-edge architectures like Jamba and DBRX, the field of LLMs is pushing the boundaries of what's possible in natural language processing (NLP).

We will keep this list regularly updated with new models. If you liked learning about these LLMs, check out our lists of generative AI startups and AI startups.

Exploding Topics is owned by Semrush. Our mission is to provide accurate data and expert insights on emerging trends. Unless otherwise noted, this page’s content was written by either an employee or a paid contractor of Semrush Inc.

Written By

Anthony is a Content Writer at Exploding Topics. Before joining the team, Anthony spent over four years managing content strat... Read more