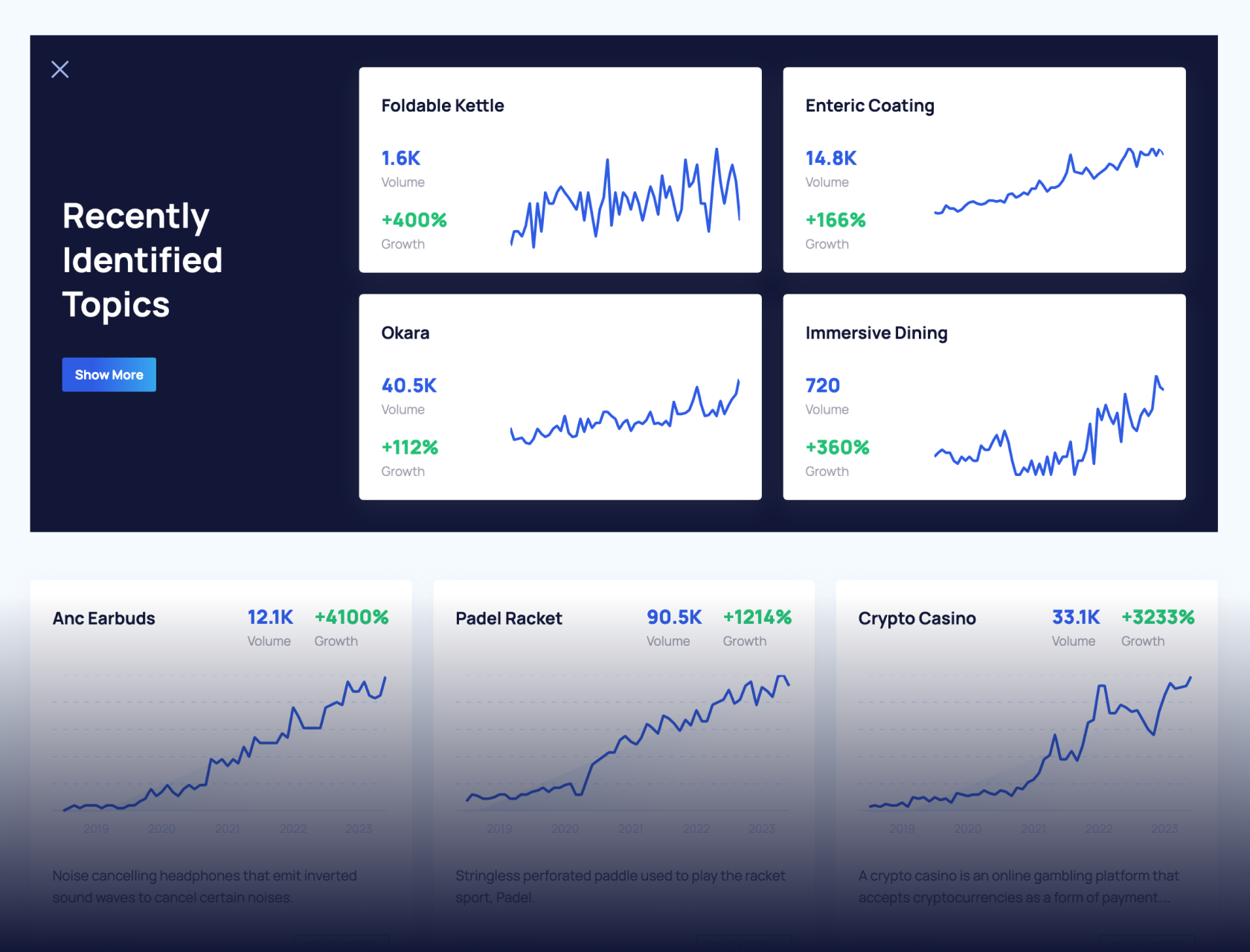

Get Advanced Insights on Any Topic

Discover Trends 12+ Months Before Everyone Else

How We Find Trends Before They Take Off

Exploding Topics’ advanced algorithm monitors millions of unstructured data points to spot trends early on.

Features

Keyword Research

Performance Tracking

Competitor Intelligence

Fix Your Site’s SEO Issues in 30 Seconds

Find technical issues blocking search visibility. Get prioritized, actionable fixes in seconds.

Powered by data from

Top 5 Data Privacy Trends (2025 & 2026)

Americans are worried about data privacy.

Many are concerned about how the government is using their personal data while others are worried about how AI will affect the privacy of their data.

It’s no surprise then that 72% of Americans support increased privacy regulations. But that’s just one factor influencing today’s data privacy landscape.

We’ll cover specific trends for users and businesses, as well as the latest privacy-enhancing tech solutions.

1. Lack of Privacy Worries Social Media Users

Social media platforms are notorious for collecting data on users.

This data feeds algorithms and enables brands to target specific users in their advertising.

However, the sheer amount of data collected by social media platforms is staggering.

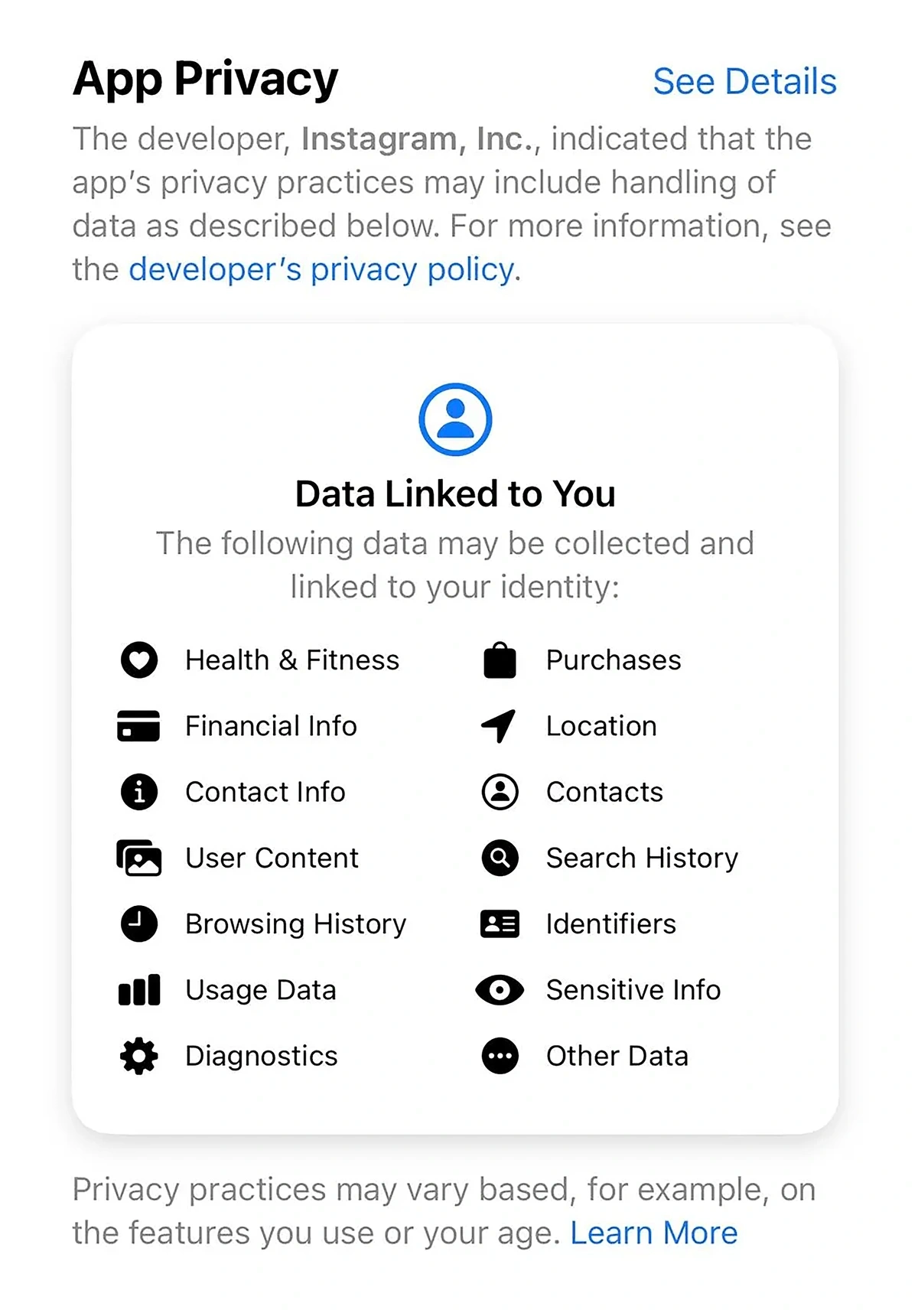

Reports show Meta platforms (Instagram, Threads, and Facebook) track and collect 86% of a user’s personal data.

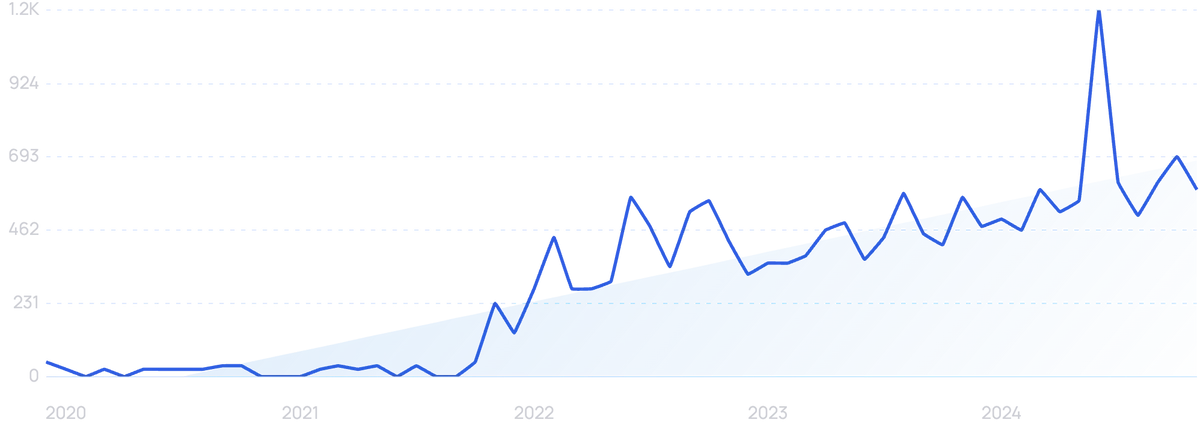

Search volume for “Meta privacy” grew rapidly in 2022 and 2023.

Lack of privacy on Threads, especially, was noted when the platform debuted in the summer of 2023.

TechCrunch called it a “privacy nightmare”.

The app collects data on everything from search history to financial data to the user’s precise location.

The privacy concerns were so serious that the app was not allowed to launch in the EU (which has stricter privacy laws than the US) until December 2023.

And some social media platforms even collect data on non-users.

One cybersecurity report noted that TikTok’s pixels are present on a wide range of websites and collect a host of data on non-users and users who have deleted the social app.

Americans are becoming increasingly unhappy about the lack of privacy on social networks.

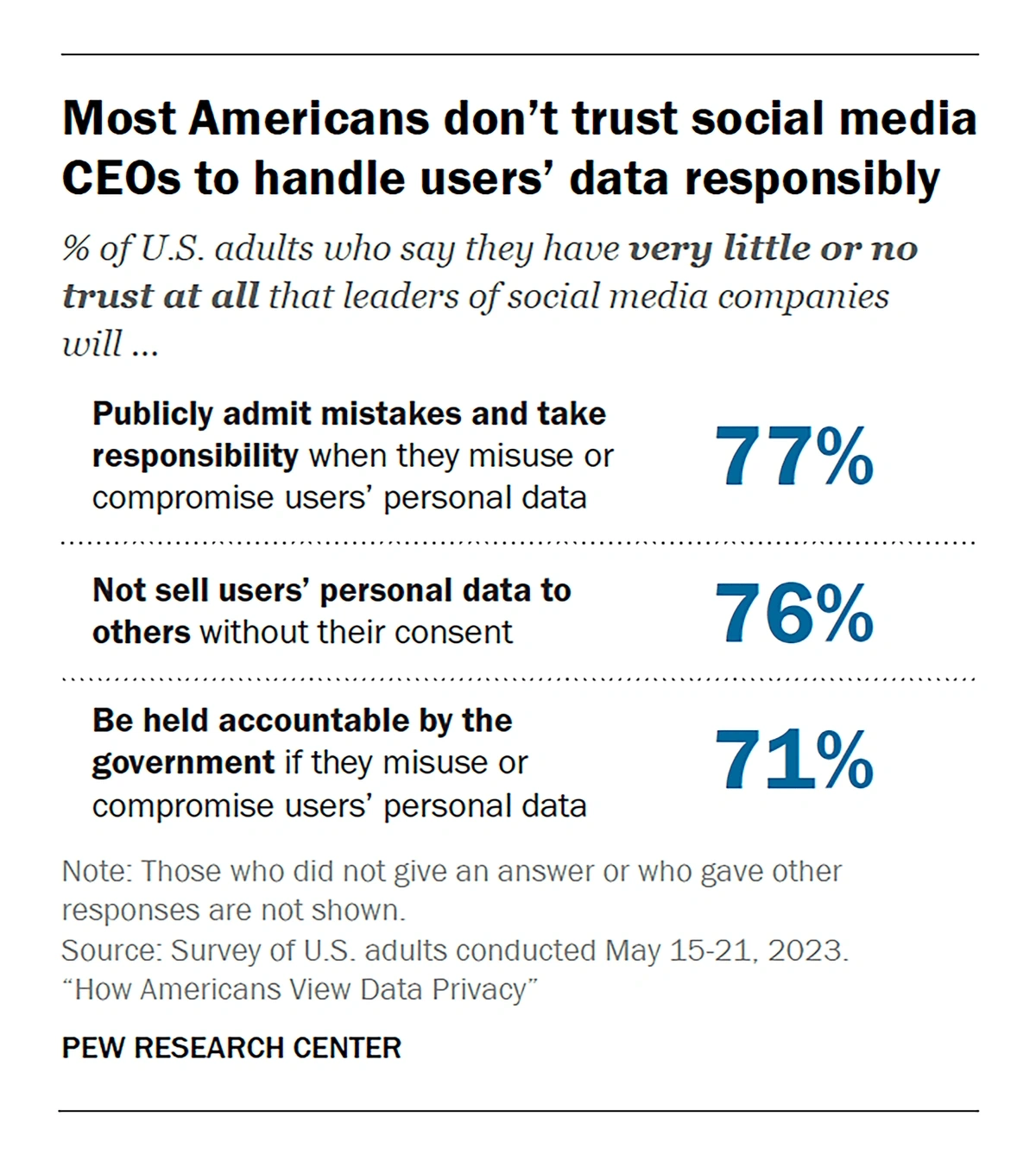

A survey from the Pew Research Center shows that 76% of Americans fear that leaders of social media companies will sell their personal data to others without their consent.

More than 70% of Americans are worried about their personal data being in the hands of social networking companies.

This is especially true when it comes to children.

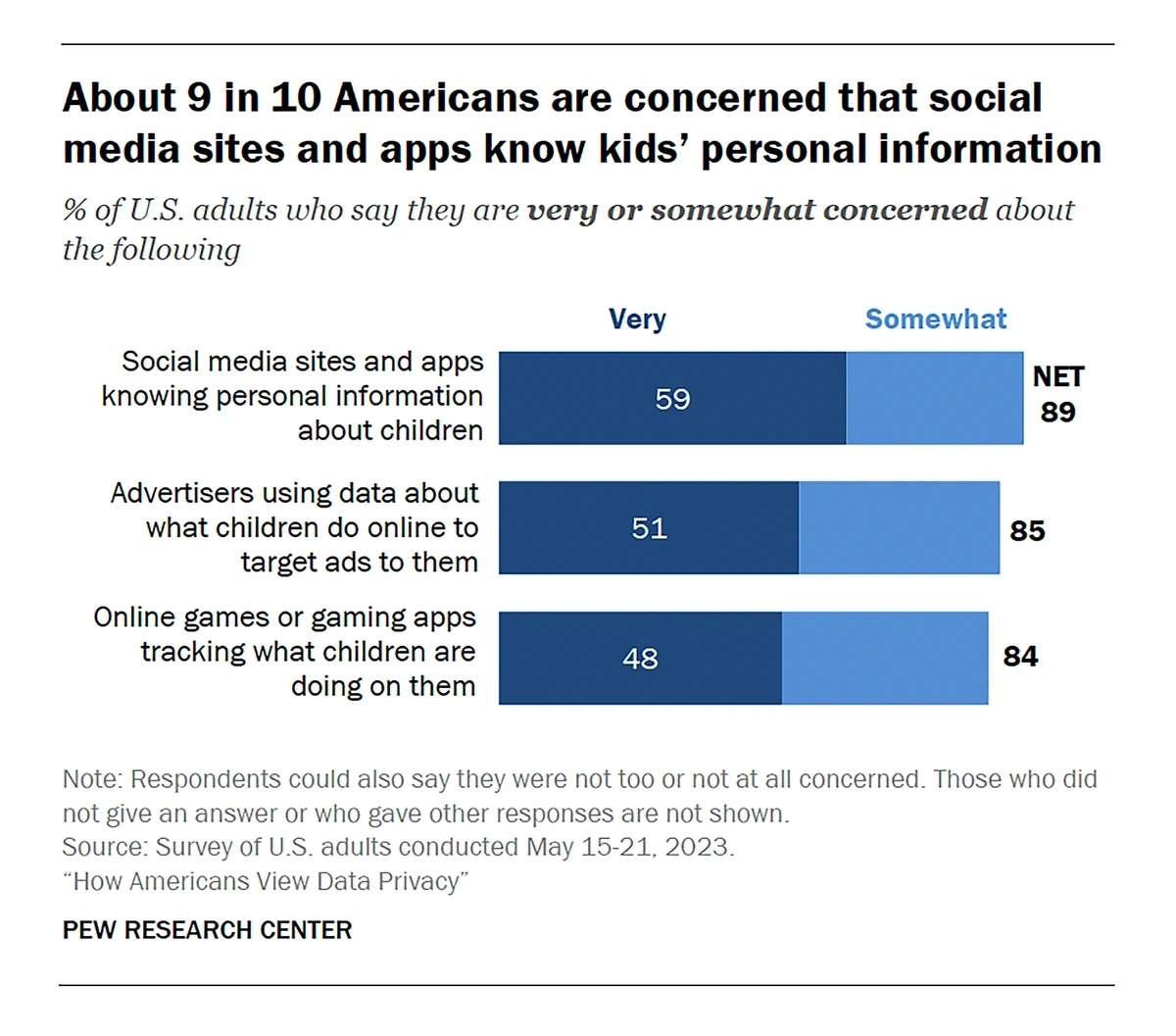

The Pew Research Center reports that 89% of Americans are concerned about social media platforms collecting data on children.

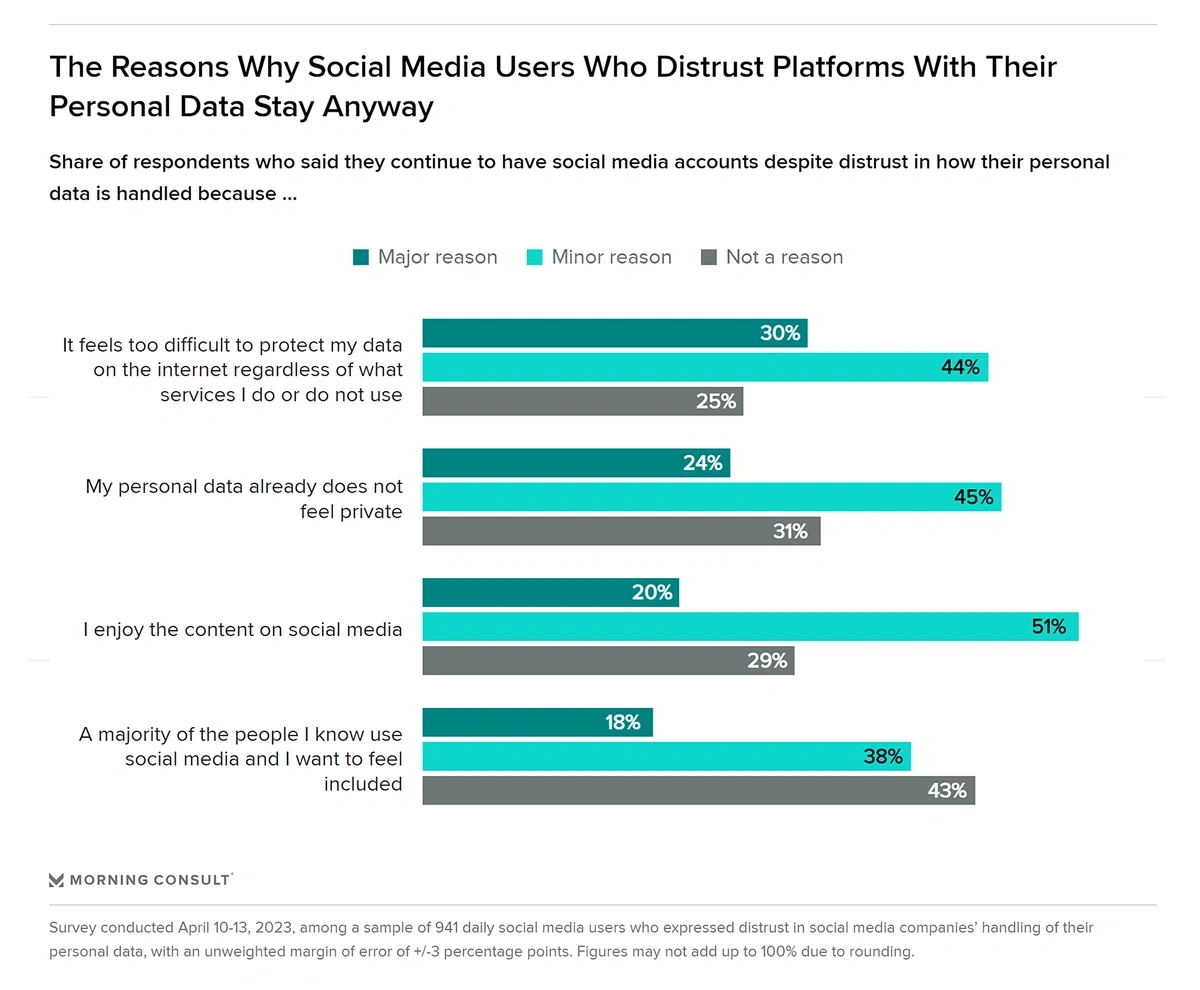

Despite the privacy concerns, Americans continue to use social media.

There are more than 320 million users in the US and a penetration rate of over 92%.

Surveys suggest this is mainly due to apathy.

Nearly one-third of users say it’s too difficult to protect their data no matter what sites they use, and they cite that as the main reason they stay on social media despite the lack of privacy.

Others say their personal data isn’t private anywhere.

2. AI Tools Create Privacy Concerns for Businesses and Consumers

The mass introduction of AI systems over the past year has many tech experts, business leaders, and consumers sounding the alarm about potential privacy concerns.

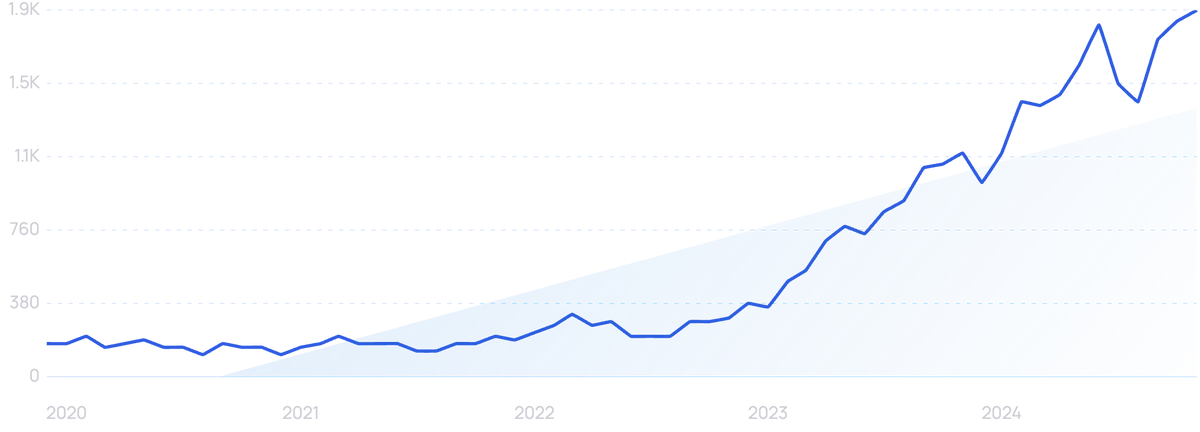

Search volume for “AI privacy” is up more than 1,011% in the past 5 years.

One-third of businesses surveyed by IAPP said AI governance is a top priority.

A major part of that governance is ensuring the privacy of company and customer data.

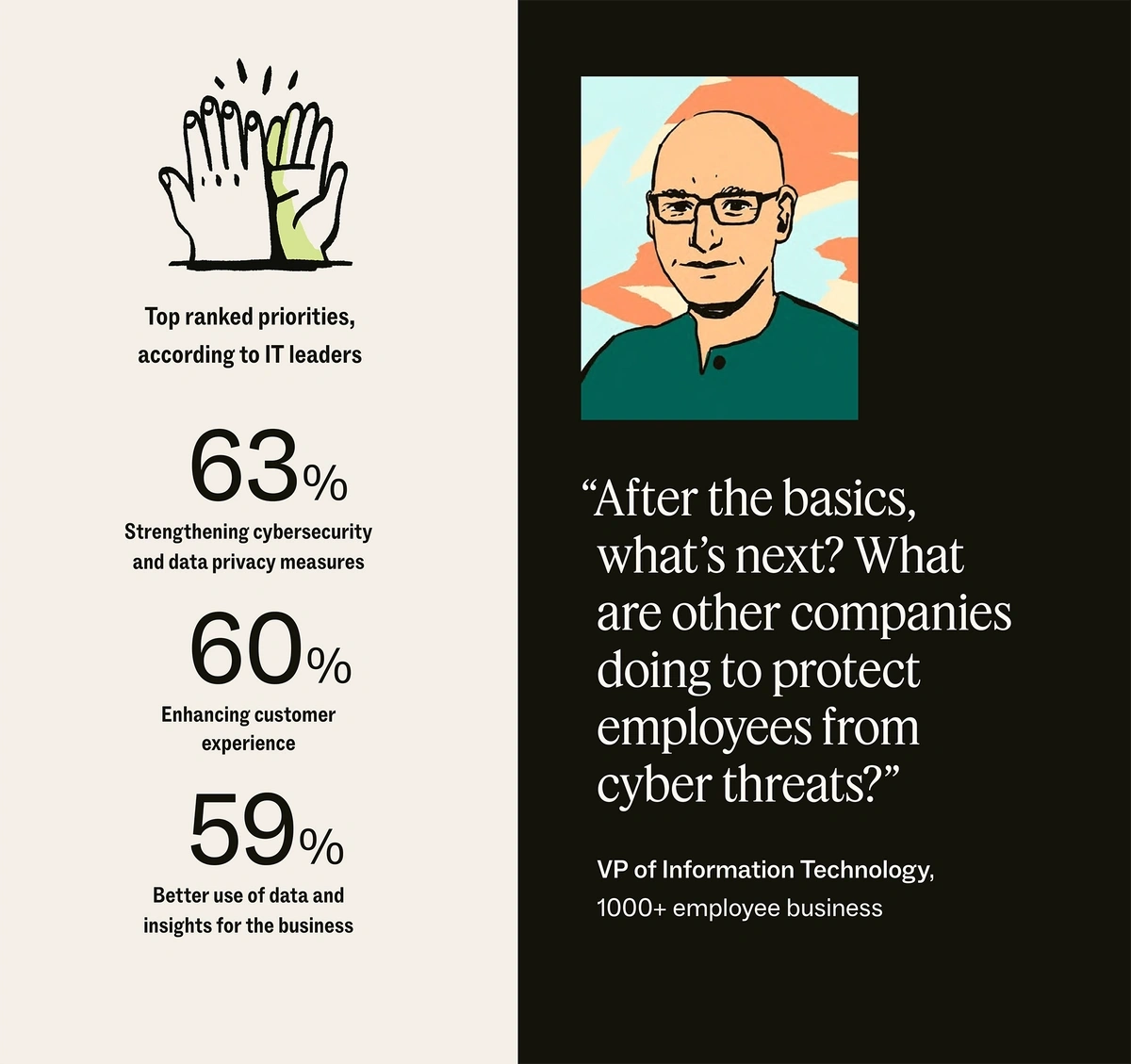

A survey from Zendesk showed 84% of IT leaders reported an increased importance of deploying AI and 87% of them are investing in data privacy and cybersecurity solutions as part of their deployments.

Zendesk reports that 63% of all IT leaders say they’re focused on strengthening cybersecurity and data privacy measures.

One concern is that employees may not realize how little privacy they have when sharing data with public AI systems.

For example, Samsung employees leaked confidential source code to ChatGPT in three separate incidents during the spring of 2023, and the company subsequently banned the use of the AI tool.

Several banks, like Citigroup and JPMorgan Chase, have also banned ChatGPT.

In addition, Apple has banned GitHub’s Copilot, an AI-powered programming tool.

Apple, along with several other companies, has restricted the use of generative AI by their employees.

It’s not just businesses that are concerned about the data privacy implications of using AI. Many consumers view AI as a threat to privacy, as well.

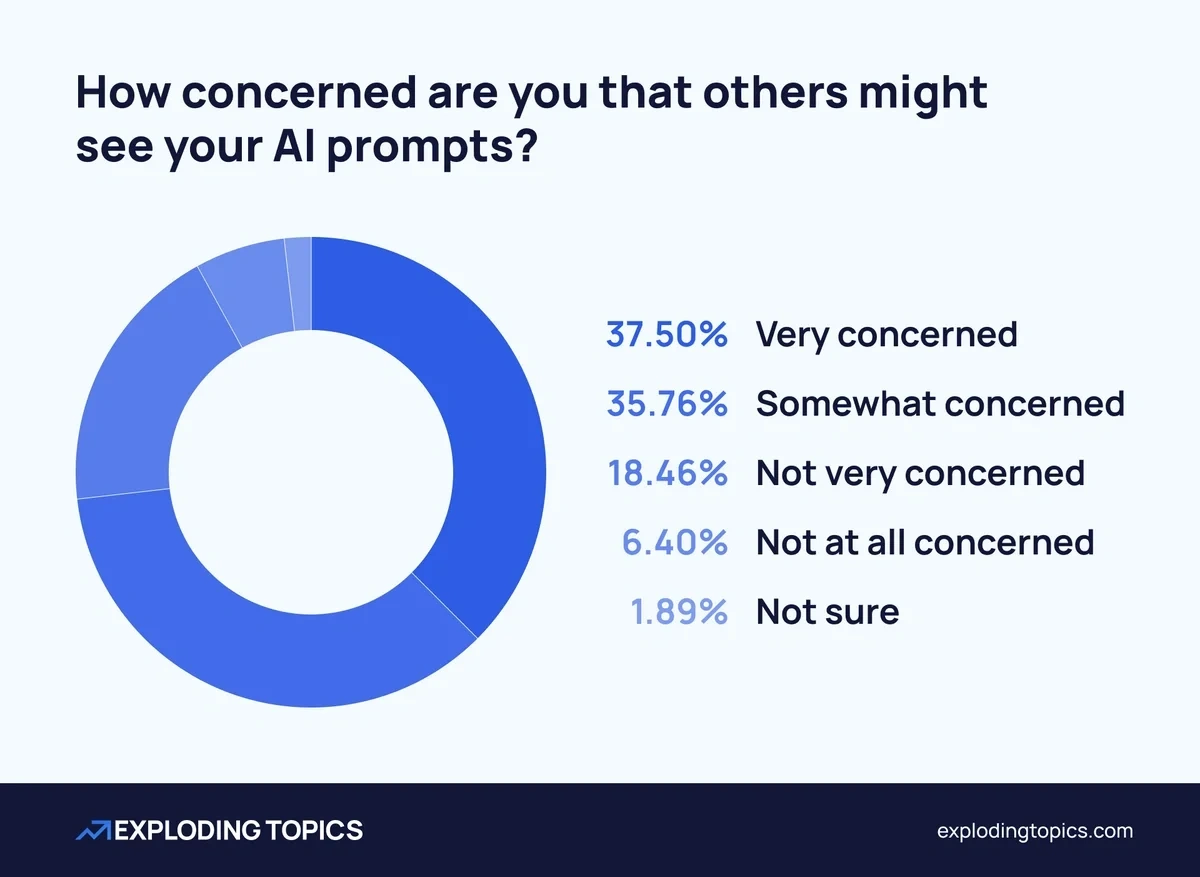

An Exploding Topics AI privacy survey of US consumers found that 73.26% are at least somewhat concerned that others may see their AI prompts.

Other research shows that nearly 60% of global consumers say AI is a significant threat to their privacy.

When AI models are trained, they often obtain and use personal data. This data may include sensitive information like addresses, financial information, medical records, and social security numbers.

The larger problem is that cybercriminals may reverse engineer AI systems to gain access to specific data and personal information.

The World Economic Forum has called on tech companies to prioritize privacy in the collection and management of data.

In some cases, AI systems can expose sensitive information on their own, with no attacker involved. This is called “predictive harm”.

The complex algorithms and capabilities of AI systems enable them to take a handful of seemingly unrelated data points and use them to predict very sensitive attributes about a person. That could include things like sexual orientation, health status, and political affiliation.

In addition, once an AI tool has been trained on this kind of personal data there’s virtually no way to remove it.

Tech experts say the only way to remove the data is to delete the AI model in its entirety.

3. The Number of Costly Data Breaches Continues to Climb

Data from IBM shows the average cost of a data breach in 2023 was $4.45 million. That’s a 15% increase over three years.

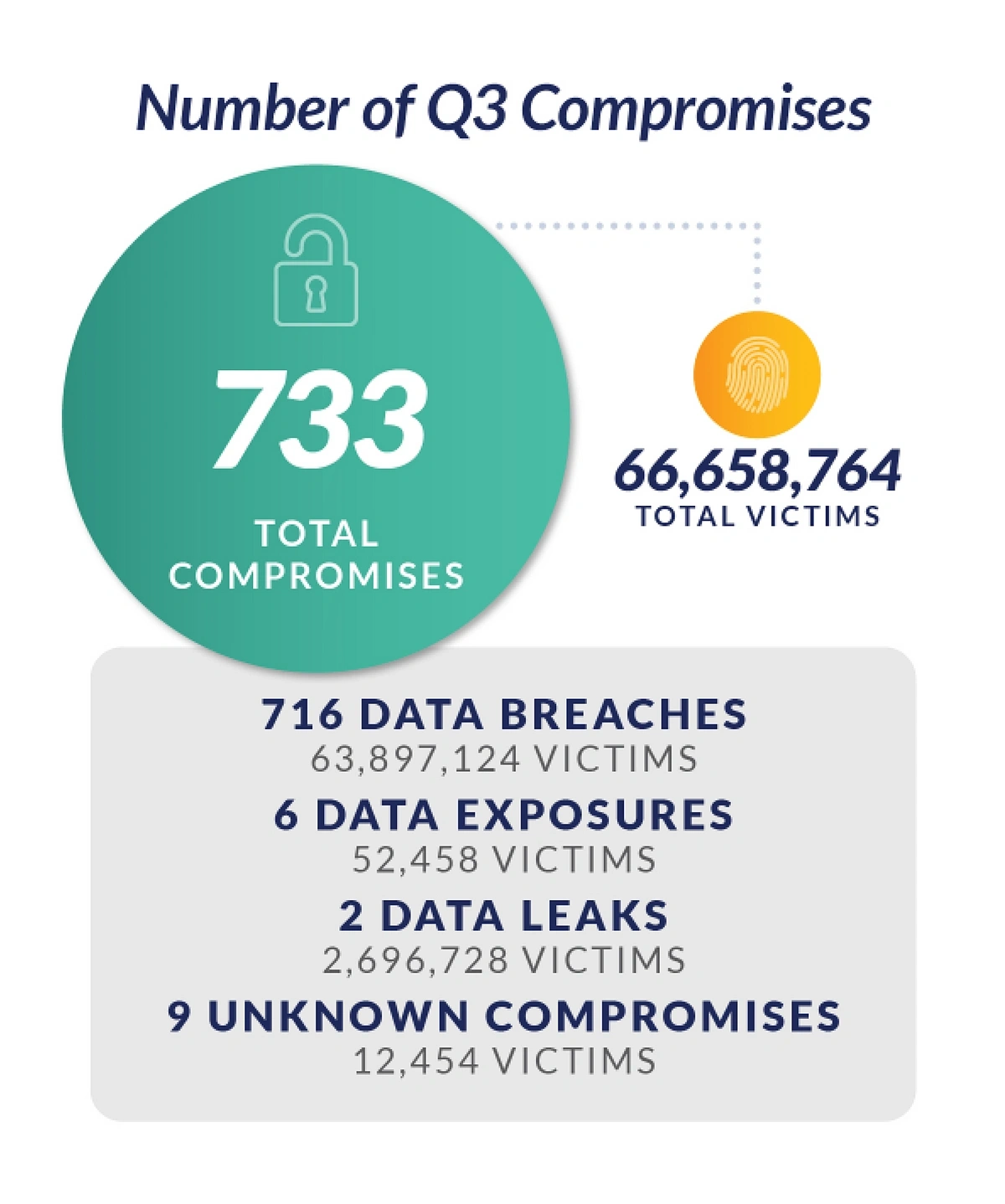

According to the Identity Theft Resource Center, there were 2,116 data compromises in the first nine months of 2023 alone.

Through those compromises, criminals got their hands on the data of 233.9 million people.

The Identity Theft Resource Center noted that phishing and other social engineering attacks are among the most frequently reported causes of data breaches.

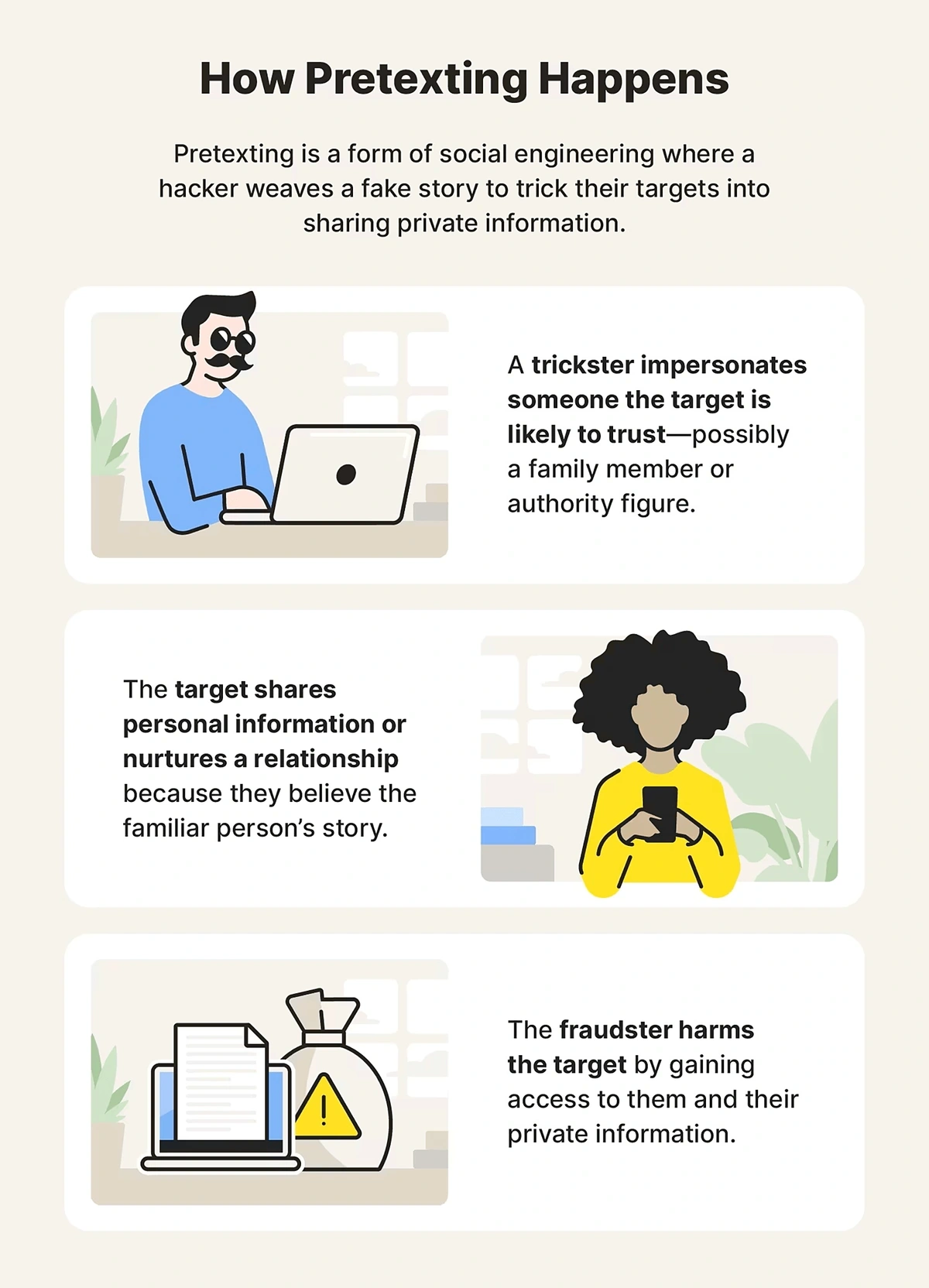

A particular type of social engineering called pretexting is on the rise.

Pretexting is one method malicious individuals use to gain access to data.

In a pretexting attack, hackers create believable stories, or pretexts, to convince individuals to trust them. They often make it appear as if their messages come from someone the target trusts, like a friend or boss. Once they have that trust, they’re able to extract sensitive information and data.

This can happen via email, voice, or texting.

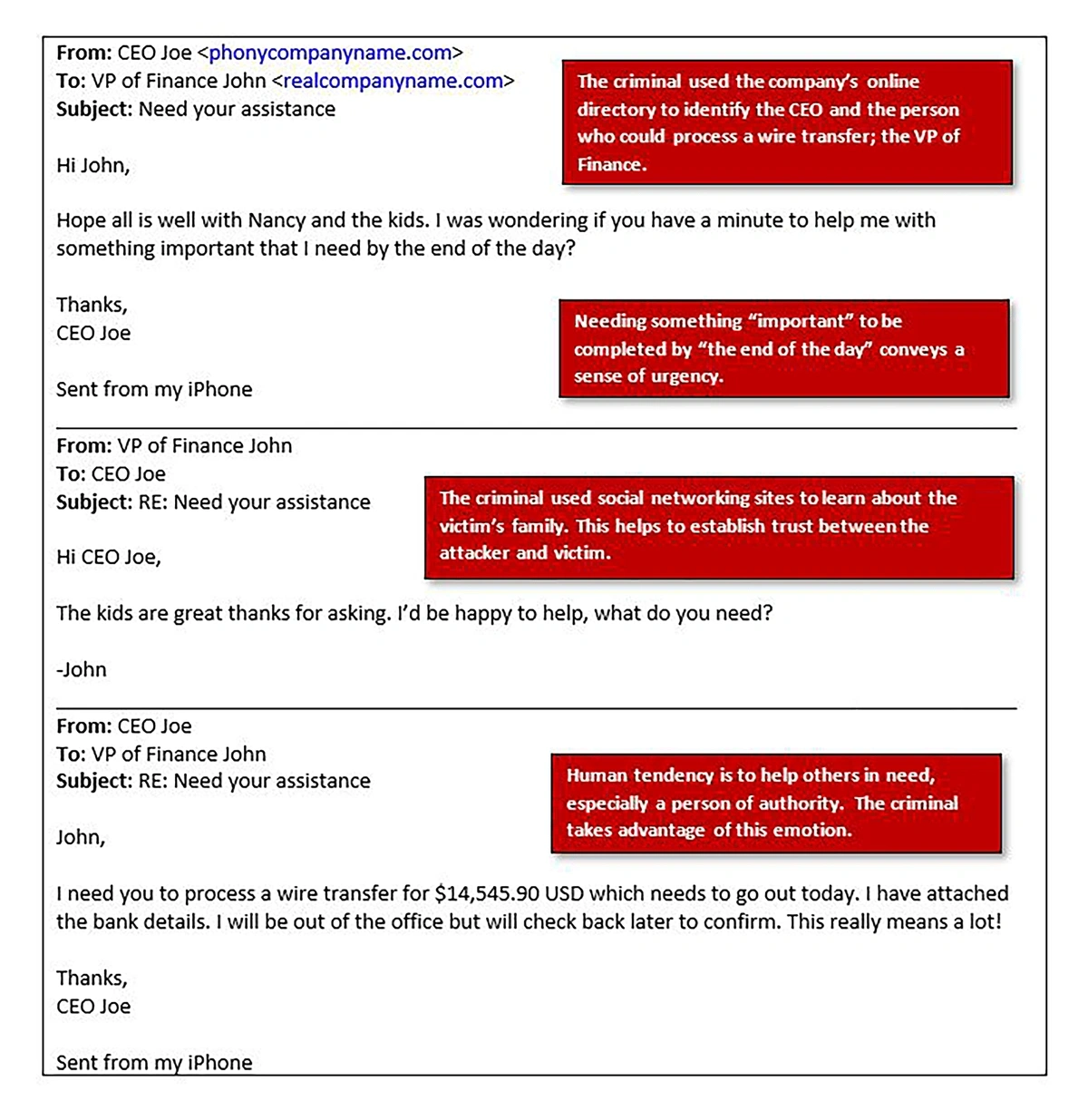

An example of a pretexting attack sent through email.

A report from Verizon showed 50% of all social engineering attacks from November 2021 to October 2022 were pretexting attacks.

One of the most successful hacking groups, Scattered Spider, frequently uses text-based pretexting and fake phone calls as part of its strategy.

Ransomware is also on the rise. That’s according to a recent report commissioned by Apple.

The report found a 70% increase in ransomware attacks in the first nine months of 2023 compared to the same time period in 2022.

Minneapolis Public Schools fell victim to a ransomware attack in March 2023.

The district refused to pay the $1 million ransom, and 300,000 files filled with private student and employee data were leaked on the dark web.

In another incident, the City of Dallas was attacked with ransomware in May 2023.

The stolen data included “tons of personal information of employees (phones, addresses, credit cards, SSNs, passports), detailed court cases, prisoners, medical information, clients’ information, and thousands and thousands of governmental documents”.

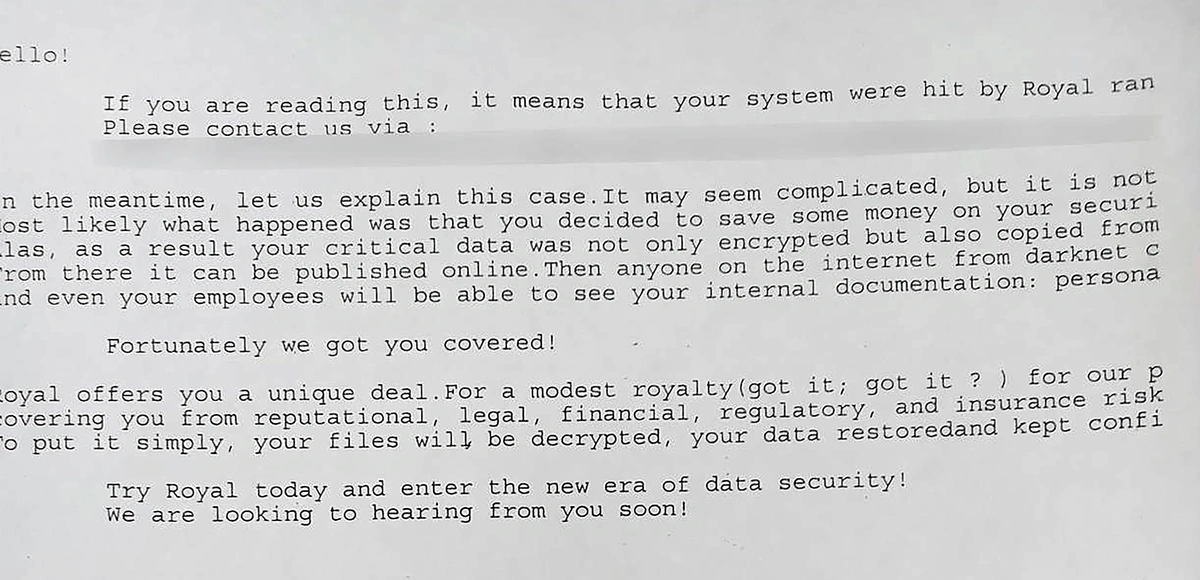

The ransomware note received by Dallas city officials.

4. The Vulnerabilities of Healthcare Data

Information provided to insurers and medical professionals is strictly safeguarded by HIPAA.

But in today’s world, that’s not nearly enough protection.

Nearly 75% of individuals are concerned about the privacy of their medical data.

Their fears are not unfounded.

In 2023 alone, more than 540 organizations reported data breaches to the HHS Office for Civil Rights.

Those breaches impacted 112 million individuals, more than double the 2022 total.

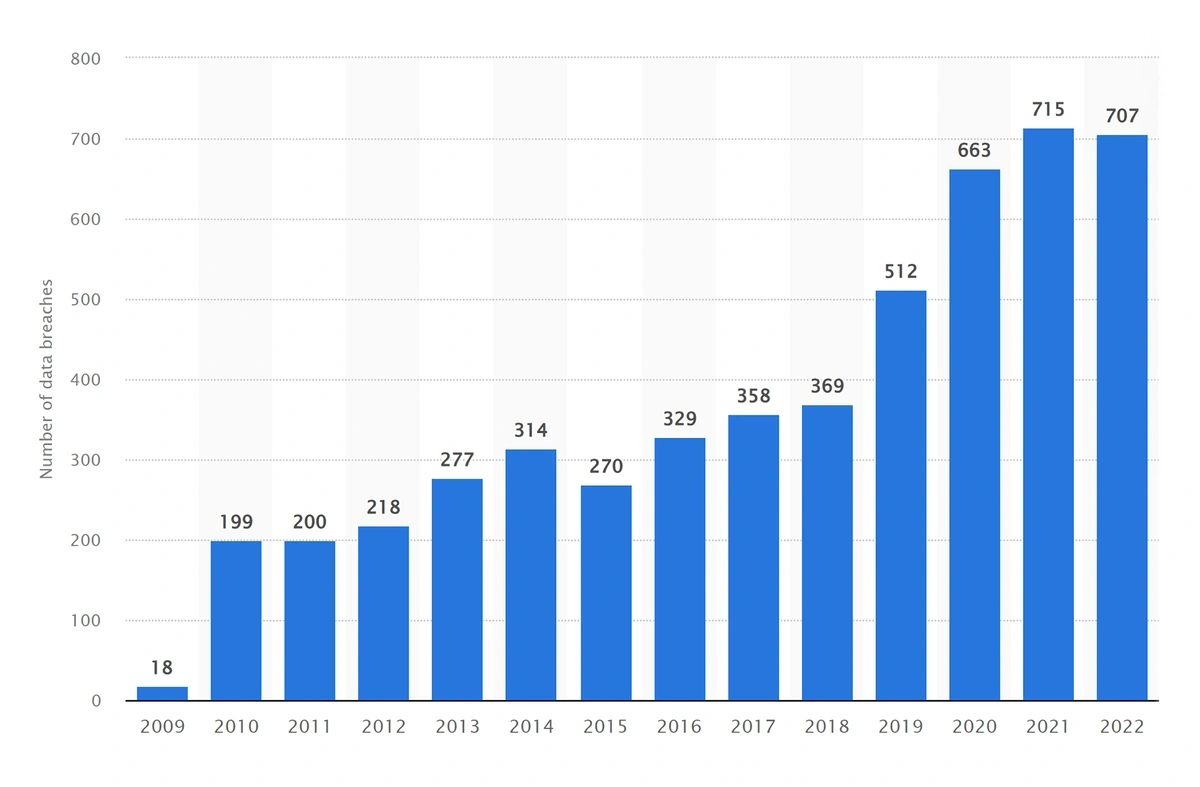

The number of healthcare data breaches has dramatically increased since 2019.

In addition to cyberattacks, there are less overtly malicious threats to health data online.

Any medical data that’s typed into a search bar, entered in an app, or tracked on a wearable is not protected by HIPAA. Many times, that data isn’t protected at all.

If the data is protected, it’s up to the company collecting the data to keep it safe.

But lately, there have been many incidents in which healthcare data has been tracked or sold without users’ consent.

The FTC urges individuals to fully evaluate the privacy policies of health apps.

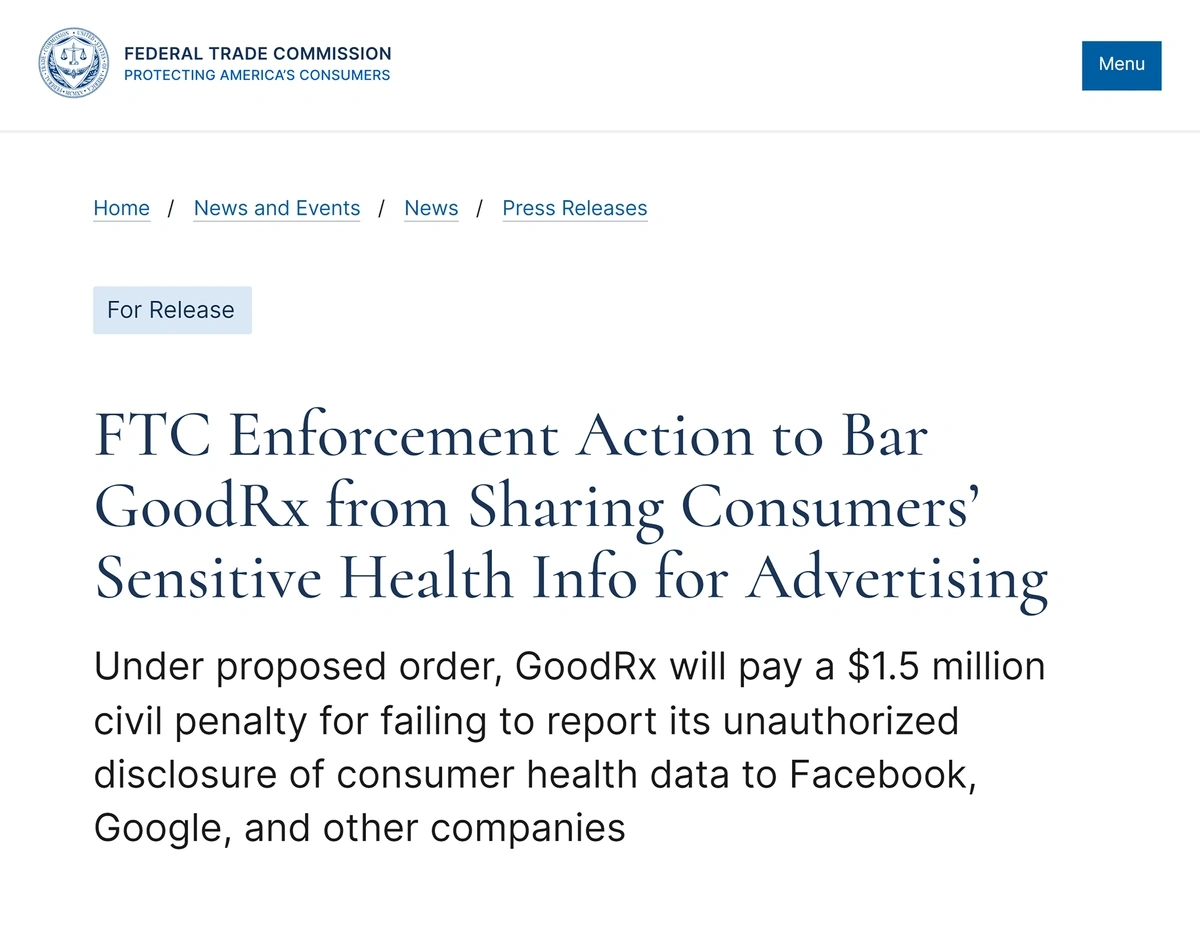

Take GoodRx, for example.

The company led users to believe their data was safe under HIPAA protections, and the company assured users it would not sell the data.

GoodRx, however, sold the data to Facebook, Google, and other businesses.

The FTC got involved and fined GoodRx $1.5 million for sharing customer information with third-party advertisers.

The mental health app BetterHelp was fined $7.8 million for similar actions.

Lawmakers in Connecticut, Washington, and Nevada have passed legislation that expands the protection of what they call “consumer health data”. That mostly covers any sensitive medical data that’s not already covered by HIPAA.

The laws require users to opt in to data collection. They also specify that entities must give users a way to revoke their permission.

5. A New Wave of Privacy-Enhancing Technologies

Privacy-enhancing technologies (PETs) allow businesses to leverage their data while protecting it.

Gartner predicted that more than 60% of large businesses would employ at least one PET solution by 2025.

PETs work by keeping data essentially hidden while a researcher or business user works with it, so both privacy and utility are preserved.

These tools cover everything from data collection and processing to analysis and sharing.

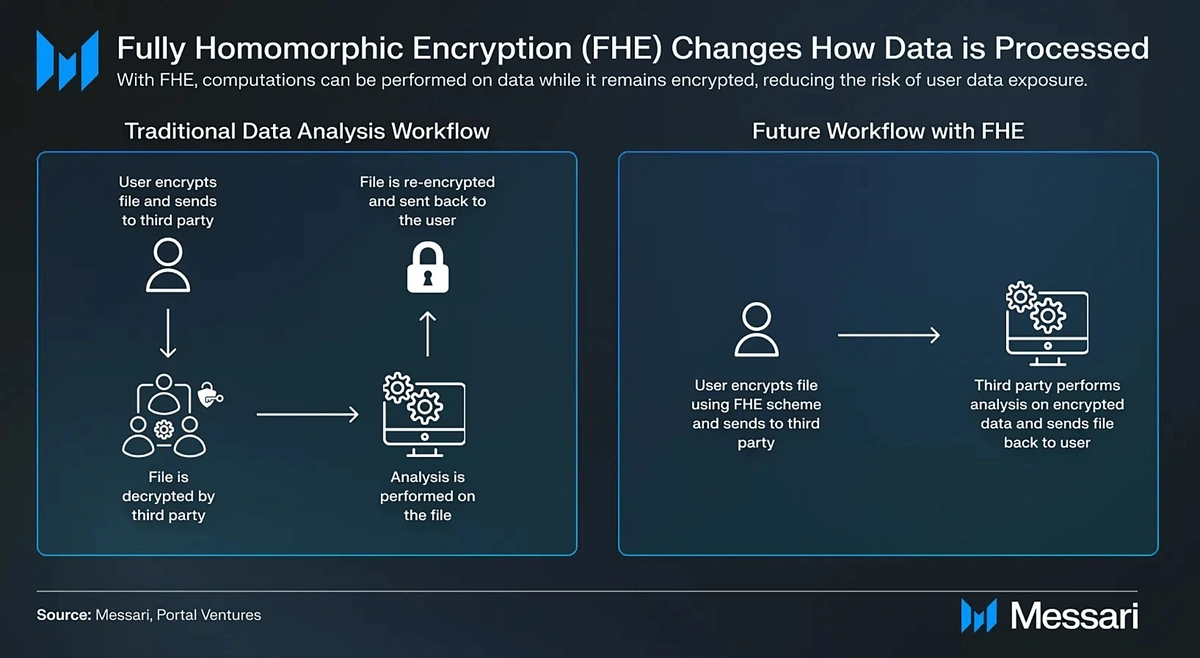

In recent months, there’s been increased interest in fully homomorphic encryption (FHE) of data.

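FHE keeps sensitive data encrypted end to end, including while it is being processed.

Homomorphic encryption allows users to perform computations on encrypted data without decrypting it.

This type of encryption comes in three levels: partially, somewhat, and fully homomorphic. Partially homomorphic schemes support a single type of operation (such as addition), somewhat homomorphic schemes support more than one type but only a limited number of times, and fully homomorphic schemes support arbitrary computation.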

In FHE, users have total flexibility to perform any operations on the data and retain its encryption.
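To make "computing on encrypted data" concrete, here is a minimal sketch of the Paillier cryptosystem in Python. Note that Paillier is only partially (additively) homomorphic, not FHE, and this toy version is an illustration rather than how any commercial product works. The function names and the salary figures are hypothetical, and the primes are far too small for real security. It assumes Python 3.8 or later.

```python
import math
import secrets

def keygen(p: int = 2**31 - 1, q: int = 2**61 - 1):
    """Toy Paillier keys from two known Mersenne primes (NOT secure sizes)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse; valid because we fix g = n + 1
    return n, (lam, mu)

def encrypt(n: int, m: int) -> int:
    """Encrypt 0 <= m < n as (1 + n)^m * r^n mod n^2 with fresh random r."""
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    # (1 + n)^m mod n^2 simplifies to 1 + n*m by the binomial theorem.
    return (1 + n * m) % n_sq * pow(r, n, n_sq) % n_sq

def decrypt(n: int, private_key, c: int) -> int:
    """Recover the plaintext using the private values (lam, mu)."""
    lam, mu = private_key
    l_value = (pow(c, lam, n * n) - 1) // n
    return l_value * mu % n

def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the hidden plaintexts (mod n)."""
    return c1 * c2 % (n * n)

def scale_encrypted(n: int, c: int, k: int) -> int:
    """Raising a ciphertext to the power k multiplies the plaintext by k."""
    return pow(c, k, n * n)

# Hypothetical example: total two salaries without ever decrypting either one.
n, private_key = keygen()
salary_a = encrypt(n, 52_000)
salary_b = encrypt(n, 61_000)
encrypted_total = add_encrypted(n, salary_a, salary_b)
print(decrypt(n, private_key, encrypted_total))  # 113000
```

The party doing the arithmetic only ever sees ciphertexts, and only the key holder can read the result. A fully homomorphic scheme extends this idea from addition to arbitrary computation, which is where the heavy performance cost comes from.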

FHE software has long been out of reach for most because it’s computationally intensive and up to 1,000 times slower than other types of data processing.

But that’s set to change in 2025.

Industry experts are eager to access new FHE software.

There are at least six companies vying to commercialize chips that can utilize FHE in a fast, efficient manner. To be usable, the chips would need to reduce the computing time from weeks down to just seconds.

The technology could offer enhanced privacy in areas like financial records, medical data, and blockchain.

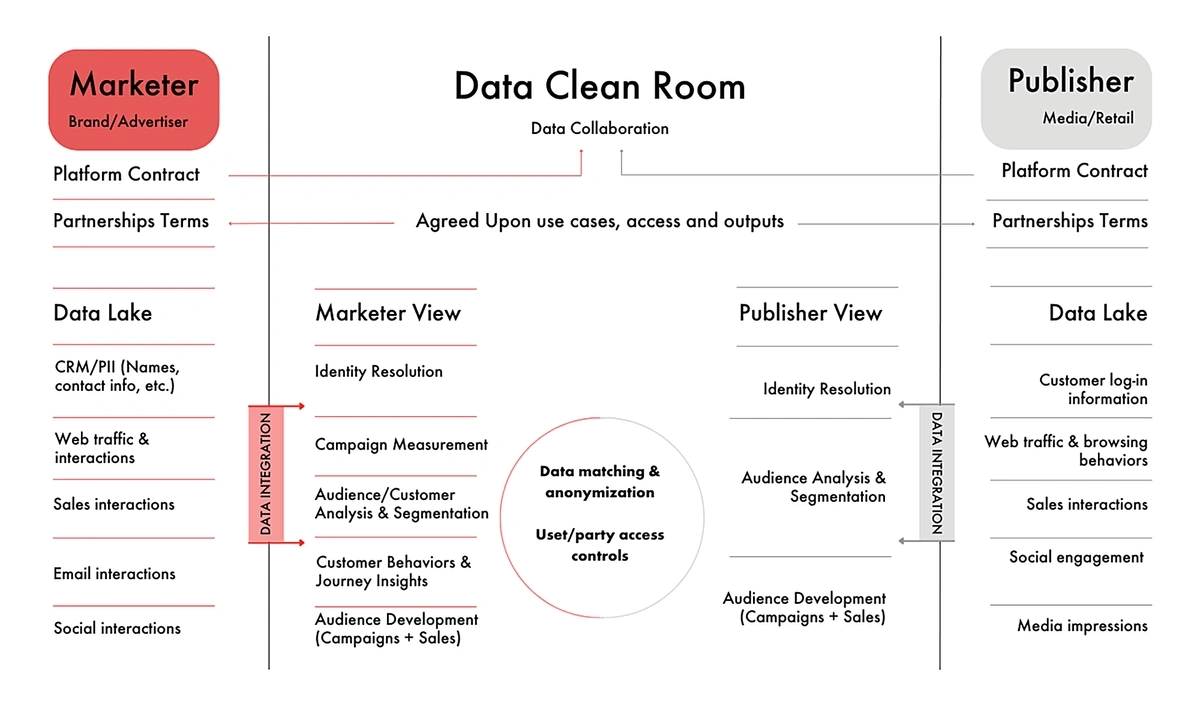

Another privacy-enhancing technology that’s gotten a lot of attention lately is the concept of data clean rooms.

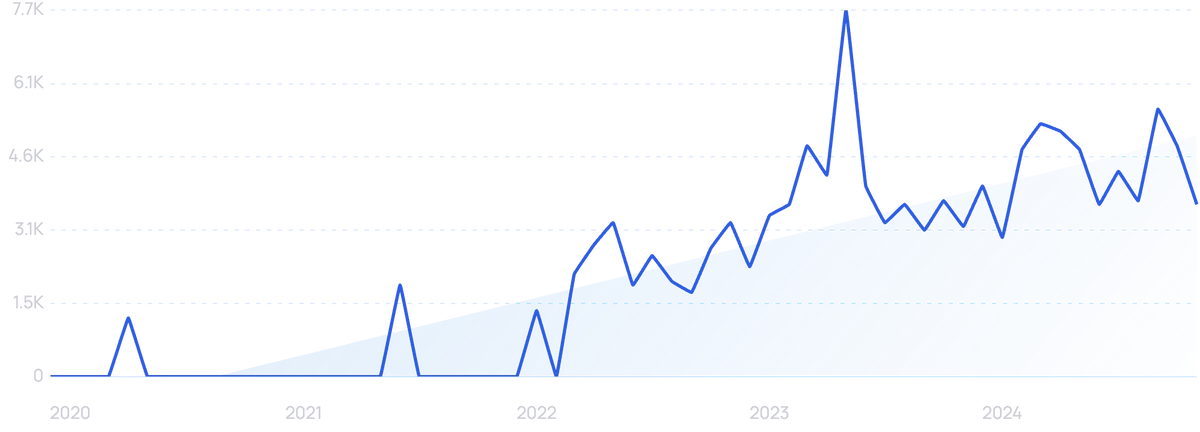

Search interest in “data clean room” has increased 4,600% in the past 5 years.

These virtual spaces provide a secure way for advertisers and media companies to share data. This allows advertisers to see the impact of their campaigns across platforms and publishers.

According to the Interactive Advertising Bureau, 64% of companies using PETs are employing data clean rooms as part of their overall strategy.

Data clean rooms ensure the privacy of data while offering benefits to marketers and publishers.
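As a rough illustration of the idea, the Python sketch below shows one common pattern: both sides contribute hashed identifiers, and the clean room only releases aggregate counts above a minimum group size. Everything here is an assumption for illustration (the shared salt, the threshold of 3, the function names, and the sample data), not a description of how any particular vendor's clean room works.

```python
import hashlib
from collections import Counter

SHARED_SALT = b"hypothetical-shared-salt"  # assumption: agreed on out of band
MIN_GROUP_SIZE = 3  # assumption: smallest audience the clean room will report

def pseudonymize(email: str) -> str:
    """Hash a normalized email so raw identifiers never enter the clean room."""
    normalized = email.strip().lower().encode("utf-8")
    return hashlib.sha256(SHARED_SALT + normalized).hexdigest()

def campaign_overlap(advertiser_buyers, publisher_exposures):
    """Count distinct buyers per campaign, suppressing small groups.

    advertiser_buyers: hashed IDs of customers who made a purchase.
    publisher_exposures: (hashed ID, campaign name) pairs from the publisher.
    """
    buyers = set(advertiser_buyers)
    seen = set()
    counts = Counter()
    for hashed_id, campaign in publisher_exposures:
        if hashed_id in buyers and (hashed_id, campaign) not in seen:
            seen.add((hashed_id, campaign))
            counts[campaign] += 1
    # Only aggregates at or above the threshold leave the clean room.
    return {c: k for c, k in counts.items() if k >= MIN_GROUP_SIZE}

# Hypothetical data from each side.
buyers = [pseudonymize(e) for e in
          ["a@example.com", "b@example.com", "c@example.com", "d@example.com"]]
exposures = [(pseudonymize(e), campaign) for e, campaign in [
    ("A@example.com", "spring_sale"),
    ("b@example.com", "spring_sale"),
    ("c@example.com", "spring_sale"),
    ("d@example.com", "brand_video"),
    ("z@example.com", "brand_video"),
]]
report = campaign_overlap(buyers, exposures)
print(report)  # {'spring_sale': 3}
```

The advertiser learns that three buyers saw the spring campaign, but not which ones, and the single-buyer "brand_video" group is withheld because a count that small could point to an individual.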

In mid-2023, Paramount launched a data clean room.

NBC, Roku, Disney, and Clear Channel Outdoor have them as well.

Key Takeaways

Concerned consumers are likely to continue their calls for more laws and regulations aimed at protecting their data. At the same time, new technology like AI has the potential to introduce even more privacy issues.

In the coming years, PETs are likely to play a key role as businesses deploy enhanced data privacy strategies. Organizations wanting to stay ahead of malicious threats will be looking for advanced cybersecurity solutions, as well.

Stay tuned: the future of data privacy is very much in flux.

Exploding Topics is owned by Semrush. Our mission is to provide accurate data and expert insights on emerging trends. Unless otherwise noted, this page’s content was written by either an employee or a paid contractor of Semrush Inc.

Written By

Alison is an accomplished copywriter with proven success in editing, marketing, research, and management. Before writing for E... Read more