Get Advanced Insights on Any Topic

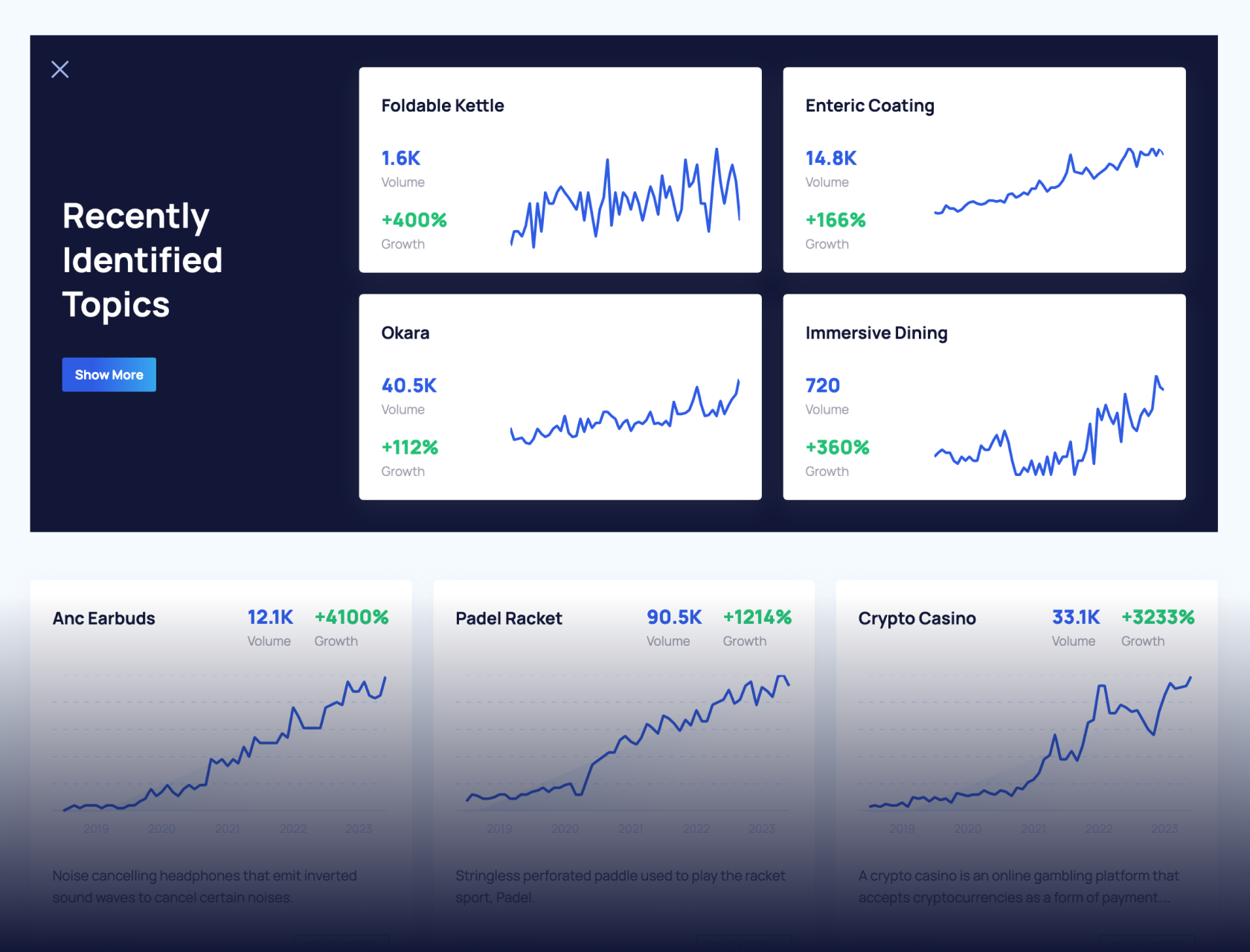

Discover Trends 12+ Months Before Everyone Else

How We Find Trends Before They Take Off

Exploding Topics’ advanced algorithm monitors millions of unstructured data points to spot trends early on.

Features

Keyword Research

Performance Tracking

Competitor Intelligence

Fix Your Site’s SEO Issues in 30 Seconds

Find technical issues blocking search visibility. Get prioritized, actionable fixes in seconds.

Powered by data from

How to Rank on AI Search Engines in 2025: Practical LLMO Guide

Visibility in AI search engines is a delicate combination of on-page, off-page, and technical optimization.

For marketers, AI search platforms are as coveted as they are mysterious.

With billions of prompts served per day, showing up in AI answers can open up a new revenue stream for your business.

The challenge is that LLMs (Large Language Models) are probabilistic black boxes. Their ranking factors are murky, so it’s hard to know how to show up in their answers.

But patterns are starting to emerge.

After analyzing a large number of AI search results, I’ve identified ranking strategies that are working right now.

Top Generative AI Optimization Strategies for LLM Visibility

- Optimize for passages: LLMs extract specific text chunks. Use direct answers at the start of sections, short paragraphs, scannable headings, and comparison tables to maximize extractability

- Target LLM-favorite content types: Comparison content, "best of" product reviews, tools/calculators, and opinion pieces get cited more frequently by AI platforms

- Implement complete structured data: Proper schema markup with all important fields filled out helps your content show up in AI Overviews and LLM responses

- Track AI-specific metrics: Monitor Share of Voice, AI citations vs mentions, and referral traffic from individual AI platforms to measure what's working and guide optimization efforts

- Anticipate and address query fan-out: Identify query fan-outs for the main topic and cluster them by intent to cast a wider net for AI citations

- Focus on content freshness: LLMs appear to favor recent content, and some platforms apply explicit time decay parameters. Regularly update articles and publish with strong initial distribution to maximize visibility

- Build off-page authority signals: Get mentioned on platforms LLMs trust (LinkedIn, Reddit, GitHub, Stack Overflow) through PR outreach, thought leadership, and link-worthy original research.

Content Optimization for AI Visibility

Like traditional SEO, the quality, structure, and format of your content play a huge role in AI discoverability.

Passage Optimization and Extractability

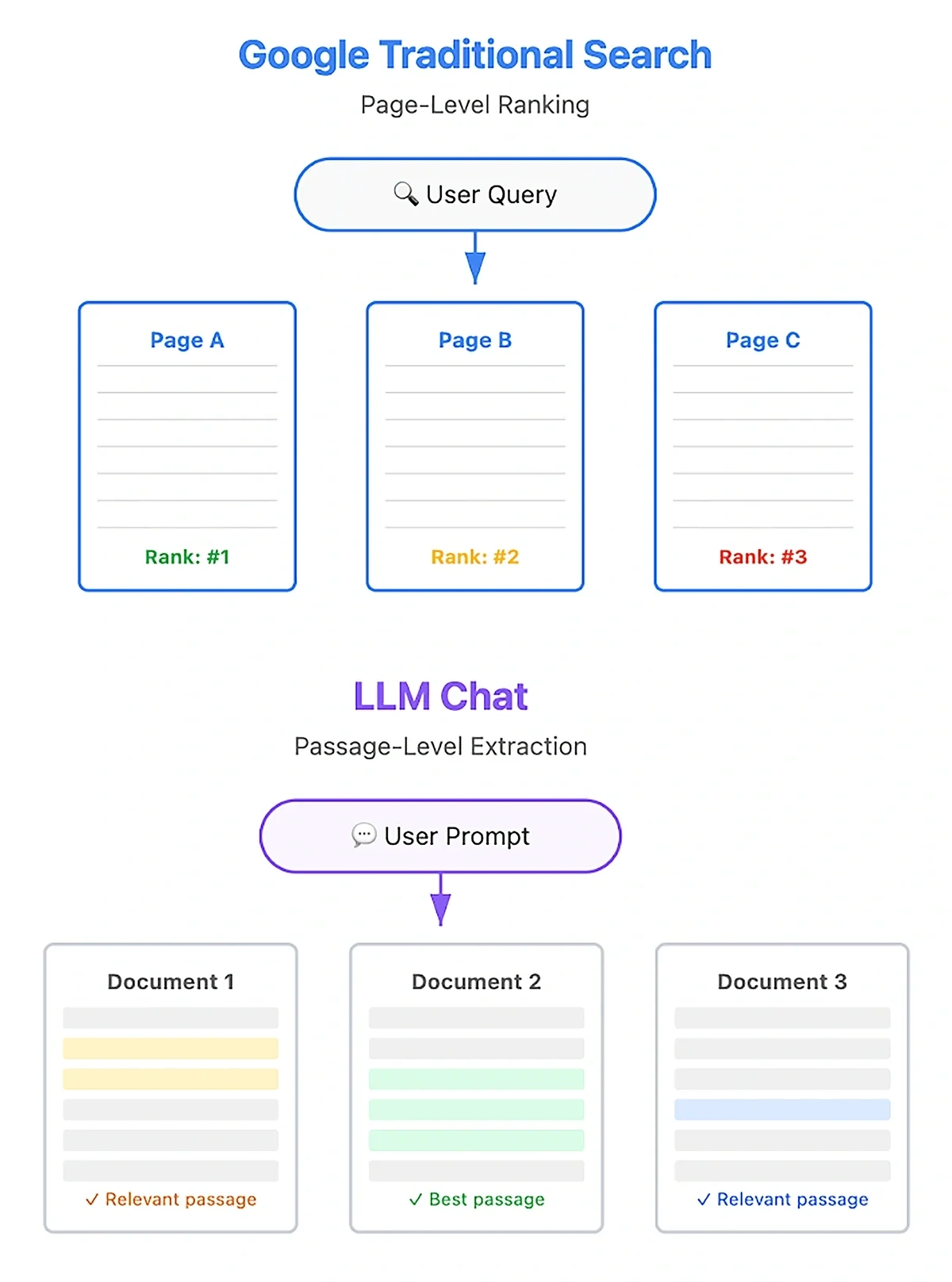

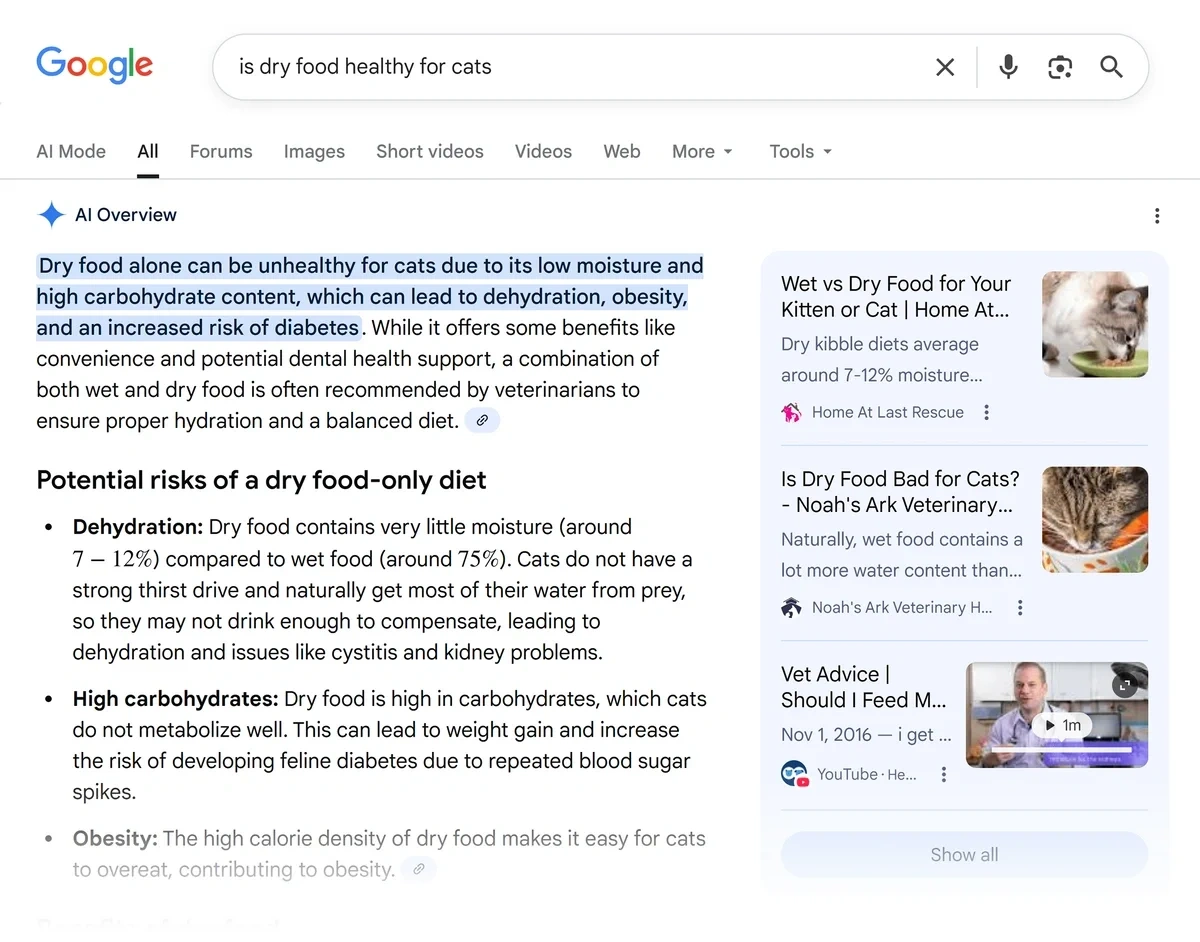

Traditional search optimization looks at content at the page level.

Standard search engines rank pages, after all.

But LLM optimization requires a different approach, because an AI model focuses on specific passages rather than on your content as a whole.

In the context of AI search, a passage is a piece of text that makes complete sense on its own.

So when web search is enabled in a tool like ChatGPT, it tries to find the passage on a page that best answers the query.

This LLM behavior presents excellent opportunities: it means that every passage in your article is a candidate for being pulled into an AI answer.

So, how do you go about crafting AI-ready passages for optimum extractability?

The short answer is to chunk your content carefully. Here are some ways you can do that:

- Direct answers: Avoid beating around the bush and give an exact answer as close to the start of each section as possible.

- Short paragraphs: Use short, information-dense paragraphs when answering the main intent and sub-intents. At the same time, balance them with more natural, human writing so your content doesn’t sound robotic.

- Scannable sections: Organize your content into short sections with appropriate heading levels (H2, H3, etc.). The goal is to help LLM bots and agentic AI models interacting with your page land on the relevant parts of your content.

- Comparison tables: Use tables to summarize comparisons between products and concepts. It’s easier for LLMs to extract data from structured elements like tables.
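To make the chunking advice more concrete, here is a minimal Python sketch that audits a markdown draft for extractability. It groups paragraphs under their nearest heading, then flags sections whose opening sentence runs long (a rough proxy for a missing direct answer) and paragraphs that exceed a word limit. The function names and both thresholds are illustrative assumptions, not values any LLM is known to use.

```python
import re

# Illustrative thresholds (assumptions, not known LLM parameters).
MAX_WORDS_PER_PARAGRAPH = 60
MAX_WORDS_FIRST_SENTENCE = 30


def split_sections(markdown: str) -> list[tuple[str, list[str]]]:
    """Group paragraphs under their nearest markdown heading (#, ##, ...)."""
    sections: list[tuple[str, list[str]]] = []
    heading, paragraphs, current = "(intro)", [], []
    # A trailing empty line guarantees the last paragraph is flushed.
    for line in markdown.splitlines() + [""]:
        text = line.strip()
        if text.startswith("#") or not text:
            # A heading or blank line ends the paragraph being collected.
            if current:
                paragraphs.append(" ".join(current))
                current = []
            if text.startswith("#"):
                if paragraphs:
                    sections.append((heading, paragraphs))
                heading, paragraphs = text.lstrip("#").strip(), []
        else:
            current.append(text)
    if paragraphs:
        sections.append((heading, paragraphs))
    return sections


def audit_passages(markdown: str) -> list[str]:
    """Return warnings for passages that may be hard for an LLM to extract."""
    warnings = []
    for heading, paragraphs in split_sections(markdown):
        # Naive sentence split: the first sentence should carry the direct answer.
        first_sentence = re.split(r"(?<=[.!?])\s+", paragraphs[0], maxsplit=1)[0]
        if len(first_sentence.split()) > MAX_WORDS_FIRST_SENTENCE:
            warnings.append(f"[{heading}] opening sentence is long; lead with a direct answer")
        for i, para in enumerate(paragraphs, start=1):
            words = len(para.split())
            if words > MAX_WORDS_PER_PARAGRAPH:
                warnings.append(f"[{heading}] paragraph {i} has {words} words")
    return warnings
```

Word counts are a blunt instrument, so treat the warnings as prompts for a human edit rather than rules.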

To write answers that LLMs want to cite, you need one critical piece of information: the prompts your audience uses when chatting with AI tools.

If you know the prompts that matter to your business, you can include the answers AI needs to give a good, up-to-date response to those queries.

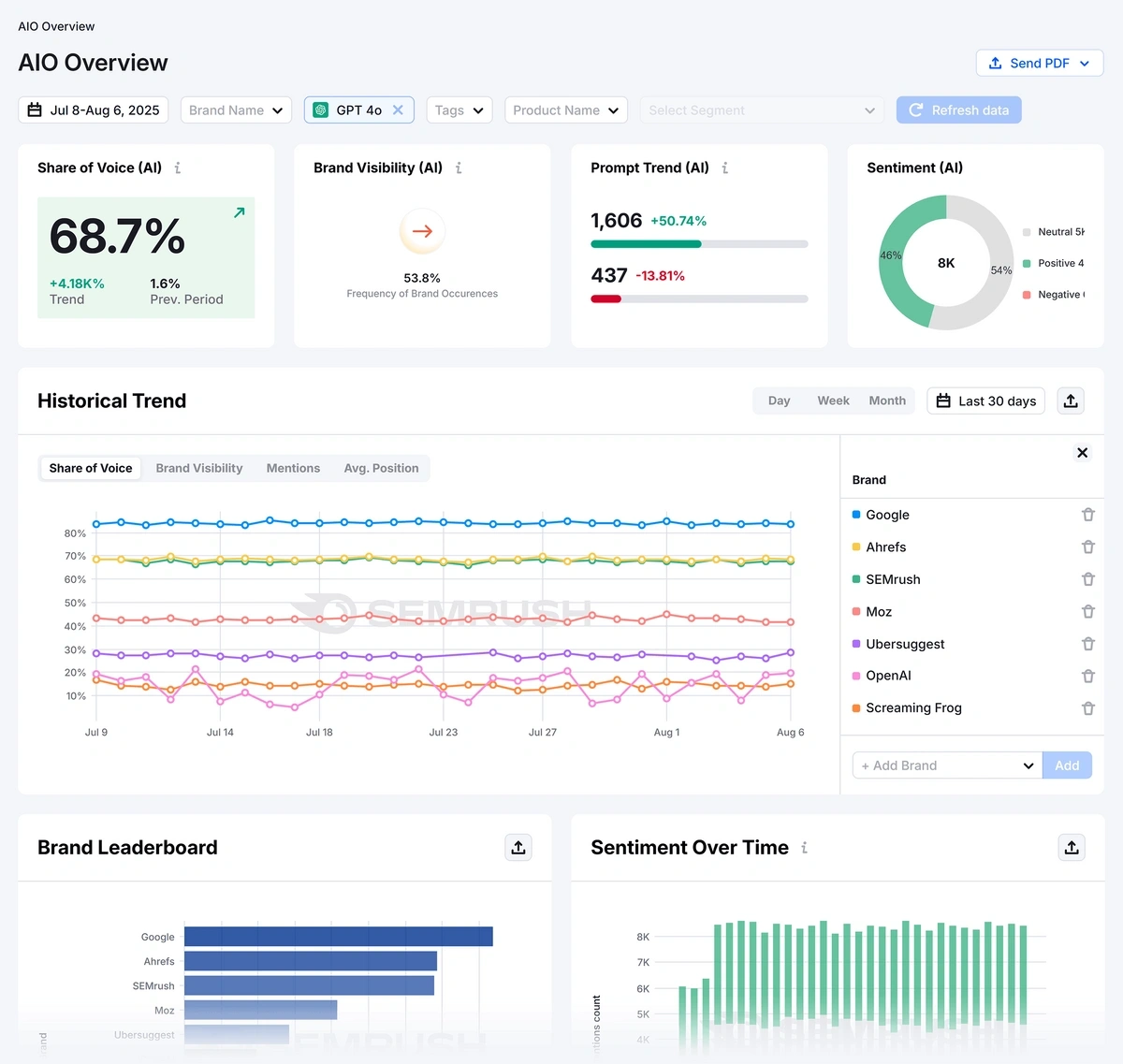

The Semrush Enterprise AIO is a useful tool for identifying prompt themes and patterns that matter to your business.

Use the insights from this tool to address relevant questions using the chunking techniques I described above for the best chance to get picked by LLMs.

LLM-Appropriate Content Types

Most LLMs favor particular kinds of content when pulling from sources on the web. Some page types enjoy significantly more visibility than others.

Target these formats, and you have a good chance of gaining LLM visibility:

1. Comparisons

AI platforms like Google AI Mode and ChatGPT often cite pages with comparison content.

This holds true even when the query prompt doesn’t contain any keywords expressly indicating comparison intent, such as “X vs Y”.

I’ve noted that AI search engines also favor comparison content for eCommerce queries.

LLMs cater to the natural human inclination to understand information in comparative terms.

So even when the comparative intent behind a query is only implicit, you can bet the LLM response will include some kind of comparison.

For example, if you have a post about a tourist destination, the destination itself may not be all your audience wants to know about.

There’s a good chance the reader would also like to know how that destination compares with another popular spot.

Good content producers anticipate these kinds of follow-up questions and address them smartly.

Even if it’s not a true comparison post in the “X vs. Y” format, you can enrich your content by drawing sensible comparisons between related products, alternatives, and ideas.

That’s how you can be the source for AI Overviews and other AI-generated responses when they answer user queries by leaning on comparisons.

2. “Best Of” Product Reviews

As with comparisons, many prompts trigger LLMs to search for listicles about the best products or solutions to a problem.

I asked Perplexity about the best running shoes for specific kinds of runners.

It cited several listicle-style posts rounding up the best products.

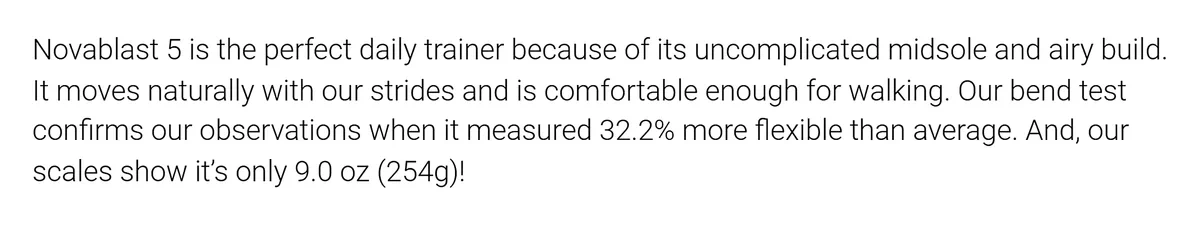

Looking deeper, I’ve also noticed that Perplexity and other LLMs prioritize “best of” roundups backed by real experience and expertise.

For instance, RunRepeat highlights its testing methodology prominently on every page.

Authors on that site also use language that demonstrates they’ve used the products they’re referring to.

This is a great example of content written to win the trust of AI and your audience.

It’s targeting a content format highly likely to appear in LLMs, and it’s using principles of trustworthy, authoritative content to attract AI citations.

The website also avoids giving you a generic ranked list, from best to worst.

Instead, each recommendation is aligned with a specific intent, such as “best stability running shoes” and “best shoes for wide feet”.

These are hyper-focused sub-intents that LLMs love suggesting since their goal is to offer personalized recommendations.

Tie your lists to sub-intents that cover the different needs and pain points of your audience, and you’ll be rewarded with AI citations whenever a user asks an AI about that exact problem.

3. Tools, Calculators, and Templates

Text is the easiest thing for LLMs to produce.

If you really want AI chatbots to surface your website, you need to create assets they can’t easily replicate: online tools, calculators, or even templates.

When I asked Perplexity about content freshness, it came back with a diverse set of results that included our calculator tool as well as multiple strategy guides.

Along with creating link-worthy assets, it’s equally important to apply strong foundational SEO.

That means giving your pages a descriptive title, a meta description, and helpful content that explains everything about your tool.

Yes, AI agents can figure out what a tool or downloadable asset is about by interacting with your site.

But that’s not something you should leave to interpretation. Provide the necessary details and context yourself so LLMs have the exact information they need to recommend your tools and assets.

4. Opinion Pieces and Thought Leadership

People often turn to LLMs when they need something original that they’re struggling to find manually.

The problem is LLMs aren’t good at providing original solutions.

Instead, they rely on existing knowledge of human experts to find unique ideas and perspectives for you.

That’s a gap that content producers can fill by offering insightful opinion pieces that LLMs can quote from.

Here’s an example of an opinion piece I wrote a few weeks back, turning up in Perplexity’s sources:

In addition to creating original pieces with concrete insights that LLMs can summarize, you need strong distribution to enhance visibility for AI.

That means publishing your content across prominent publishers and sites relevant to your industry, sharing it across social media, and so on.

Pro Tip: LinkedIn is frequently cited by tools like Perplexity and ChatGPT. It can be worthwhile to repurpose your thought leadership content as shorter LinkedIn posts and articles.

Authority Signals

LLMs prefer citing websites they consider authoritative.

Earlier, I mentioned the importance of original perspectives in your content for demonstrating authoritativeness and expertise.

But you also need to highlight expertise, experience, authoritativeness, and trustworthiness explicitly through your website.

Some site-level authority signals you should consider adding are:

- Author bios and credentials: Add bylines to every piece of content and link to a page containing the author’s credentials and background.

- Real examples: Use case studies, screenshots, and personal anecdotes to humanize your content and add originality that LLMs can’t replicate. Describe your experience as a practitioner in the industry.

- Methodology and procedures: If you review products, explain your review methodology in detail on a dedicated page and reference it within relevant content as well.

- Editorial policy: Transparency boosts trust. Outline the standards you follow for your content, disclose any affiliate partnerships, conflicts of interest, and so on.

In addition, there are many ways you can inject originality and enhance the informativeness of your content to convince LLMs of your authority and expertise.

One of the best examples is the use of original surveys to explore trending topics, like the AI trust gap research we performed.

The kind of original content that works best for you will depend on your industry. I recommend checking out these ideas for adding information gain to your content.

Query Fan-Out Research

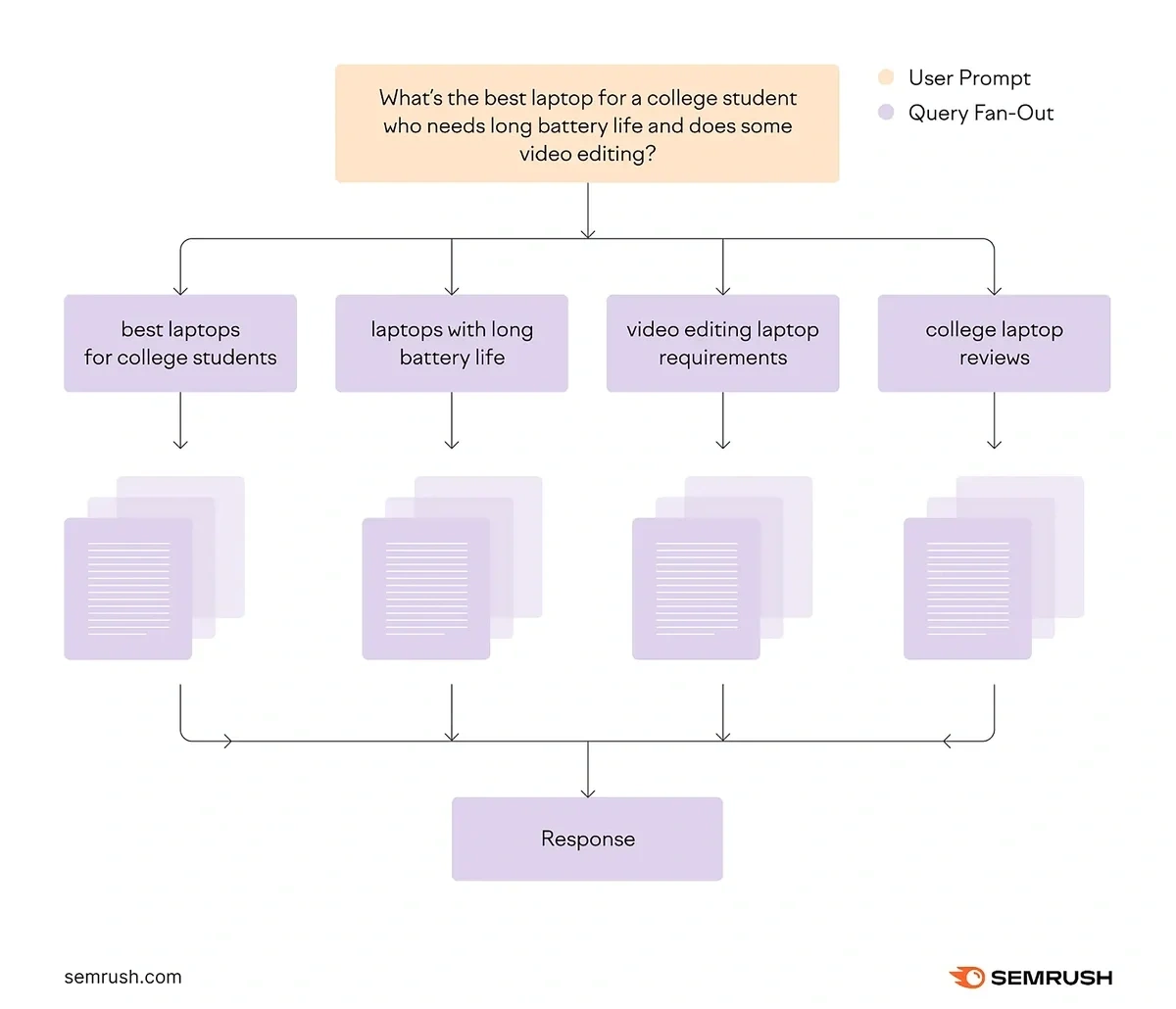

Instead of simply serving relevant pages for the typed query, an LLM can run many different searches in the background when answering your question.

That means effective LLM optimization focuses not only on the explicit prompt but also on the hidden queries associated with it.

To take an example, if you ask “reliable tools for forecasting topic trends,” AI search engines like ChatGPT and Google AI Mode will break your query down into multiple sub-queries, such as:

- best software for predicting future trends

- trend forecasting tools for content strategists

- Google Trends vs Exploding Topics for market research

The AI-generated answer you get is usually a summary of the results from these behind-the-scenes searches.

Thinking in terms of sub-queries is one of the most important ways you can adapt to LLM-powered search.

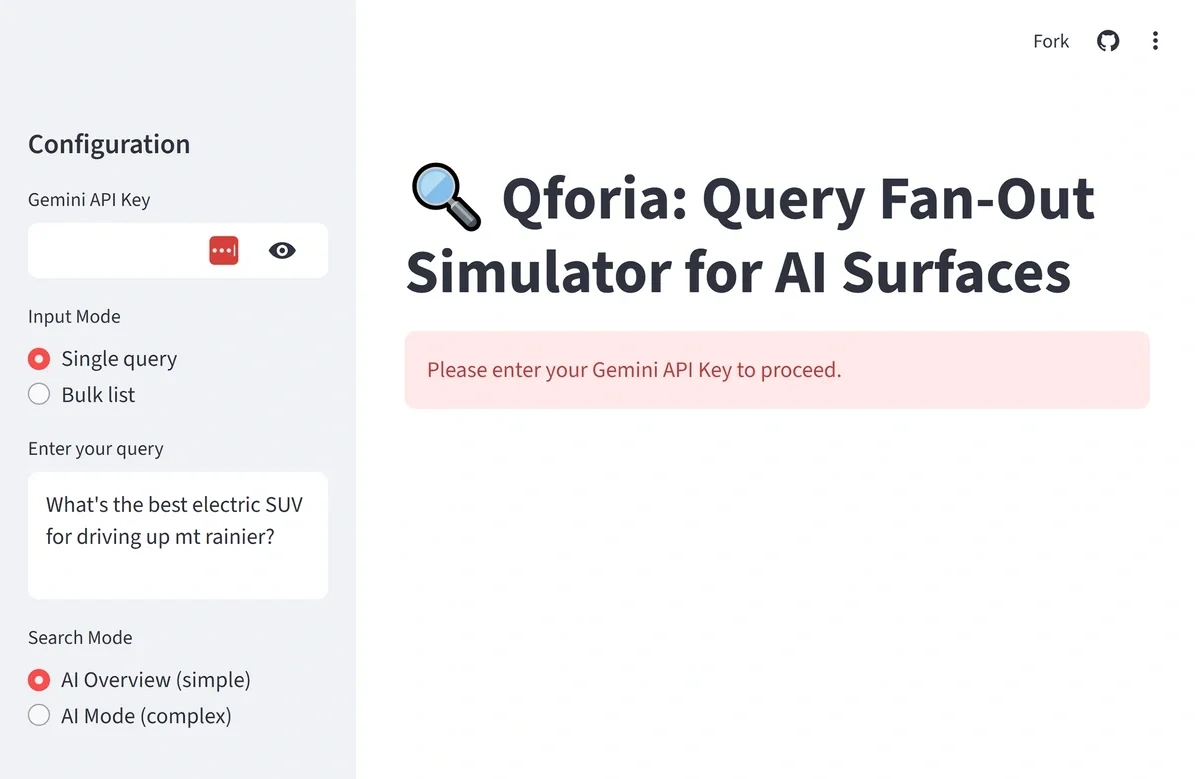

For researching query fan-outs, I recommend Mike King’s tool called Qforia.

The tool simulates sub-queries that AI tools like Google AI Mode are likely to run for your seed question.

It’s not 100% accurate, but it does a very good job of simulating the thought process of a typical LLM.

You can also just ask an AI tool like ChatGPT to suggest sub-queries for a given question. It will give you a reasonable list of suggestions.

Next, you need to incorporate these queries into your content effectively to benefit from increased LLM visibility.

Review the list of sub-queries when creating your content briefs and cluster them by intent and user persona.

Related queries don't each need separate pages. Instead, cluster them around a central topic with one pillar page and several sub-pages.

Create a pillar page covering your topic broadly, addressing relevant queries. Follow that up with several sub-pages for specific topics, each targeting clusters of long-tail queries relevant to the sub-topic.

This is the most logical, SEO-friendly way to establish your topical authority.

Once again, feel free to use AI to assist with the clustering process.
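As a starting point for that clustering step, here is a minimal Python sketch that groups fan-out sub-queries (for example, ones exported from Qforia or suggested by ChatGPT) by intent. The keyword rules and intent labels are hypothetical stand-ins; an LLM or embedding-based clustering would be more robust, but the output shape is the same: each cluster is a candidate sub-page under your pillar page.

```python
from collections import defaultdict

# Hypothetical intent rules: (intent label, trigger phrases), checked in order.
# Leading spaces make triggers match at word starts once the query is padded.
INTENT_RULES = [
    ("comparison", (" vs ", " versus ", " compare", " alternative")),
    ("best-of", (" best ", " top ")),
    ("how-to", (" how to ", " how do ", " guide")),
    ("tool-discovery", (" tool", " software", " app ")),
]


def cluster_by_intent(sub_queries: list[str]) -> dict[str, list[str]]:
    """Assign each sub-query to the first matching intent, else 'informational'."""
    clusters: dict[str, list[str]] = defaultdict(list)
    for query in sub_queries:
        padded = f" {query.lower()} "
        intent = next(
            (label for label, triggers in INTENT_RULES
             if any(trigger in padded for trigger in triggers)),
            "informational",
        )
        clusters[intent].append(query)
    return dict(clusters)


if __name__ == "__main__":
    # The example sub-queries from this section.
    fan_out = [
        "best software for predicting future trends",
        "trend forecasting tools for content strategists",
        "Google Trends vs Exploding Topics for market research",
    ]
    for intent, queries in cluster_by_intent(fan_out).items():
        print(intent, "->", queries)
```

Rule order matters here: a query like “best software…” lands in the best-of cluster before the tool-discovery rule is checked.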

Clusters of content based on AI query fan-outs allow you to address all possible questions your users might ask related to a topic.

Think of it as casting a wider net over the likely questions LLMs will want answered when chatting with your target audience.

Do this well, and you have a good chance of showing up when LLMs look up multiple sources across the web to answer the user's seed query.

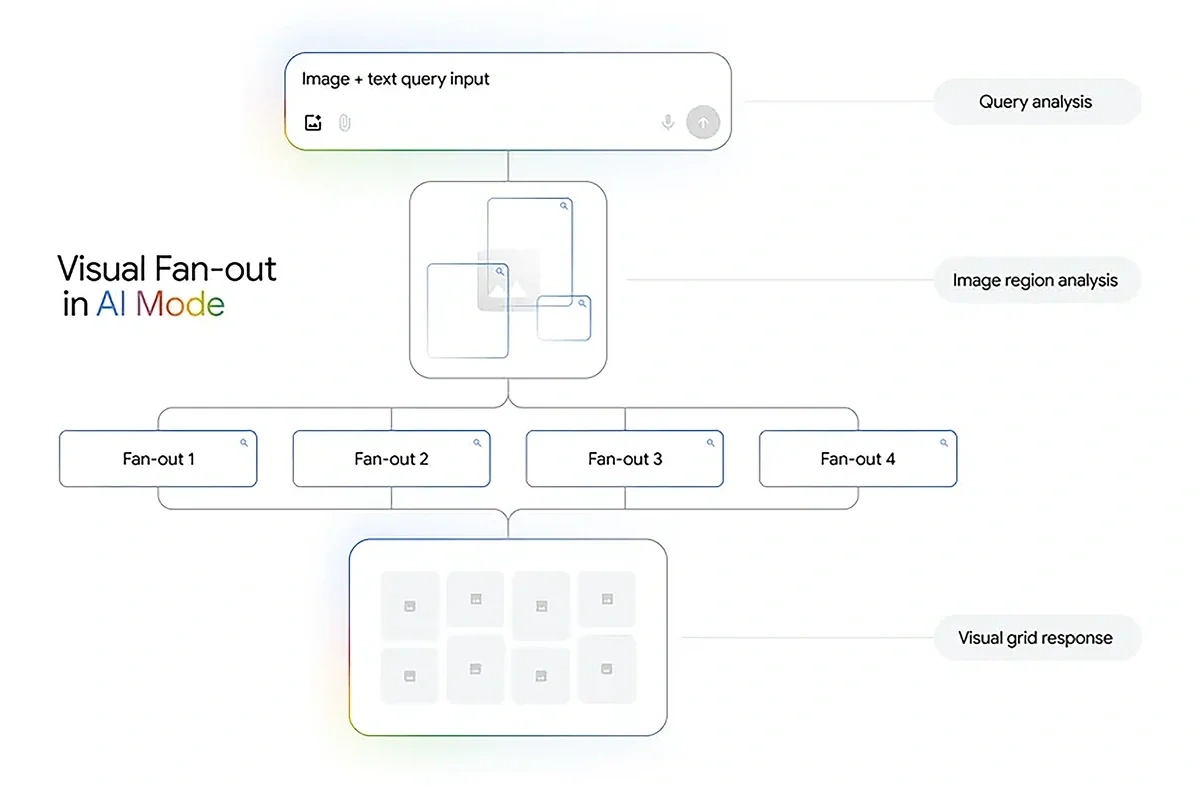

Image Optimization and Visual Fan-Out

Google has now extended the query fan-out approach it uses for text searches to visual searches.

This adds a new dimension to image optimization and raises the importance of contextually rich images.

It’s especially relevant for inspiration-styled content in the niches of lifestyle, art, and fashion, as well as for e-commerce stores serving shoppers through AI.

Here are a few optimization opportunities likely to emerge from visual fan-outs:

- Rich image metadata and alt text: Use natural, conversational language that matches how users would describe the scene. Add descriptors about mood, style, and specific product attributes to enrich context.

- Image quality matters more than ever: The emphasis on context means Google can analyze images to grasp subtle details. As a result, high-resolution, detailed imagery becomes more valuable.

- Product presentation in styled contexts: Don’t rely only on product images isolated on white backgrounds. Create images with complementary products/elements and a complete styling context.

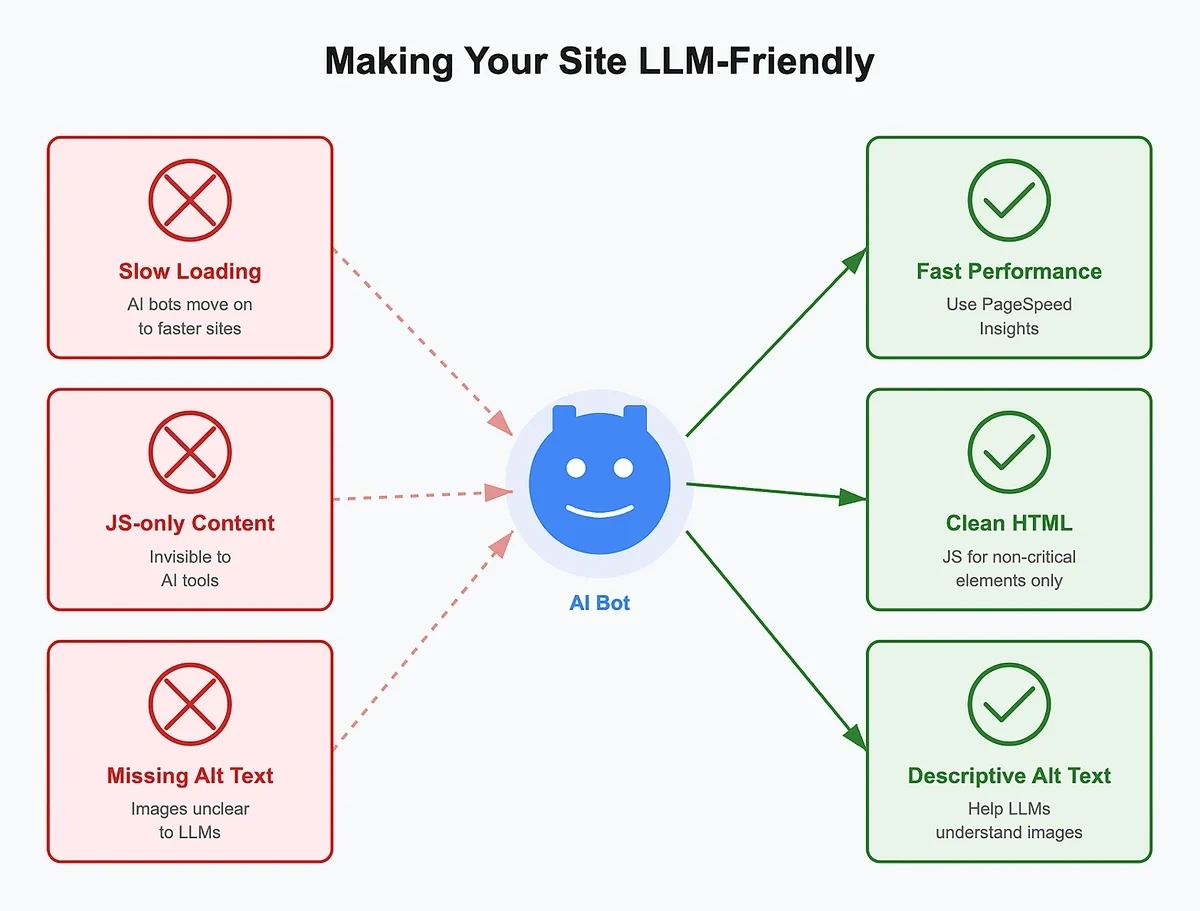

Technical Optimization for AI Discoverability

AI search engines favor content that’s easy for them to read, understand, and contextualize.

You can create original and high-quality content, but you’ll only be operating at half your potential if your content isn’t LLM-friendly.

A few technical optimization steps can make your content more machine-readable and boost discoverability.

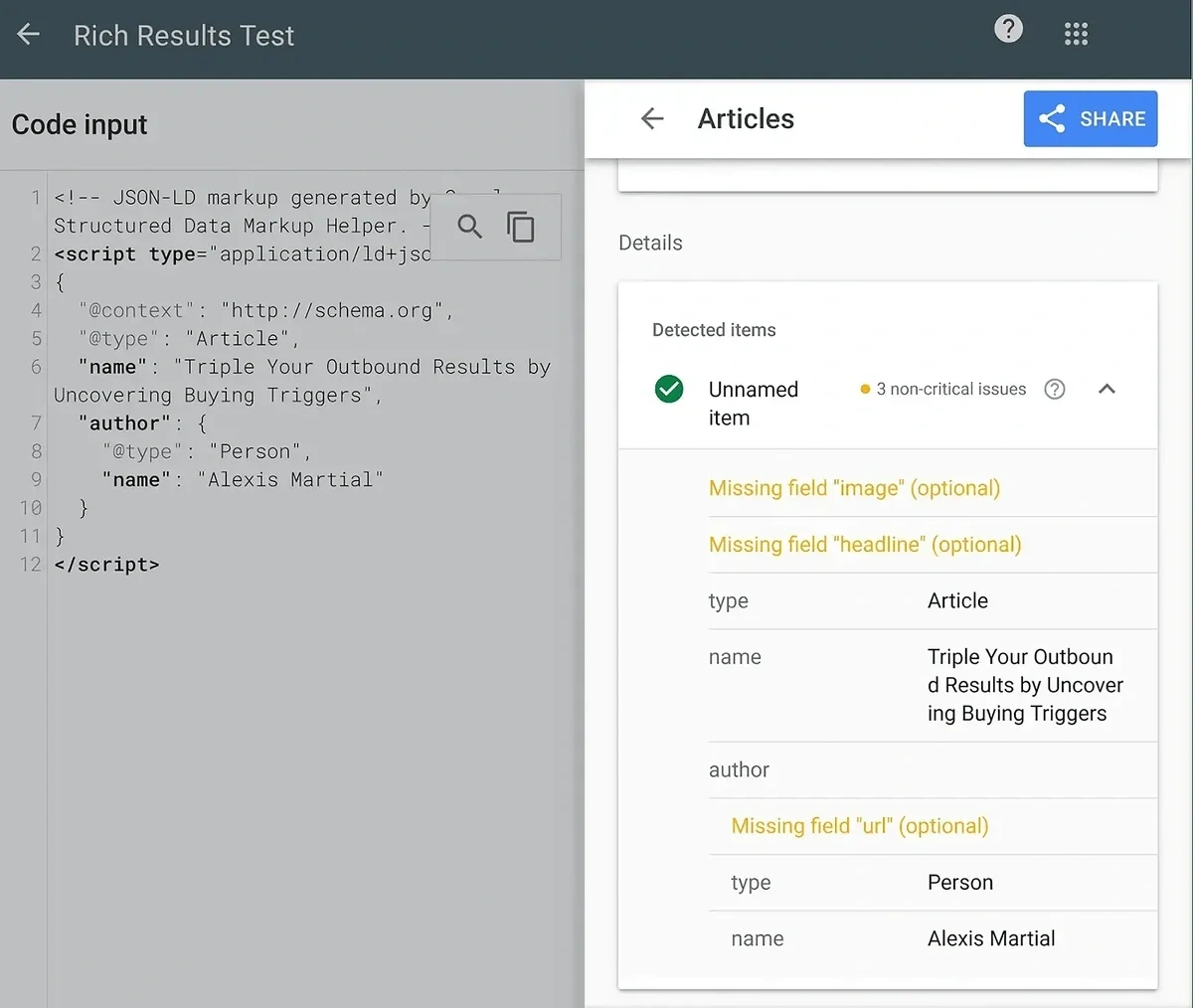

Appropriate Structured Data Implementation

Schema is a standard for adding specific details about your page in a way that search engines and LLMs can easily understand.

Search engines pull data directly from schema you incorporate into your pages to display rich snippets in search engine results.

But schema isn’t magic.

I’ve come across many inexperienced brands that simply treat schema like a box to tick, without caring about proper implementation.

What does good implementation look like?

First, you need to use the right schema for the right content type:

- Article for general blog posts and news content

- Review for product reviews and comparisons

- FAQPage for FAQ-like content

Second, complete the schema with the important fields and properties.

Technically, all schema properties are optional and can be left blank.

But many of these properties are practically essential for proper search engine understanding.

Recently, a small-scale study found a correlation between appearing in AI Overviews and good schema implementation.

The researchers were unable to get pages to appear in AI Overviews when they left schema properties incomplete or didn’t use them at all.

These correlations aren’t conclusive, but it makes theoretical sense that strong schema implementation should enable AI tools to use your content better.
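To illustrate “complete” schema, here is a minimal Python sketch that builds Article JSON-LD and reports which important fields were left empty. Which fields count as important is an assumption (the list mirrors properties Google recommends for Article markup, but check the current documentation), and the author name and URLs in the example are placeholders.

```python
import json

# Assumption: fields treated as "important" for Article markup. They mirror
# properties Google recommends for Article, but verify against current docs.
IMPORTANT_ARTICLE_FIELDS = ["headline", "author", "datePublished", "dateModified", "image"]


def build_article_jsonld(**fields) -> tuple[str, list[str]]:
    """Build an Article JSON-LD <script> tag and list important fields left empty."""
    data = {"@context": "https://schema.org", "@type": "Article"}
    # Drop empty values so blank properties are never shipped.
    data.update({key: value for key, value in fields.items() if value})
    missing = [name for name in IMPORTANT_ARTICLE_FIELDS if name not in data]
    script = (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )
    return script, missing


if __name__ == "__main__":
    tag, missing = build_article_jsonld(
        headline="How to Rank on AI Search Engines in 2025",
        # Placeholder author; link to a real bio page in practice.
        author={"@type": "Person", "name": "Jane Doe",
                "url": "https://example.com/authors/jane-doe"},
        datePublished="2025-06-01",
        image=None,
    )
    print(tag)
    print("Missing:", missing)
```

Returning the missing fields alongside the tag makes it easy to fail a publishing check instead of silently shipping half-filled markup.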

Rendering and Performance

This is a no-brainer.

LLM tools prioritize speed and accessibility. So if your page takes ages to load, the AI bots will simply move on.

It’s also a problem if your content depends on fancy JavaScript. Many AI crawlers don’t execute JavaScript, so content that only appears after client-side rendering may be invisible to them.

Here’s what you should do instead:

- Avoid JS-only rendering: Use a clean HTML structure for your important content and reserve JavaScript for non-critical elements of your site.

- Core Web Vitals: Improve your site performance and identify page loading issues with tools like PageSpeed Insights.

- Image optimization: AI tools don’t always “see” images accurately. Add descriptive alt text to help LLMs understand what your images are about.
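A quick way to approximate what a non-JavaScript crawler sees is to inspect the HTML exactly as your server returns it. The Python sketch below counts visible words outside `<script>`, `<style>`, and `<template>` and lists images without alt text. It assumes you fetch the raw HTML yourself (for example with any HTTP client), and the 150-word threshold for “likely JS-dependent” is an arbitrary assumption.

```python
from html.parser import HTMLParser


class RawHtmlAudit(HTMLParser):
    """Inspects HTML as served, i.e. before any JavaScript runs."""

    SKIP_TAGS = {"script", "style", "template"}

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0
        self.word_count = 0
        self.images_missing_alt: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self.skip_depth += 1
        elif tag == "img":
            attr_map = dict(attrs)
            # Bare attributes (e.g. <img alt>) come through with a None value.
            if not (attr_map.get("alt") or "").strip():
                self.images_missing_alt.append(attr_map.get("src") or "(no src)")

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        # Count only text a non-JS crawler would find in the served HTML.
        if not self.skip_depth:
            self.word_count += len(data.split())


def audit_raw_html(html: str, min_words: int = 150) -> dict:
    """Flag pages whose served HTML holds little text, plus images without alt text."""
    parser = RawHtmlAudit()
    parser.feed(html)
    parser.close()
    return {
        "word_count": parser.word_count,
        "likely_js_dependent": parser.word_count < min_words,
        "images_missing_alt": parser.images_missing_alt,
    }
```

A single-page app shell such as `<div id="root"></div>` plus a script tag scores near zero words, which is exactly the pattern to investigate.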

The Semrush SEO Audit tool surfaces site-level issues that could be harming your performance.

This tool lets you move fast by identifying key problems and fixing the technical foundations of your site.

LLM-Friendly Crawl Directives

AI crawlers need access to your content in order to cite it or use it as training data.

Review your website’s robots.txt file and make sure you aren’t disallowing the AI crawlers you want to reach your content. It might also make sense to explicitly allow them for good measure.

Here are the crawler bots for popular LLMs you should set proper robots.txt rules for:

- OAI-SearchBot

- ChatGPT-User

- PerplexityBot

- Claude-SearchBot

- Claude-User

- Perplexity-User
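Before relying on manual review, you can test your rules with Python’s standard `urllib.robotparser` module. The sketch below reports which of the crawlers listed above a robots.txt would block for a given URL. Note that the standard-library parser matches user agents by simple substring and paths by prefix, which may not match how each AI company interprets the file, so treat the result as a first check.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens listed in this guide.
AI_CRAWLERS = [
    "OAI-SearchBot",
    "ChatGPT-User",
    "PerplexityBot",
    "Perplexity-User",
    "Claude-SearchBot",
    "Claude-User",
]


def blocked_ai_crawlers(robots_txt: str, url: str) -> list[str]:
    """Return the AI crawler user agents that robots_txt disallows for url."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network fetch is needed.
    parser.parse(robots_txt.splitlines())
    return [bot for bot in AI_CRAWLERS if not parser.can_fetch(bot, url)]


if __name__ == "__main__":
    sample = """\
User-agent: PerplexityBot
Disallow: /

User-agent: *
Disallow: /admin/
"""
    print(blocked_ai_crawlers(sample, "https://example.com/blog/post"))
```

In the sample, only PerplexityBot is blocked from the blog post; every other crawler falls through to the `*` group, which only disallows `/admin/`.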

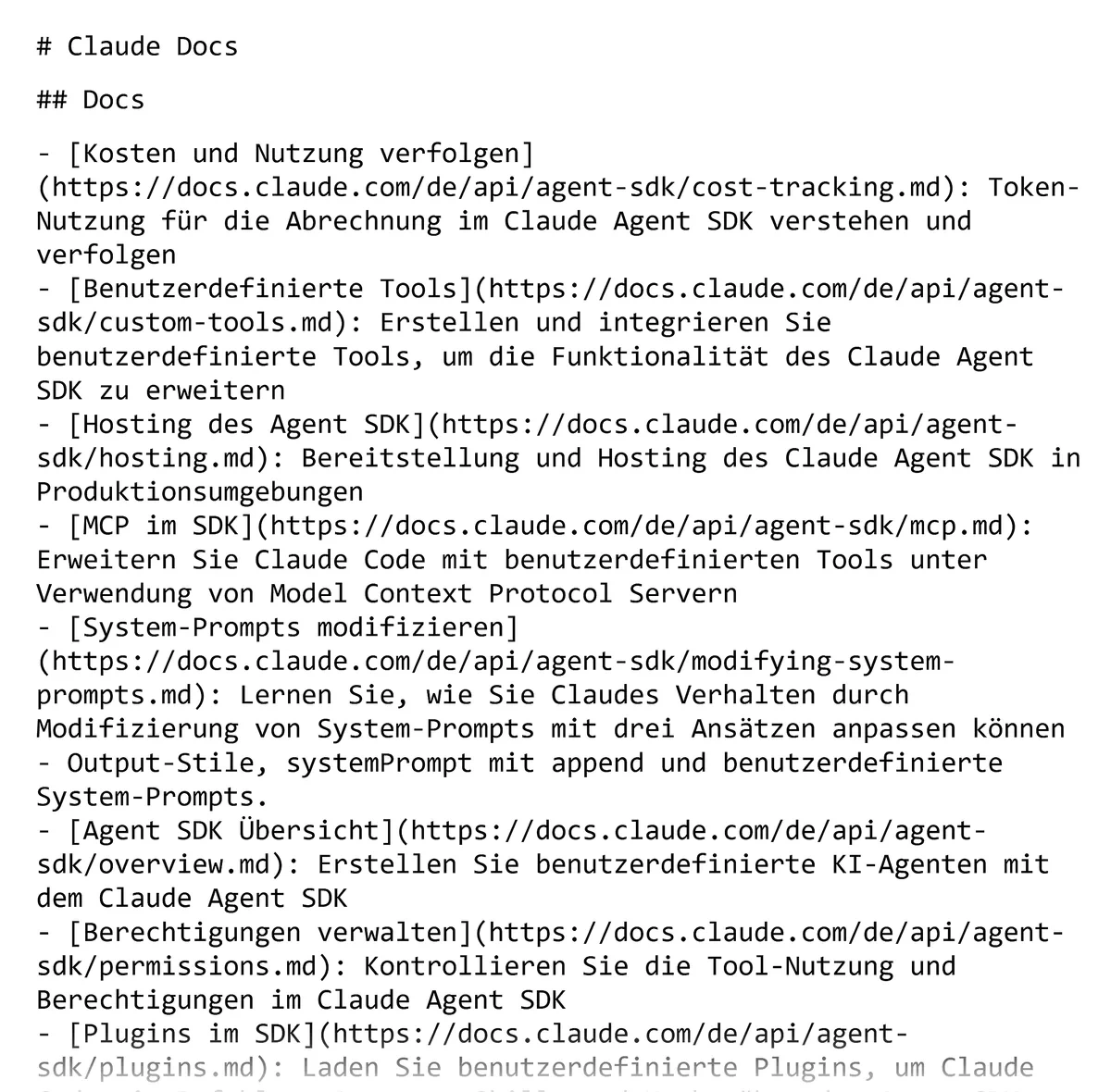

Should you use llms.txt?

llms.txt is a proposed standard intended to give LLMs a more easily readable version of your website.

The idea is simple: instead of forcing an LLM to translate your complex page into a machine-readable state, you provide that info yourself in a simple markdown file.

The llms.txt standard is only a proposal at this stage. We can’t say for sure if it will be adopted by AI systems and become as normalized as a sitemap is.

That said, it will take you no time to generate an llms.txt file yourself or with an AI tool. So you might as well add one now, in case the protocol sees wider adoption.
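If you’d rather script it than write it by hand, here is a minimal Python sketch that renders an llms.txt-style file. It follows the structure described in the llms.txt proposal as I understand it (an H1 with the site name, a blockquote summary, then H2 sections containing markdown link lists), so verify it against the current proposal. The site name, summary, and URLs are placeholders.

```python
def build_llms_txt(site_name: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render llms.txt-style markdown: H1 name, blockquote summary, H2 link lists."""
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for section_title, links in sections.items():
        lines += [f"## {section_title}", ""]
        for title, url, note in links:
            # Each entry is a markdown link, optionally followed by ": note".
            lines.append(f"- [{title}]({url}): {note}" if note else f"- [{title}]({url})")
        lines.append("")
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder content; replace with your own key pages.
    print(build_llms_txt(
        "Example Site",
        "Hypothetical site publishing trend research and marketing calculators.",
        {
            "Guides": [("LLM optimization guide", "https://example.com/llm-guide",
                        "How to earn AI citations")],
            "Tools": [("Content freshness calculator", "https://example.com/calculator", "")],
        },
    ))
```

Keeping the page list in code means the file can be regenerated whenever you publish or retire a key page.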

Strategic Internal Linking

Conventional internal linking wisdom applies to LLMs just as it does to classic SEO.

Even though LLMs don’t browse your content like traditional search engines do, the presence of internal links can help LLMs gauge your topical depth when trained on your data.

Besides, AI agents directly interact with your web page like a human user would. Internal links have navigational value for these agents as they explore your site.

Use the pillar-to-subtopic interlinking strategy to connect related pages together.

This offers the best experience for users and AI agents, and improves contextual understanding for LLMs.

Similarly, follow linking best practices such as:

- Clean up redirect chains: Avoid complicated redirect chains. AI agents prioritize speed, and a long chain of redirects can put the destination page out of an agent’s reach.

- Fix orphaned pages: Ensure you don’t have any important pages without any internal links pointing to them. This can cause indexing issues in Google and put an important page outside of an LLM/AI agent’s radar.

- Correct broken links: Update any internal links pointing to deleted content. For 404 pages, offer links to related content to assist AI agents in finding their way.
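The three checks above can be sketched in Python once you have a crawl of your site. This sketch assumes a hypothetical export format: a dict mapping each live page path to the internal paths it links to, plus a dict of redirects (source to destination). Treating the homepage as the only entry point is also an assumption; add sitemap-only landing pages if you have them.

```python
def find_orphans(link_graph: dict[str, list[str]], entry_points=("/",)) -> list[str]:
    """Pages with no internal links pointing to them (self-links don't count)."""
    linked = {target for source, targets in link_graph.items()
              for target in targets if target != source}
    return sorted(p for p in link_graph if p not in linked and p not in entry_points)


def find_broken_links(link_graph: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Internal links whose target is not a known live page."""
    return sorted((source, target) for source, targets in link_graph.items()
                  for target in targets if target not in link_graph)


def redirect_chain(start: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Follow redirects from start; stops at the final URL, a loop, or max_hops."""
    chain = [start]
    while chain[-1] in redirects and len(chain) <= max_hops:
        nxt = redirects[chain[-1]]
        chain.append(nxt)
        if chain.count(nxt) > 1:  # redirect loop detected
            break
    return chain


if __name__ == "__main__":
    # Hypothetical pillar/sub-page site.
    site = {
        "/": ["/pillar"],
        "/pillar": ["/sub-a", "/deleted-post"],
        "/sub-a": ["/pillar"],
        "/sub-b": [],
    }
    print("Orphans:", find_orphans(site))
    print("Broken:", find_broken_links(site))
    print("Chain:", redirect_chain("/old", {"/old": "/older", "/older": "/new"}))
```

Any chain longer than two entries (one hop) is a candidate for pointing the original URL straight at its final destination.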

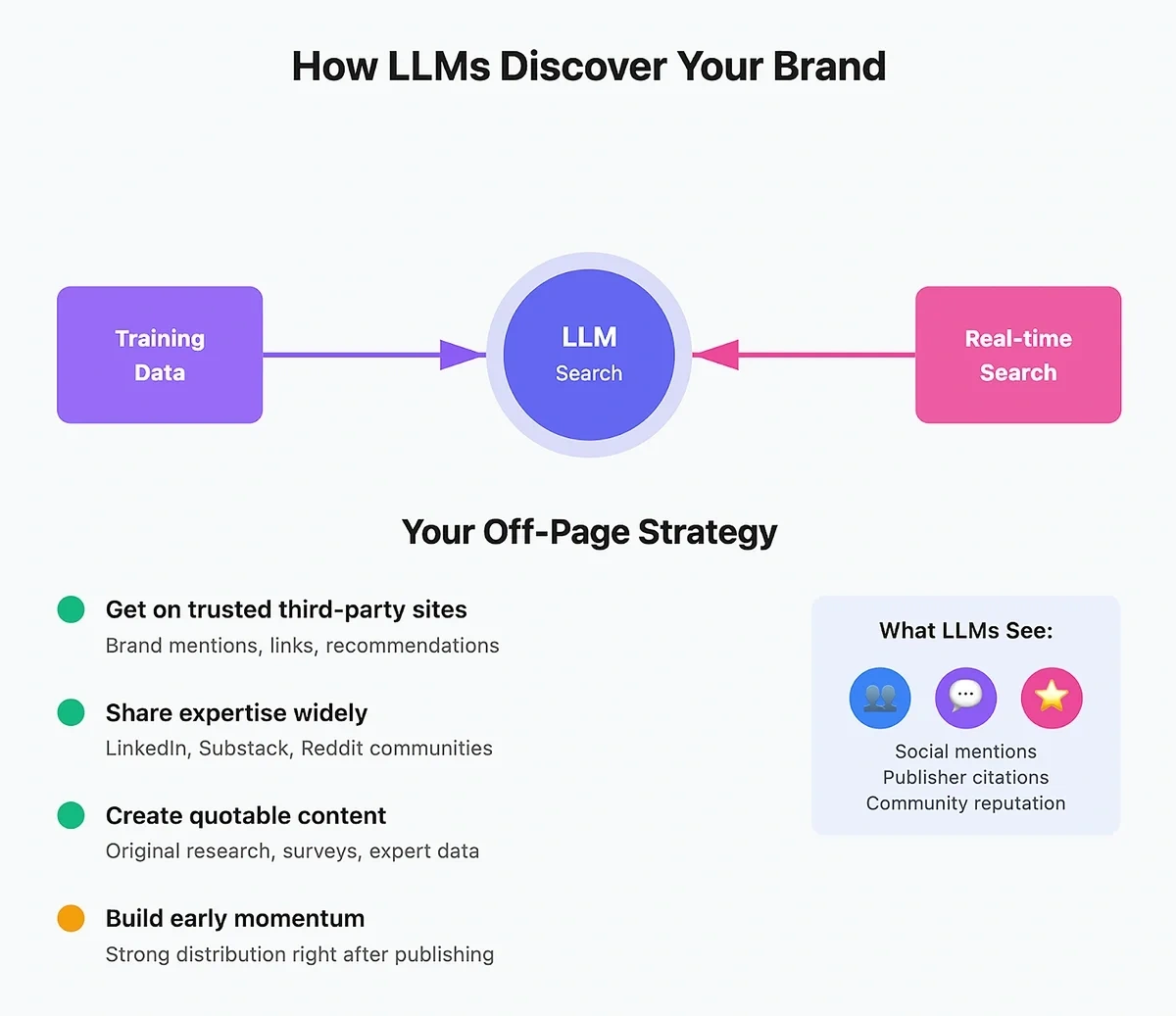

AI Citations, Recommendations, and Digital PR

Beyond on-page content and technical factors, your website’s off-page marketing matters a great deal for LLMs.

Most AI tools combine their training data with real-time search to form a view of your brand.

That means they’re exposed to what people say about you on social media and whether you’re recommended by other publishers and websites that matter to LLMs.

So you can make your way into an LLM’s recommendations and citations if you’re featured prominently and positively on third-party websites that LLMs trust. In other words, digital PR is a big part of SEO in the AI era.

What you can do:

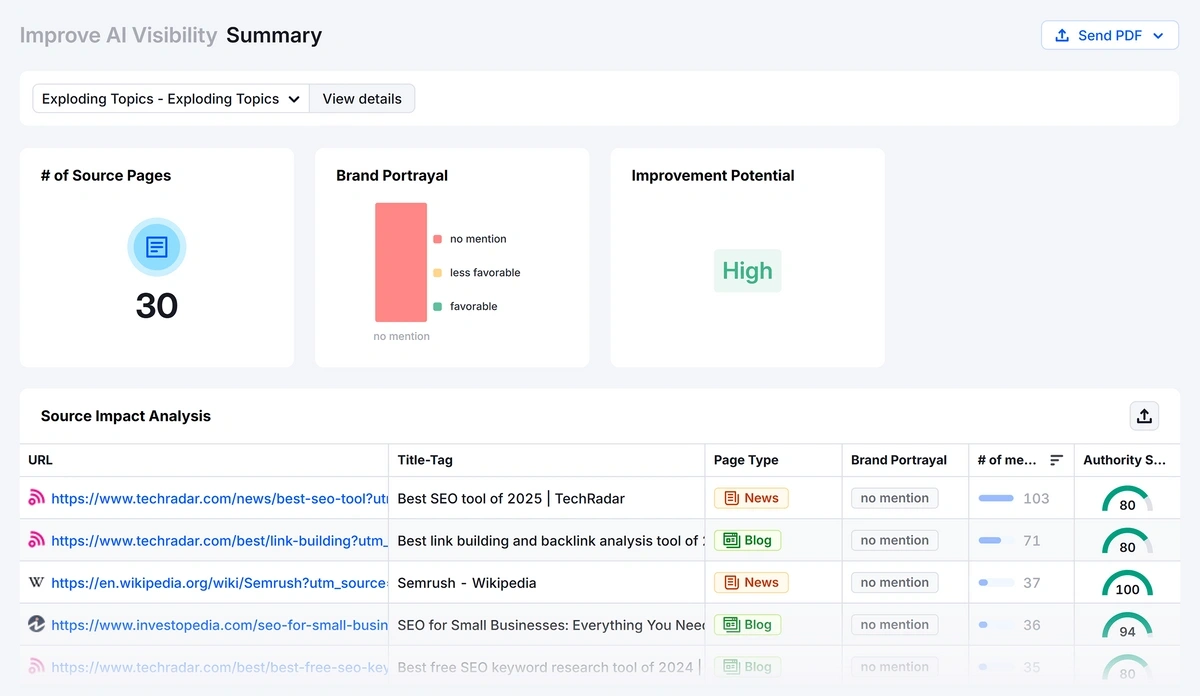

- Reach out to notable platforms: Every industry has prominent publishers and sites with influence. Use the Source Impact Analysis feature of Semrush Enterprise AIO to discover impactful publishers. Pursue opportunities for brand mentions, recommendations, and link placements on these sites to increase your exposure to AI search engines as well as the general audience.

- Contribute opinion pieces: Use platforms like LinkedIn and Substack to share your expertise and thought leadership. Distribute insightful content across communities like Reddit to multiply your visibility.

- Create link assets and quotable content: Pitch high-quality, original content from your site as a source that journalists and other authors can use in their content. Surveys, first-hand research, and expert interviews are all useful information sources that can naturally earn mentions and links.

- Build early momentum: AI search platforms like Perplexity include parameters like “new_post_impression_threshold” and “new_post_ctr”. This suggests that strong distribution immediately after publishing content is critical for winning AI visibility.

LLMs likely give preferential treatment to a fixed, curated list of sources they consider “authoritative”. Theoretically, having your brand mentioned in these domains significantly enhances your AI visibility. Some of these domains include:

- GitHub

- Amazon

- Dev.to

- Stack Overflow

- w3schools.com

Measuring and Maintaining LLM Visibility

As is the case with SEO, AI search optimization strategies should always be rooted in measurement.

The challenge is that LLMs don’t lend themselves to tracking as simply as conventional SEO does.

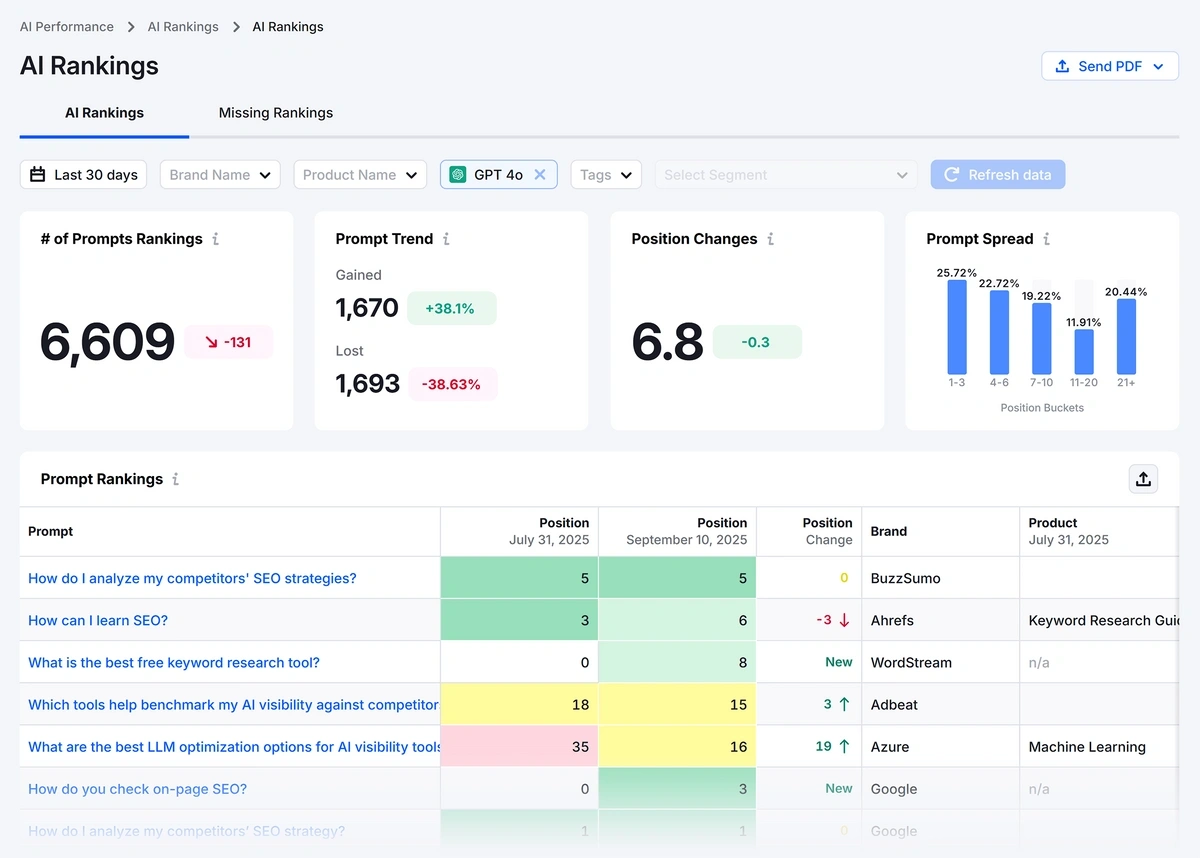

That said, top SEO tools like Semrush now offer toolkits for AI search monitoring that offer practical insights.

You can even get an instant overview using the free AI visibility checker from Exploding Topics.

AI Search Metrics to Track

Semrush Enterprise AIO tracks several important metrics that tell you the story about your LLM performance.

Does your brand have a dominating presence in AI answers? Or is it dwarfed by your competitors?

You can gauge your visibility in relation to your competitors in AI responses with the Share of Voice (SoV) metric.

Semrush reports SoV as a percentage. The closer you are to 100%, the more frequently you’re appearing in relevant, non-branded prompts analyzed for your industry.
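Semrush’s exact SoV formula isn’t described here, but a simple version helps build intuition. The Python sketch below assumes you have collected AI responses to a fixed set of prompts yourself, counts each brand at most once per response using naive substring matching, and reports each brand’s share of all brand appearances. The brand “TrendCo” and the sample responses are hypothetical.

```python
from collections import Counter


def share_of_voice(responses: list[str], brands: list[str]) -> dict[str, float]:
    """Each brand's share (%) of all brand appearances across AI responses."""
    appearances = Counter()
    for text in responses:
        lowered = text.lower()
        for brand in brands:
            # Count a brand at most once per response (naive substring match).
            if brand.lower() in lowered:
                appearances[brand] += 1
    total = sum(appearances.values())
    return {b: round(100 * appearances[b] / total, 1) if total else 0.0 for b in brands}


if __name__ == "__main__":
    # Hypothetical responses collected for a fixed, non-branded prompt set.
    responses = [
        "Try Exploding Topics or Google Trends.",
        "Google Trends is free.",
        "Exploding Topics spots trends early.",
    ]
    print(share_of_voice(responses, ["Exploding Topics", "Google Trends", "TrendCo"]))
```

Re-running the same prompt set over time is what makes the number comparable from one week to the next.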

Additionally, I recommend tracking these LLM visibility metrics to get the full picture about your performance:

AI Mentions

Tracking the raw count for your mentions in AI responses allows you to observe changes in AI visibility and measure the impact of your optimizations over time.

Every time you launch a PR campaign for off-site mentions or create content addressing relevant prompts, keep an eye on your AI mentions to evaluate if your tactics are working for you.

Pro Tip: Semrush Enterprise AIO tracks your performance for all major AI models separately. Use this granular tracking to your advantage to identify which AI platforms are working well for you and which ones need stronger optimization.

AI Citations

An AI citation occurs when you’re linked as a source in an AI response. This is different from a brand mention, where you’re referenced without a link pointing back to you.

You need to claim presence in both citations and mentions to stay visible across AI surfaces.
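If you log AI answers yourself, the citation/mention distinction is easy to encode. This Python sketch labels an answer as a “citation” when it links to your domain (or a subdomain), a “mention” when it names your brand without linking, and “absent” otherwise. The URL regex is a rough assumption for plain-text answers; answers with HTML or footnote-style source lists would need different parsing.

```python
import re
from urllib.parse import urlparse

# Rough URL matcher for plain-text AI answers (an assumption, not exhaustive).
URL_PATTERN = re.compile(r"https?://[^\s)\]>\"']+")


def classify_visibility(answer: str, brand: str, domain: str) -> str:
    """Return 'citation' (linked), 'mention' (named, no link) or 'absent'."""
    domain = domain.lower()
    for url in URL_PATTERN.findall(answer):
        # Trim trailing punctuation that often follows a URL in prose.
        host = urlparse(url.rstrip(".,;:")).hostname or ""
        if host == domain or host.endswith("." + domain):
            return "citation"
    if brand.lower() in answer.lower():
        return "mention"
    return "absent"
```

Tallying these labels per AI platform gives you the citations vs mentions split described above.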

Semrush Enterprise AIO tracks how frequently AI tools link to your content as an answer source.

You can also identify the external sources that AI is using to recommend your brand.

Some sources play a greater role in influencing AI answers than others.

The Semrush Source Impact analysis is great for discovering the top authoritative sources that AI is referencing when recommending your brand.

The tool also analyzes the sentiment of sources that mention your brand.

So you’ll know whether a website positions you well or could be hurting sentiment around your brand with an unfavorable portrayal.

Details like these are excellent because they help guide your next steps.

For example, you can reach out to websites to update how they describe your brand, and prioritize strong sources that are visible to AI.

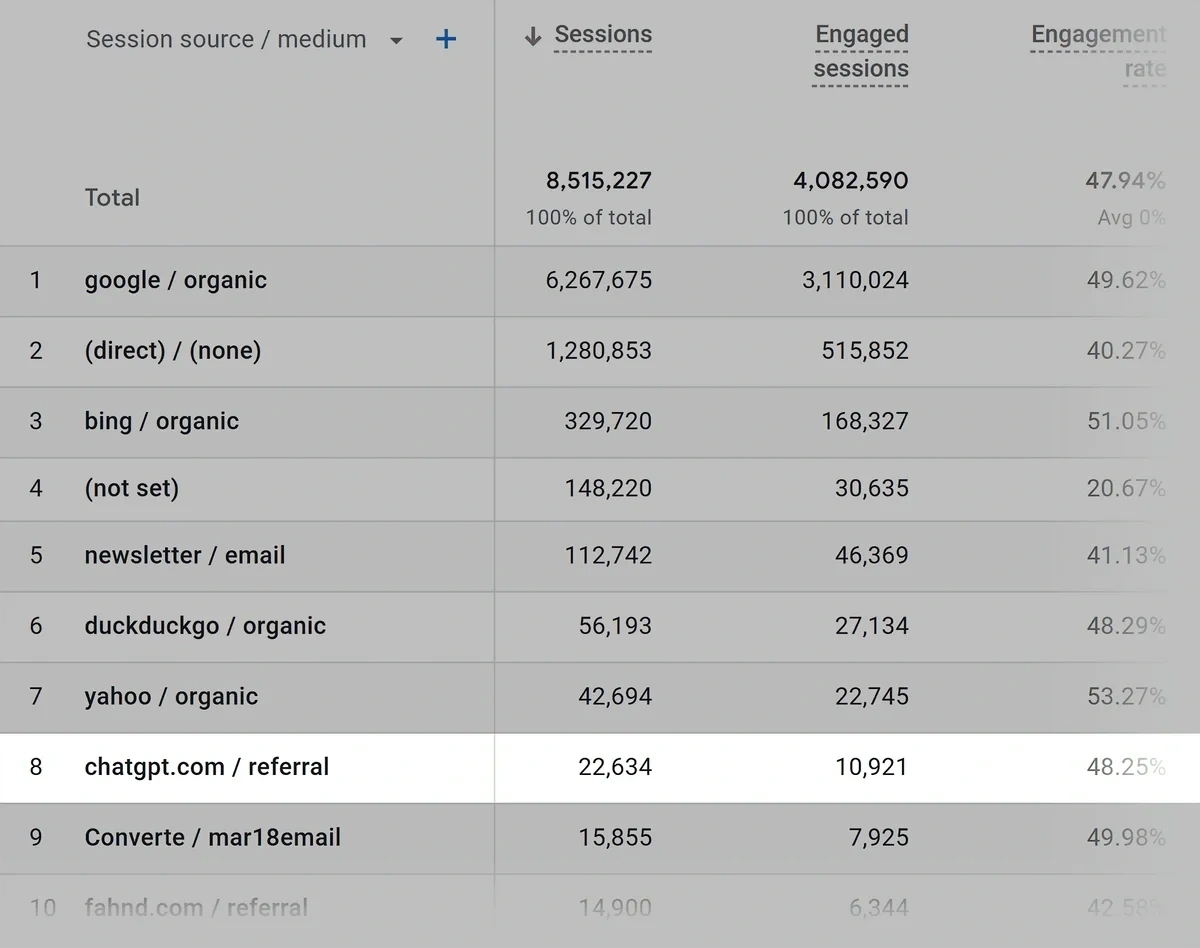

AI Referral Traffic and Conversions

Visibility from AI doesn’t always translate to actual website visits.

To track traffic from different AI platforms, you can use Google Analytics.

At this time, GA4 doesn’t separate traffic from AI tools into its own channel by default. You need to set up a custom channel for the AI platforms you care about (it’s not that complicated).

With the channel set up, you can track all metrics for AI platforms, including key events or conversions.

This way, you’ll know for sure if your AI search visibility is contributing to meaningful events on your site.
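The core of that custom channel is usually a regex condition on the session source. The Python sketch below shows one such pattern and a helper to test it against your own source values. The hostname list is an assumption covering a few major AI assistants and will need maintenance. GA4’s regex matching may not behave exactly like Python’s `re.search`, so test the pattern in GA4 as well. GA4 also evaluates channels in order, so the AI channel generally needs to sit above Referral.

```python
import re

# Assumption: referral hostnames for a few major AI assistants at the time of
# writing. The list is incomplete and changes; review it against your own data.
AI_SOURCE_REGEX = (
    r"chatgpt\.com|chat\.openai\.com|perplexity\.ai|claude\.ai"
    r"|gemini\.google\.com|copilot\.microsoft\.com"
)
_AI_SOURCE = re.compile(AI_SOURCE_REGEX, re.IGNORECASE)


def is_ai_referral(session_source: str) -> bool:
    """True if a GA4-style session source looks like an AI platform."""
    return _AI_SOURCE.search(session_source) is not None


if __name__ == "__main__":
    for source in ["chatgpt.com", "www.perplexity.ai", "google", "news.ycombinator.com"]:
        print(source, is_ai_referral(source))
```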

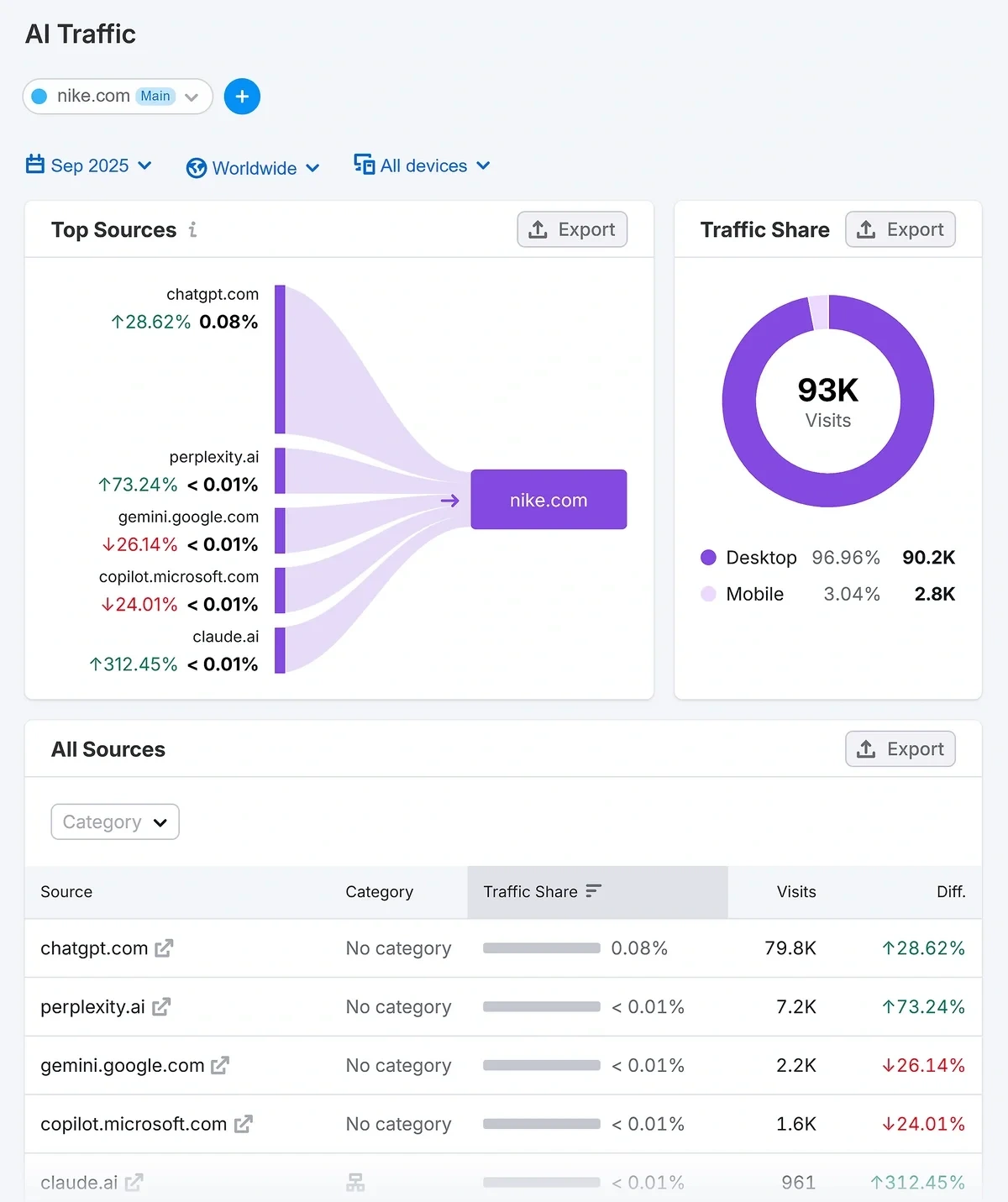

For a wider context, it helps to see how much traffic your competitors are generating from AI sources.

The Semrush AI traffic dashboard estimates traffic distribution by source for your domain along with competitors.

Look for the domains getting the lion’s share of AI traffic and analyze their content strategy for ideas. The goal is to claim a prominent place in the traffic distribution so your AI visibility holds up over time.

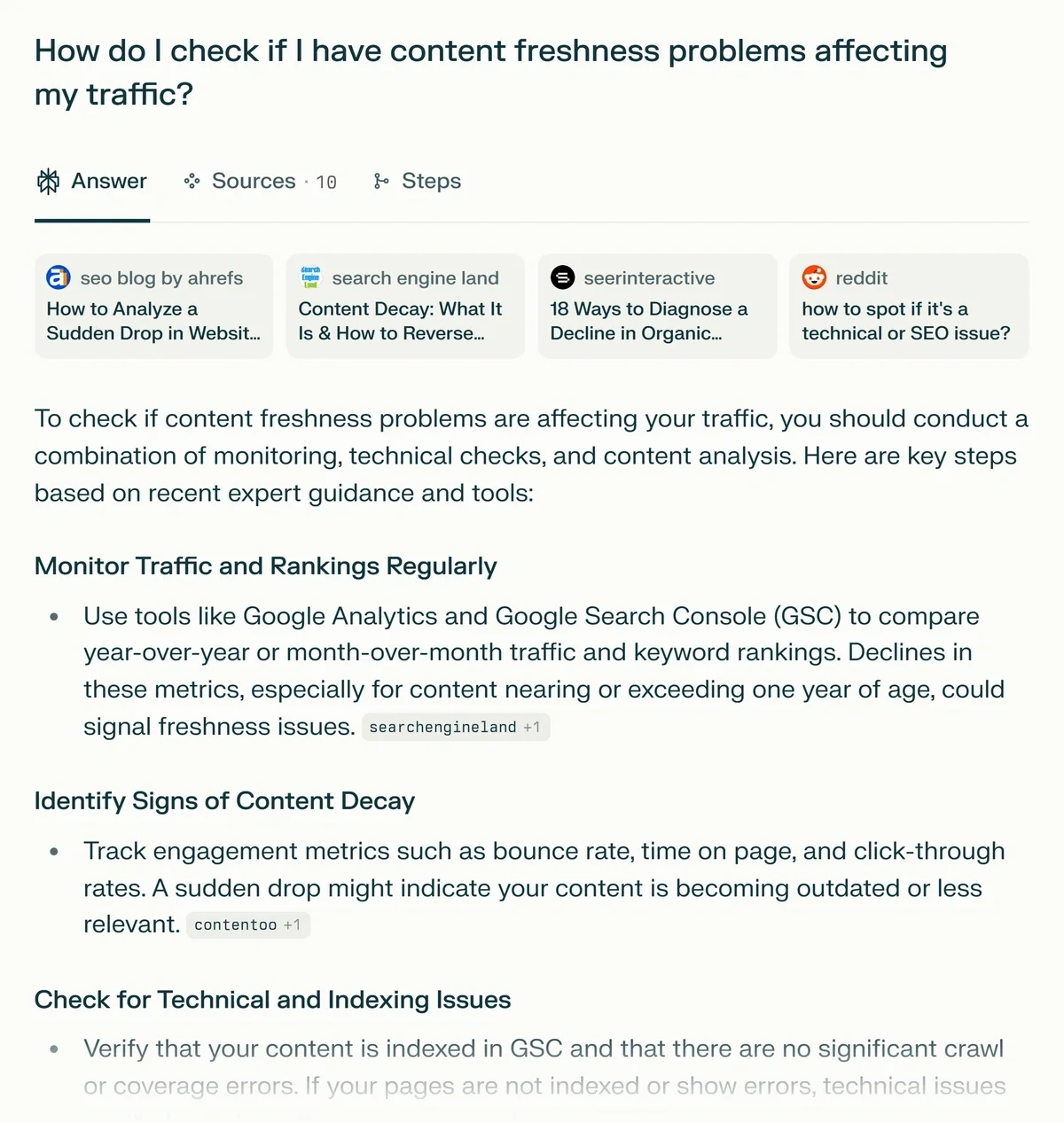

Content Freshness Is Key for AI Visibility

Maintaining fresh content is one of the top generative engine optimization strategies for AI visibility. That’s because AI search engines favor fresh content in much the same way classic Google SERPs do.

When you think about it, the preference for fresh content in SEO is a no-brainer:

People want to consume the latest content, and search engines mirror this inclination. It’s as simple as that.


New research is continuing to find strong evidence in favor of content freshness being a factor that AI algorithms use to choose and rank content in their answers.

Perplexity uses a “time_decay_rate” parameter in its Discover feed.

Perplexity likely ignores articles that have decayed beyond a certain threshold, depending on the nature of the user’s query.

Your best chance of making it into Perplexity’s Discover feed is when your content is newly published. The pattern is similar to Google Discover rankings.

It’s also a reminder to update content regularly to slow down decay, especially if it’s a time-sensitive topic or news with a short life.
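How Perplexity actually applies `time_decay_rate` isn’t public, but exponential decay is a common way such a parameter could work. The Python sketch below is a hypothetical model: the score shrinks with age, content below a cut-off is ignored, and a substantive update resets the age. The decay rate, the threshold, and treating the last update date as the age anchor are all assumptions for illustration.

```python
import math
from datetime import date


def decayed_score(relevance: float, last_updated: date, today: date,
                  time_decay_rate: float = 0.05) -> float:
    """Hypothetical exponential time decay: score halves every ln(2)/rate days."""
    age_days = max((today - last_updated).days, 0)
    return relevance * math.exp(-time_decay_rate * age_days)


def still_eligible(score: float, threshold: float = 0.25) -> bool:
    """Hypothetical cut-off below which decayed content is ignored."""
    return score >= threshold


if __name__ == "__main__":
    today = date(2025, 7, 1)
    stale = decayed_score(1.0, date(2025, 4, 1), today)       # 91 days old
    refreshed = decayed_score(1.0, date(2025, 6, 28), today)  # updated 3 days ago
    print(round(stale, 3), still_eligible(stale))
    print(round(refreshed, 3), still_eligible(refreshed))
```

Under this model, two equally relevant pages differ only in age, which is why regular updates matter most for time-sensitive topics.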

Perplexity isn’t alone in leaning toward recency in content.

ChatGPT’s source code includes an interesting line: “use_freshness_scoring_profile”. It’s a strong hint that ChatGPT prioritizes new content too.

In fact, a recent study supports this. Researchers injected fake publication dates into passages.

They found that newer passages consistently outrank older ones in all the LLMs they investigated (ChatGPT, Llama, and Qwen).

The bias is so strong that LLMs can flip their ranking decisions based on timestamps alone.

When an LLM originally preferred one of two equally relevant passages, adding dates made it switch to the newer passage about 25% of the time.

The strategy implication is clear: update often and maintain freshness.

High-quality content with recent information (and fresh timestamps to validate it) is a powerful combination for winning competitive advantage in AI visibility.

Dominate AI Search Presence Now

The first crucial step in optimizing for AI search engine rankings is to get the lay of the land.

This was relatively easy when you had only Google’s top 10 blue links to contend with.

The personalized nature of every unique AI conversation prevents that.

You will need a comprehensive tool to track rankings by running hundreds of queries across different LLMs.

This is why a high-quality analysis tool matters even more for LLM optimization than it does for traditional SEO.

Use Semrush Enterprise AIO to find where you stand in AI answers compared to competitors and create a strategy based on real data.

Exploding Topics is owned by Semrush. Our mission is to provide accurate data and expert insights on emerging trends. Unless otherwise noted, this page’s content was written by either an employee or a paid contractor of Semrush Inc.

Written By

Osama is an experienced writer and SEO strategist at Exploding Topics. He brings over 8 years of digital marketing experience, spe...