73% Fear Their AI Prompts Going Public, But Most Don’t Know It’s Already Happening

Nearly three-quarters of AI users fear their prompts could be exposed. Yet most have no idea it’s already possible.

In the third installment of a series of consumer AI surveys, Exploding Topics has taken a deep dive into the issue of privacy, and what users are willing to share with artificial intelligence.

The results have unearthed significant fears about AI privacy, including data breaches, the sale of personal data, prompts being made public, and government access to personal data.

But users’ understanding of the current state of privacy risks is far from complete. And even when they are made aware of existing dangers, only 28.35% express an intention to reduce their AI usage.

Our original research also reveals new insights into the most trusted AI companies, attitudes toward employer AI tools, and the extent to which users trust AI with confidential or personal information.

Fast facts

- Prompt privacy: 73% of AI users are at least somewhat concerned about their prompts being made public

- AI Mode risks: Only around 4 in 10 users know that Google AI Mode prompts are visible to website owners

- Data security: 63.04% of AI users fear a data breach

- Privacy assumptions: 39.97% of users suspect companies may save or review conversations with AI chatbots

- Sharing secrets: 52.13% of respondents have told AI something they would not tell a human

- Workplace attitudes: Just 22.2% of workplace AI users are uncomfortable at the prospect of employer oversight

- Proceed with caution: 65.8% of people will not reduce AI usage in the face of privacy concerns, though they may be more careful

- Favored companies: People trust Google the most to respect their privacy, while DeepSeek fares worst

The basics: How many people are using AI?

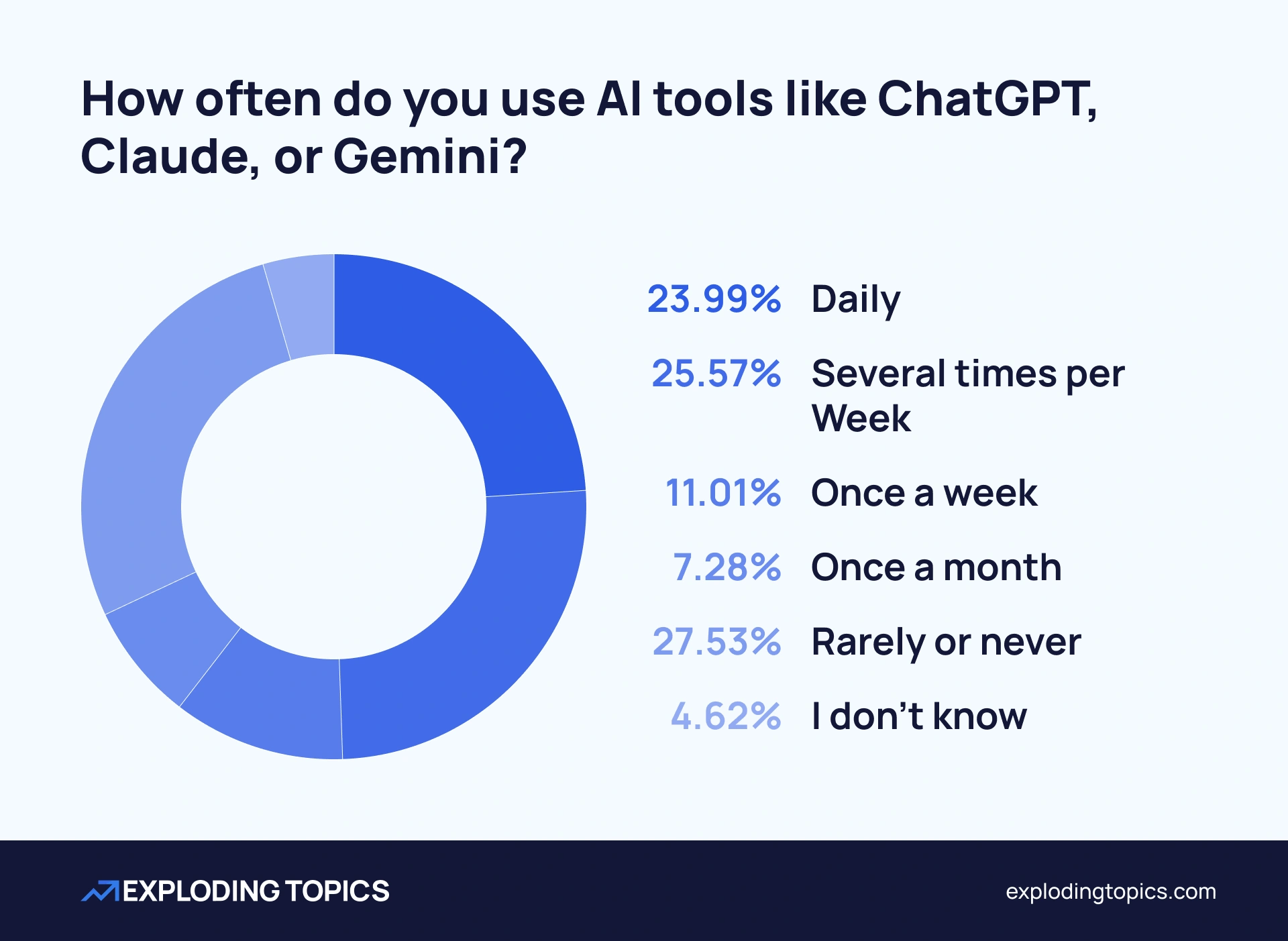

This was a survey of how AI users feel about their privacy. So we first had to assess how many of our 1,000+ respondents actually used AI tools.

This in itself provided some interesting data. 27.53% of respondents reported “rarely” (less than once per month) or “never” using AI tools like ChatGPT, Claude, or Gemini.

This group was excluded from the remainder of the survey. But it’s possible that some of these non-users are “conscientious objectors” on privacy grounds: a recent BYU study found that privacy risks accounted for 16.5% of generative AI “non-use”.

In our study, women (33.59%) were more likely than men (21.40%) to report rarely or never using AI tools. Men were also significantly more likely to report daily usage.

Meanwhile, AI usage declined with age. 59.76% of over-60s reported “rarely” or “never” using AI tools, compared to just 10.7% of respondents aged 18-29.

AI privacy in the news

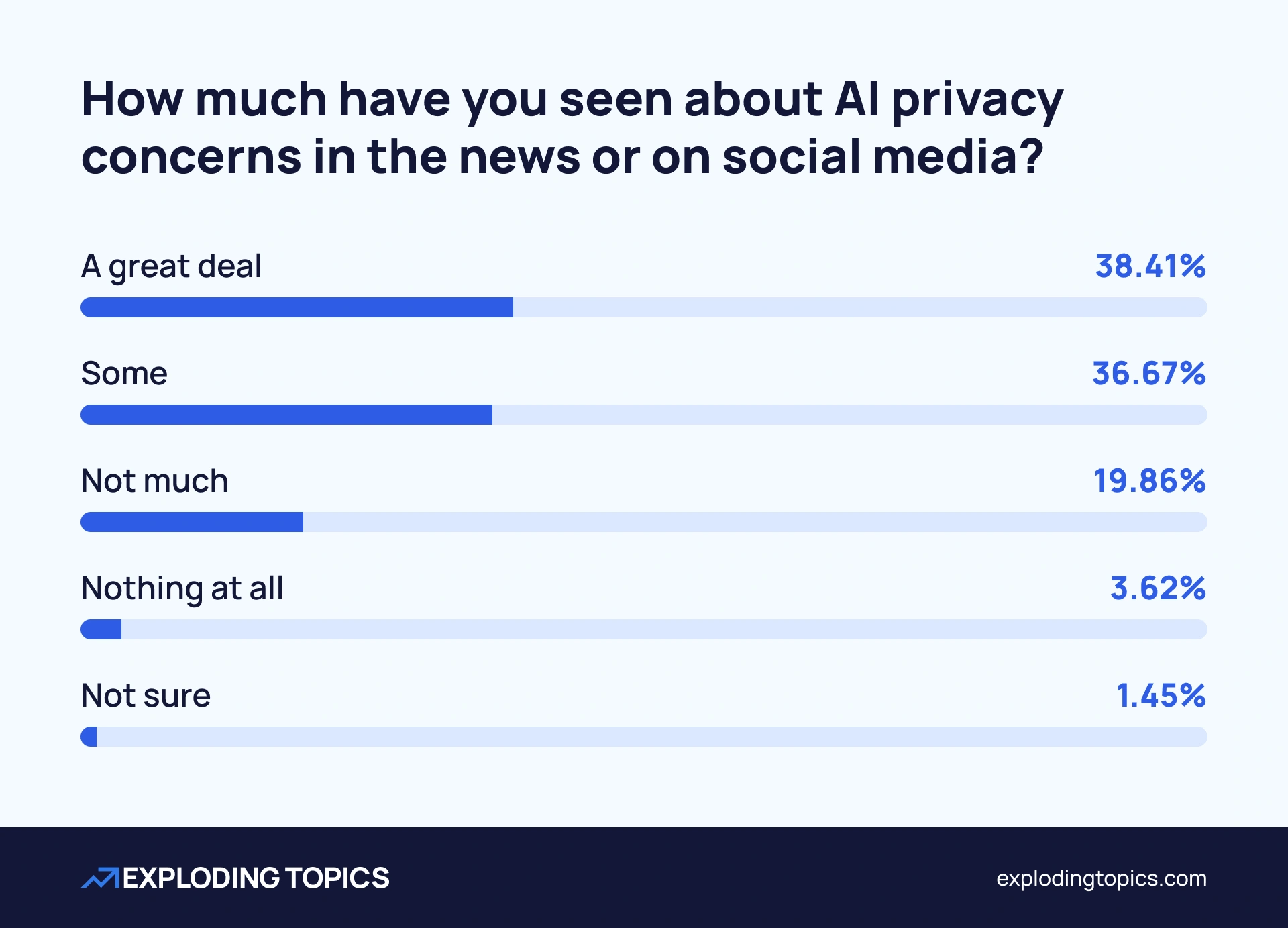

Our remaining questions were posed specifically to AI users. Only 3.62% reported hearing “nothing” about AI privacy concerns in the news or on social media.

A significant majority of respondents (75.08%) had seen “some” or “a great deal” about AI privacy concerns. While men were somewhat more likely than women to have seen these reports, news and social media coverage has broadly reached all groups.

However, when asked about specific AI privacy cases that have attracted some publicity, the majority of respondents were in the dark.

(It’s worth noting that the questions were asked before the advent of ChatGPT ads, which have attracted significant privacy discussion, not to mention a Super Bowl ad by Anthropic.)

The Meta AI “Discover” Feed

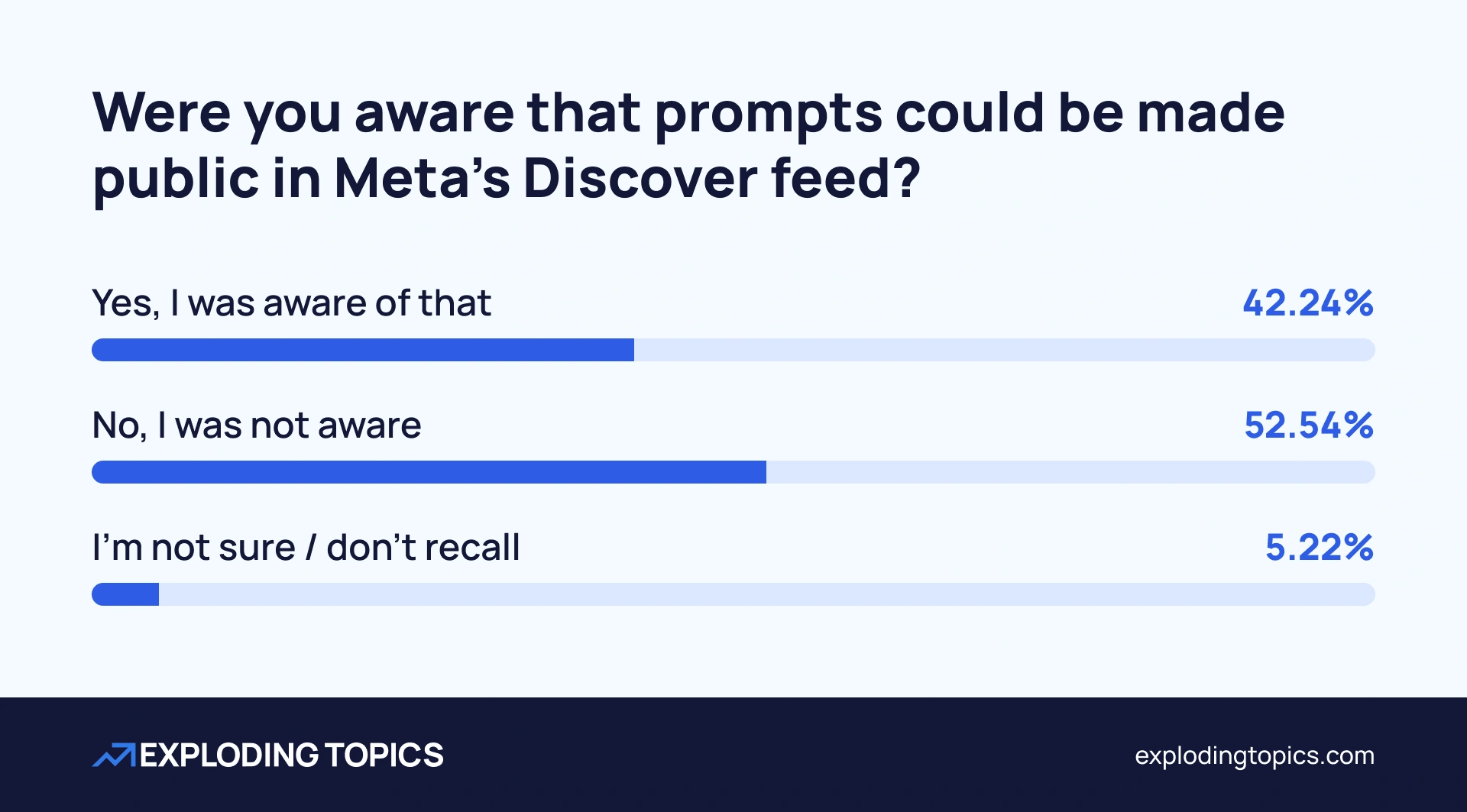

The Meta AI app was launched on April 29, 2025, promising “a new way to access your AI assistant”. It also introduced something known as the Discover feed.

This allows users to browse other people’s AI chatbot conversations, provided they have been shared. But given the personal nature of some of the chats that appear in the feed, there are legitimate concerns about whether all users are really aware of what they are sharing.

According to Wired, users have encountered medical information, home addresses, and details pertaining to pending court cases in Meta’s Discover feed. But our survey found that the majority of AI users remain unaware of this feature.

42.24% of respondents were aware that prompts could be made public in Meta’s Discover feed. But 52.54% were not aware, with a further 5.22% unsure.

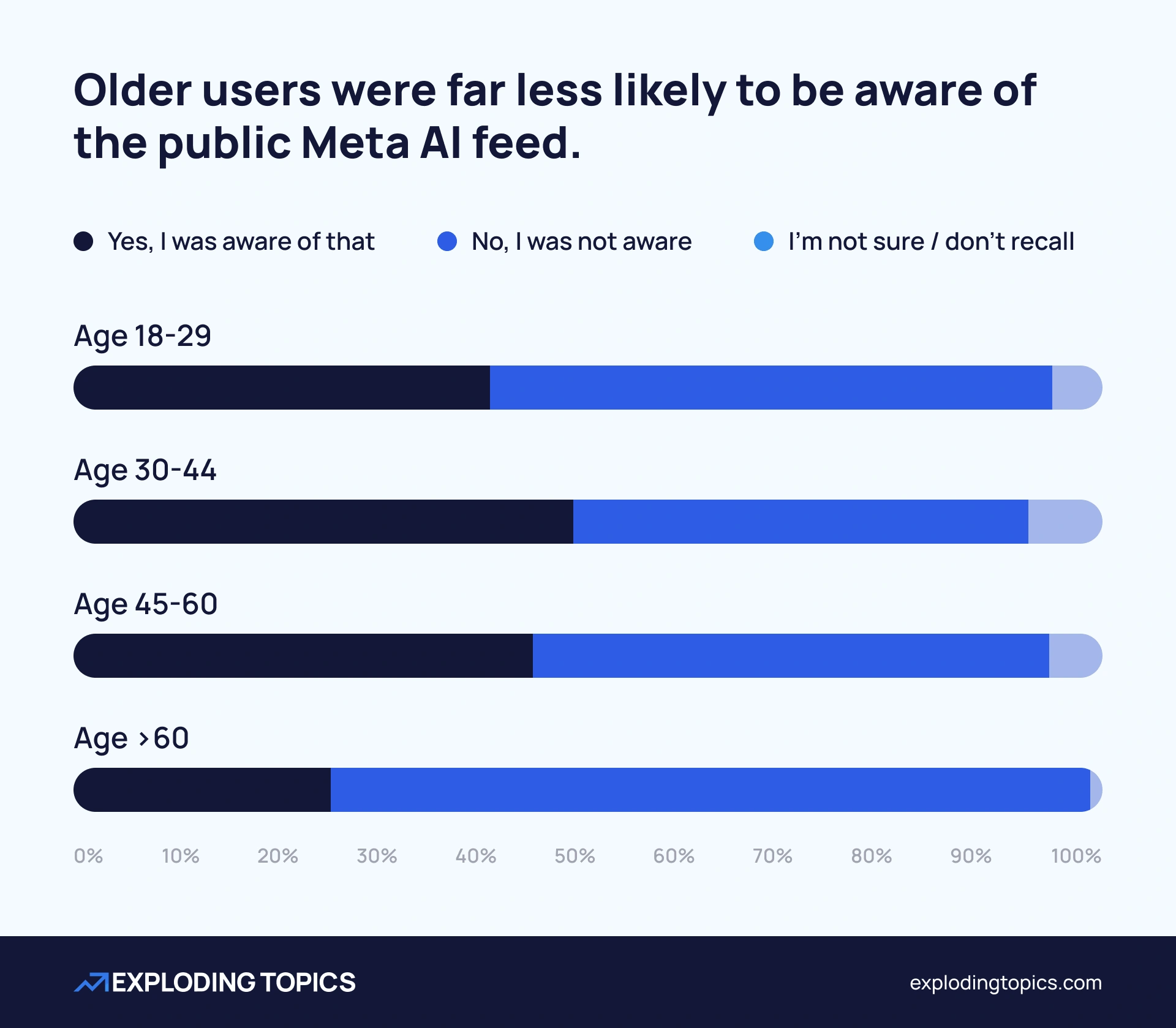

Older users were far less likely to be aware of the public feed. Among AI users over 60, 73.81% reported being unaware that prompts could be made public in Meta AI.

Meta has now added a pop-up asking users to confirm their decision when they opt to make a chat discoverable. But it is unclear whether that will be sufficient to protect the privacy of potentially vulnerable older users.

Google AI Mode

Google’s AI Mode is fast becoming a popular way of searching. Our first AI survey found that despite skepticism over accuracy, users are opting for Google’s AI toolkit due to its convenience.

In the current survey, 57.18% of respondents reported that they have tried Google’s AI Mode search feature.

But knowledge of potential privacy risks was once again lacking.

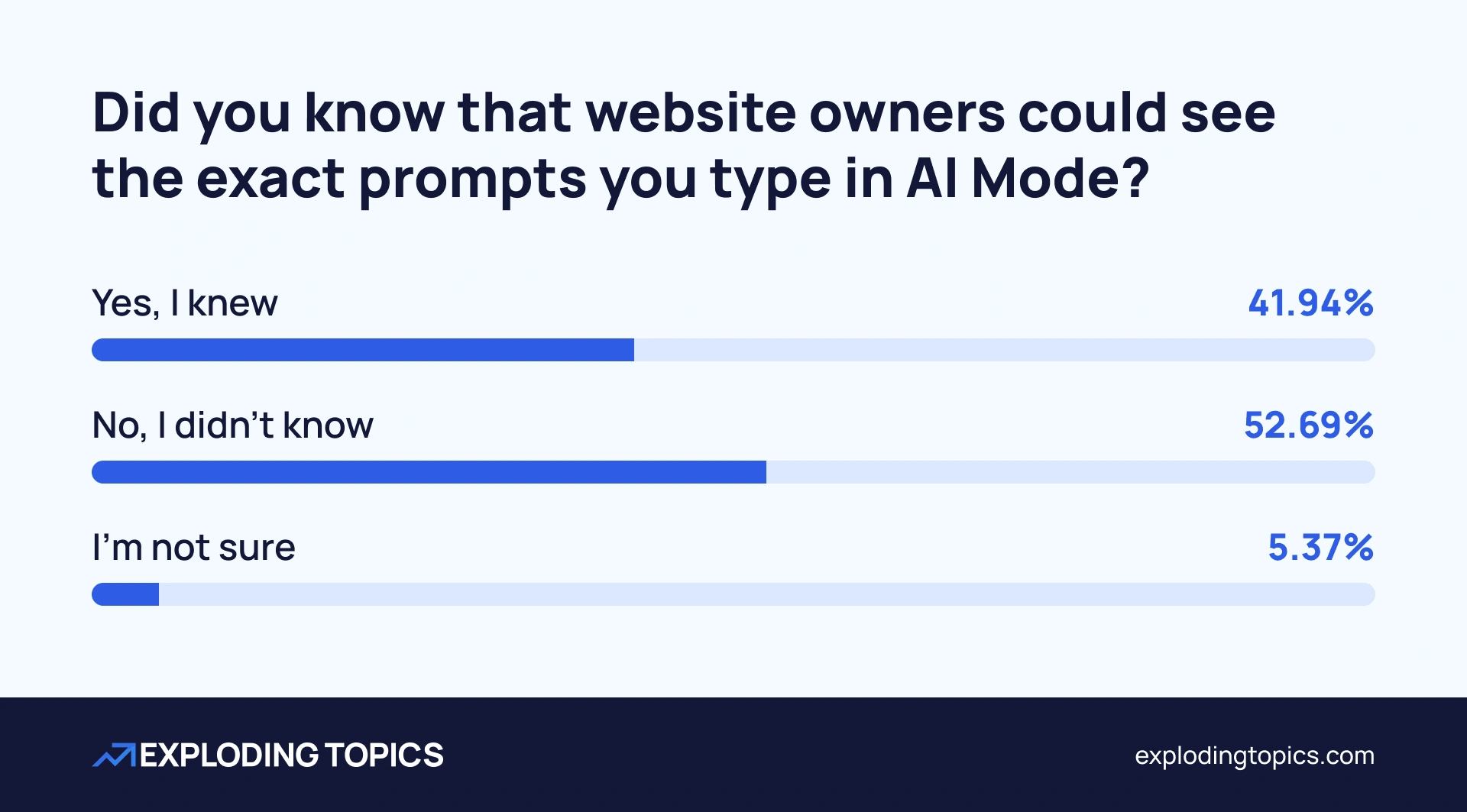

Google Search Console allows webmasters to track the AI Mode queries that lead to impressions and clicks. We asked respondents if they were aware that website owners could view their exact prompts.

Only 41.94% were aware that Google AI Mode prompts could be visible to others. 52.69% did not know, with a further 5.37% unsure.

Over-60s (70.24%) were once again most likely to be unaware of the privacy risk. But more than half of users aged 18-29 and 45-60 were also unaware that Google AI Mode prompts could be seen by website owners.

Google AI Mode is also joining ChatGPT in trialling ads below AI responses, which could raise further privacy questions.

Major fears about AI prompts being made public

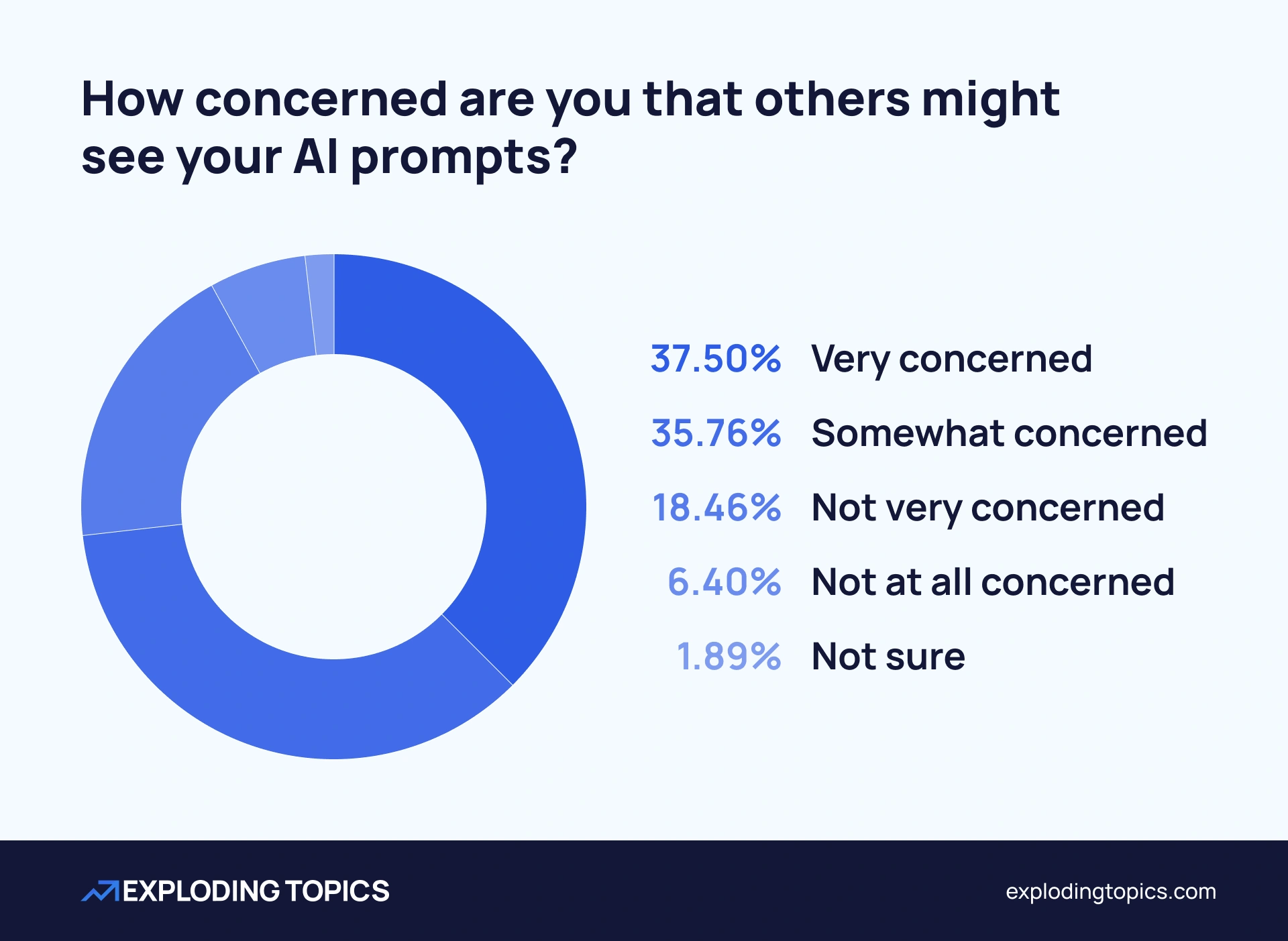

Although most are unaware of the privacy risks in Meta’s Discover feed and Google AI Mode, the vast majority of AI users are concerned about their prompts being made public.

73.26% are at least somewhat concerned that others might see their AI prompts.

That figure includes the 37.5% of AI users who are very concerned.

Only 6.4% of AI users are not at all concerned, while 18.46% are not very concerned.

This widespread concern is not surprising when you consider the kind of content that people are sharing with AI.

In our second AI survey, we found 37.1% of people using the technology for health advice, 42.4% using it for financial planning, and 34.48% turning to AI for relationship or personal advice.

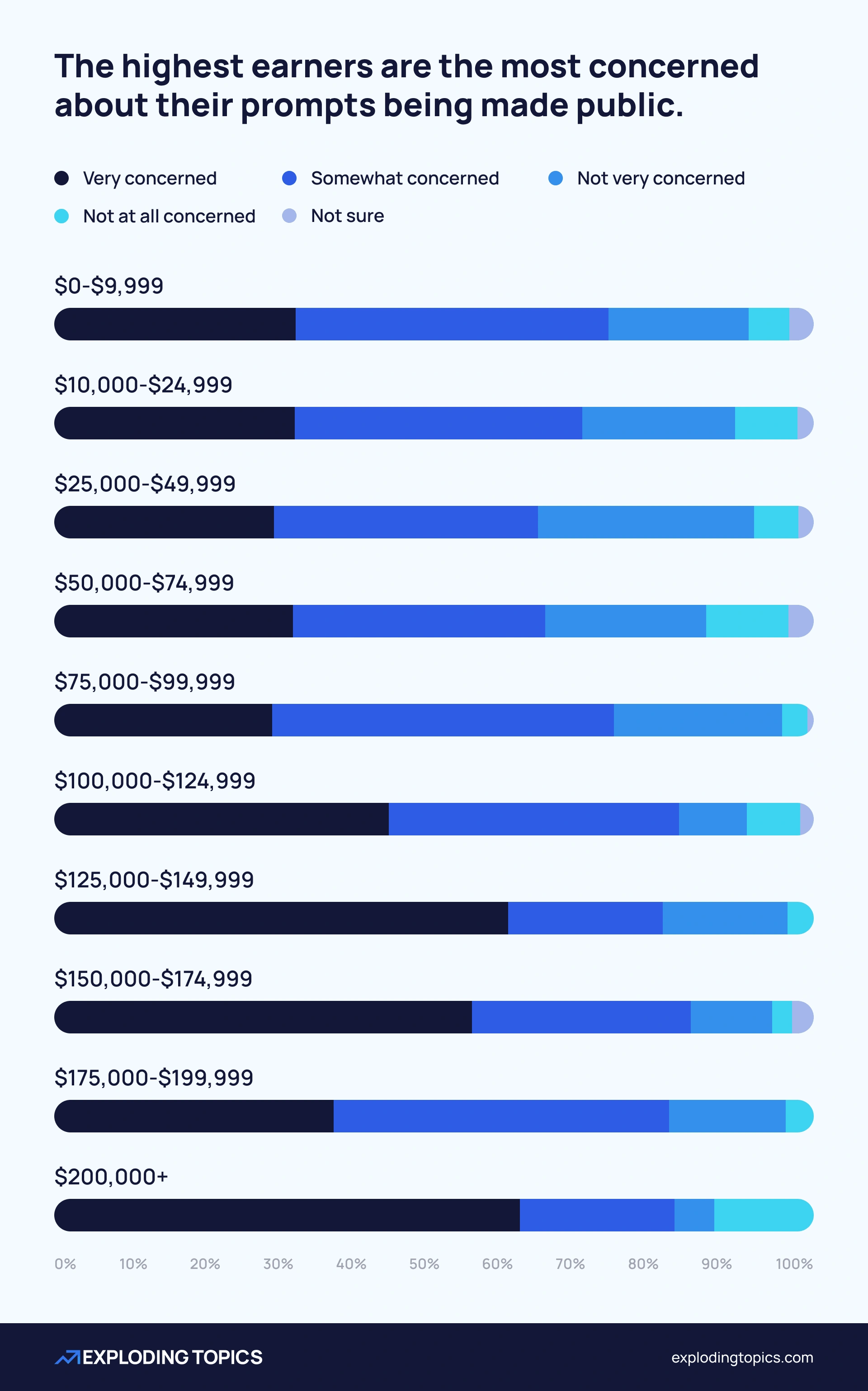

Meanwhile, this survey found that the highest earners are the most concerned about their prompts being made public.

61.54% of those with a household income of $200,000 or higher are very concerned that others might see their AI prompts. A further 20.51% are somewhat concerned.

AI users are generally more worried about their personal prompts being exposed than prompts they have used for work.

33.87% of respondents were most worried about privacy in the personal or home use scenario. 23.55% said the professional setting concerned them more, while 33.43% expressed equal concern.

Men (37.23%) are more likely than women (29.81%) to worry specifically about the privacy of their personal AI conversations.

AI privacy concerns go beyond prompt exposure

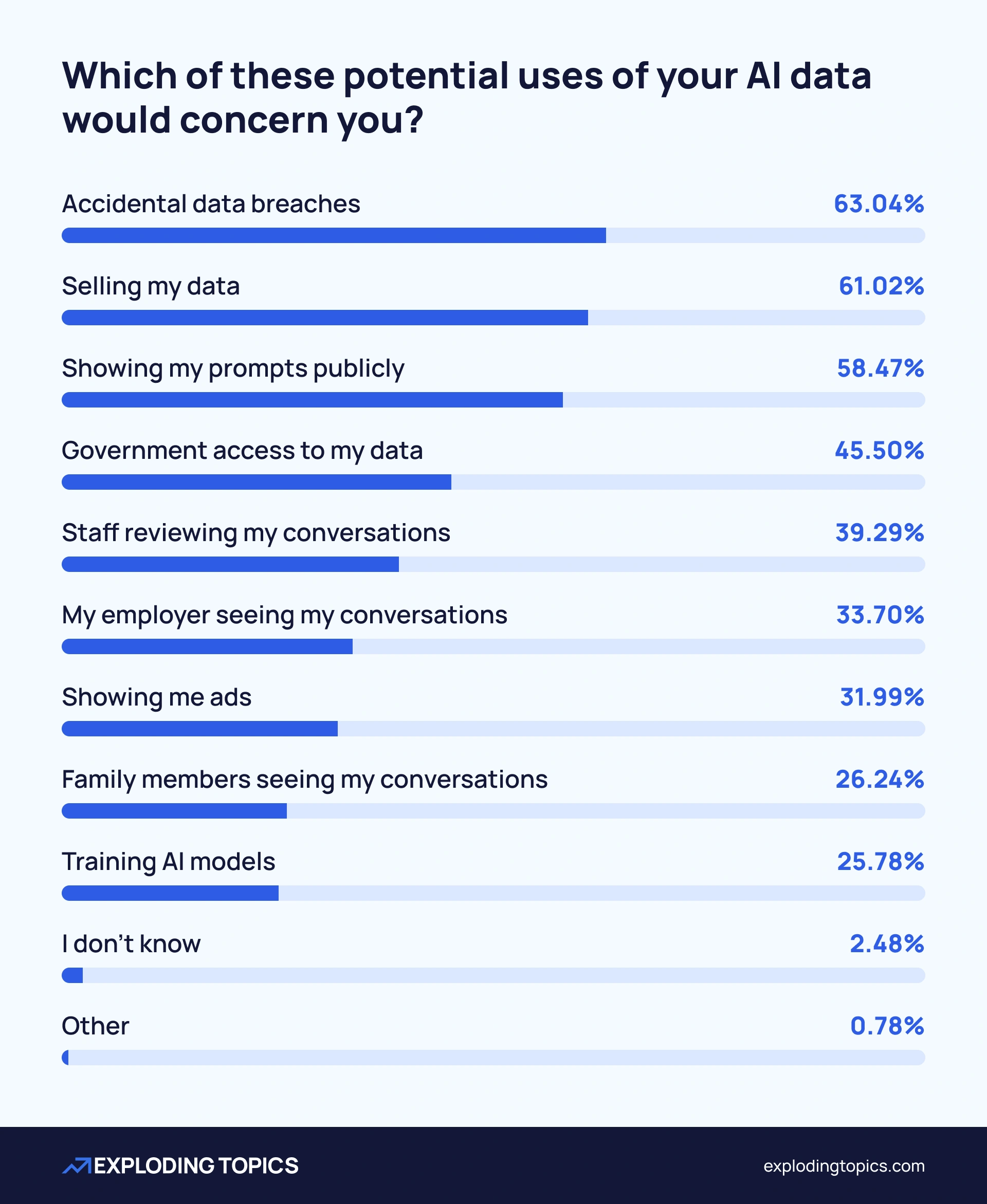

AI privacy concerns do not start and end with the exposure of user prompts. In fact, AI users have even bigger fears.

Users are most concerned about how AI organizations will handle their data.

63.04% expressed worry about accidental data breaches, while 61.02% feared their data would be sold. Together, these were the two leading concerns.

Major companies like OpenAI explicitly state that they do not sell user data. However, they may use it to train future models.

As for data breaches, a recent Business Digital Index study found that 5 out of 10 major LLM providers have suffered at least one breach. It recorded over 1,000 incidents involving OpenAI.

These are unconfirmed, and not acknowledged by OpenAI. However, the overall cybersecurity landscape gives AI users legitimate cause for concern: across the board, there were 4.6 million victims of a data breach per day in 2024.

Prompt exposure was the next-biggest concern about AI data, followed by government access to data, and staff review of conversations.

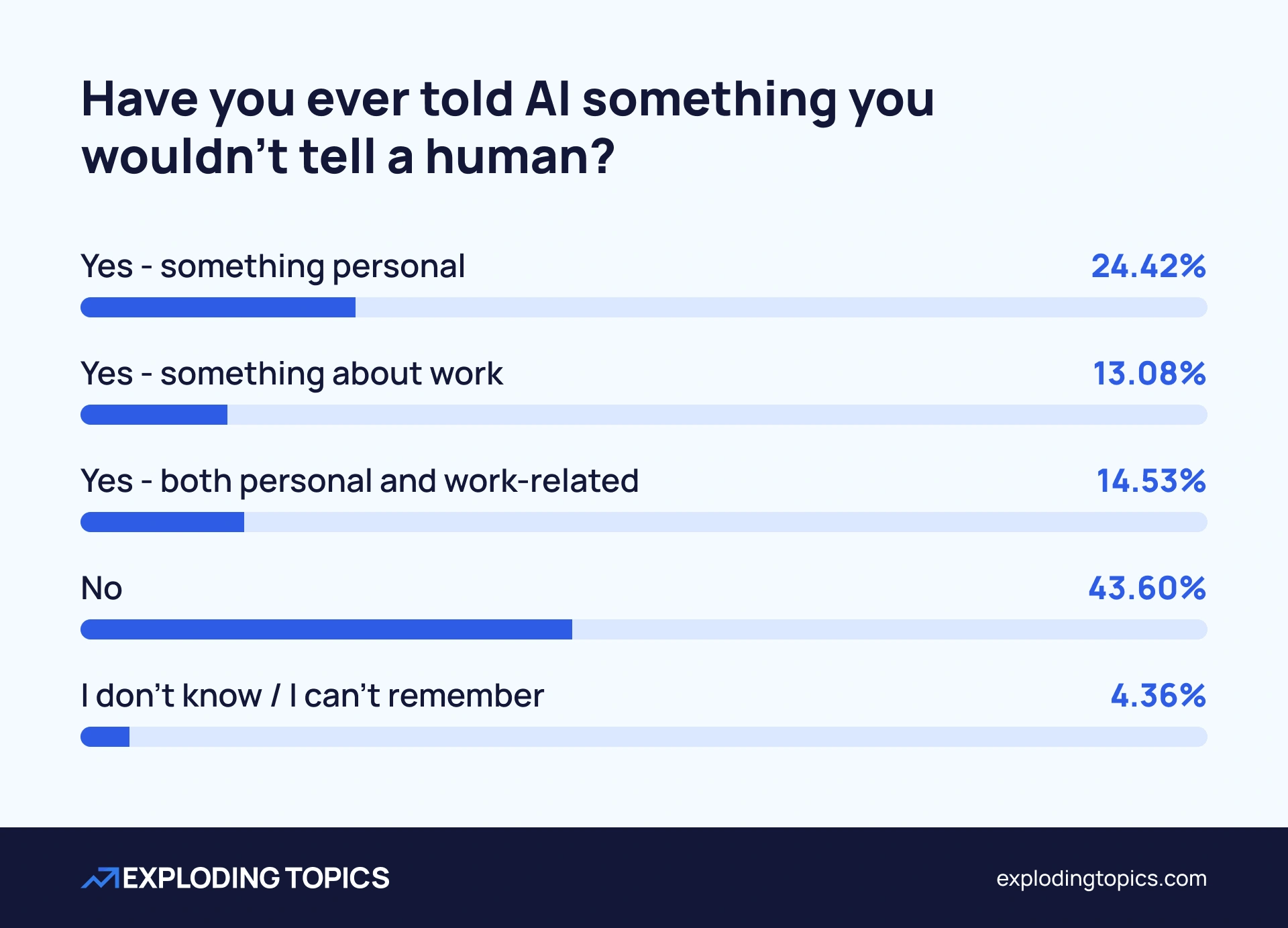

AI chatbots being trusted with secrets

In spite of these significant privacy fears, AI users continue to trust the technology with sensitive, personal data.

52.13% of people have told AI something that they would not tell a human.

Sharing personal confidences is more common than divulging professional secrets, although more than 1 in 4 people have revealed something private about work to an AI chatbot.

24.42% have only shared something personal, 13.08% have only shared something about work, and 14.63% have shared both kinds of information.
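The three figures above are mutually exclusive groups, so the headline numbers can be recovered by adding them. The short sketch below shows that arithmetic; the variable names are illustrative and only the percentages come from the survey.

```python
# Shares of AI users (percent) in three non-overlapping groups, as quoted above.
only_personal = 24.42  # shared something personal only
only_work = 13.08      # shared something about work only
both_kinds = 14.63     # shared both kinds of information

# Anyone in any of the three groups has told AI something
# they would not tell a human.
any_secret = round(only_personal + only_work + both_kinds, 2)

# "Revealed something private about work" covers the work-only
# group plus the group that shared both kinds.
any_work = round(only_work + both_kinds, 2)

# The same logic gives the share who revealed something personal.
any_personal = round(only_personal + both_kinds, 2)

print(f"Told AI any secret:      {any_secret}%")
print(f"Shared a work secret:    {any_work}%")
print(f"Shared a personal one:   {any_personal}%")
```

The work total of 27.71% is what sits behind the "more than 1 in 4" statement, and the overall total matches the 52.13% headline figure.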

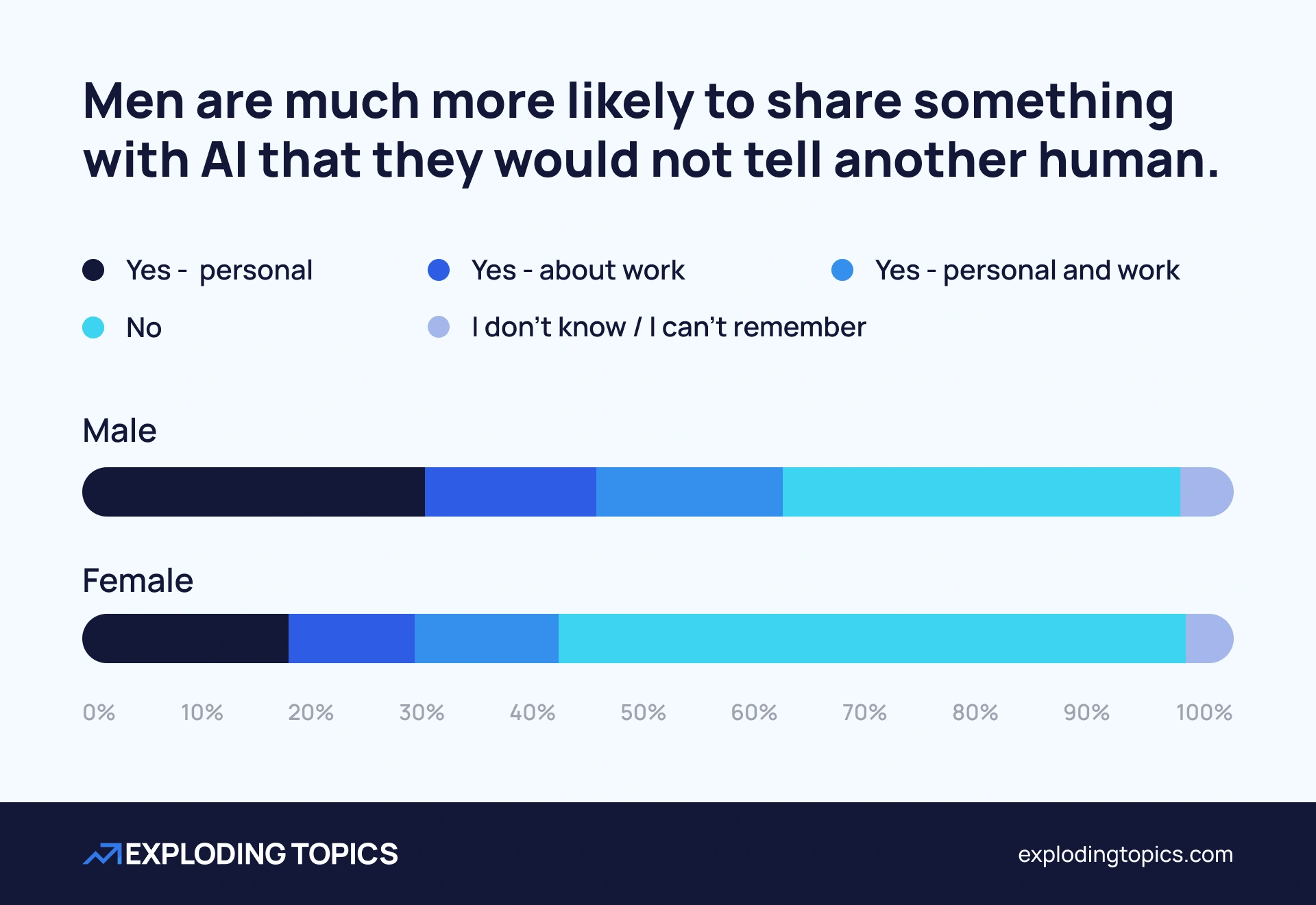

Men are much more likely than women to share something with AI that they would not tell another human. 60.22% of men have divulged secrets to AI, compared to 41.65% of women.

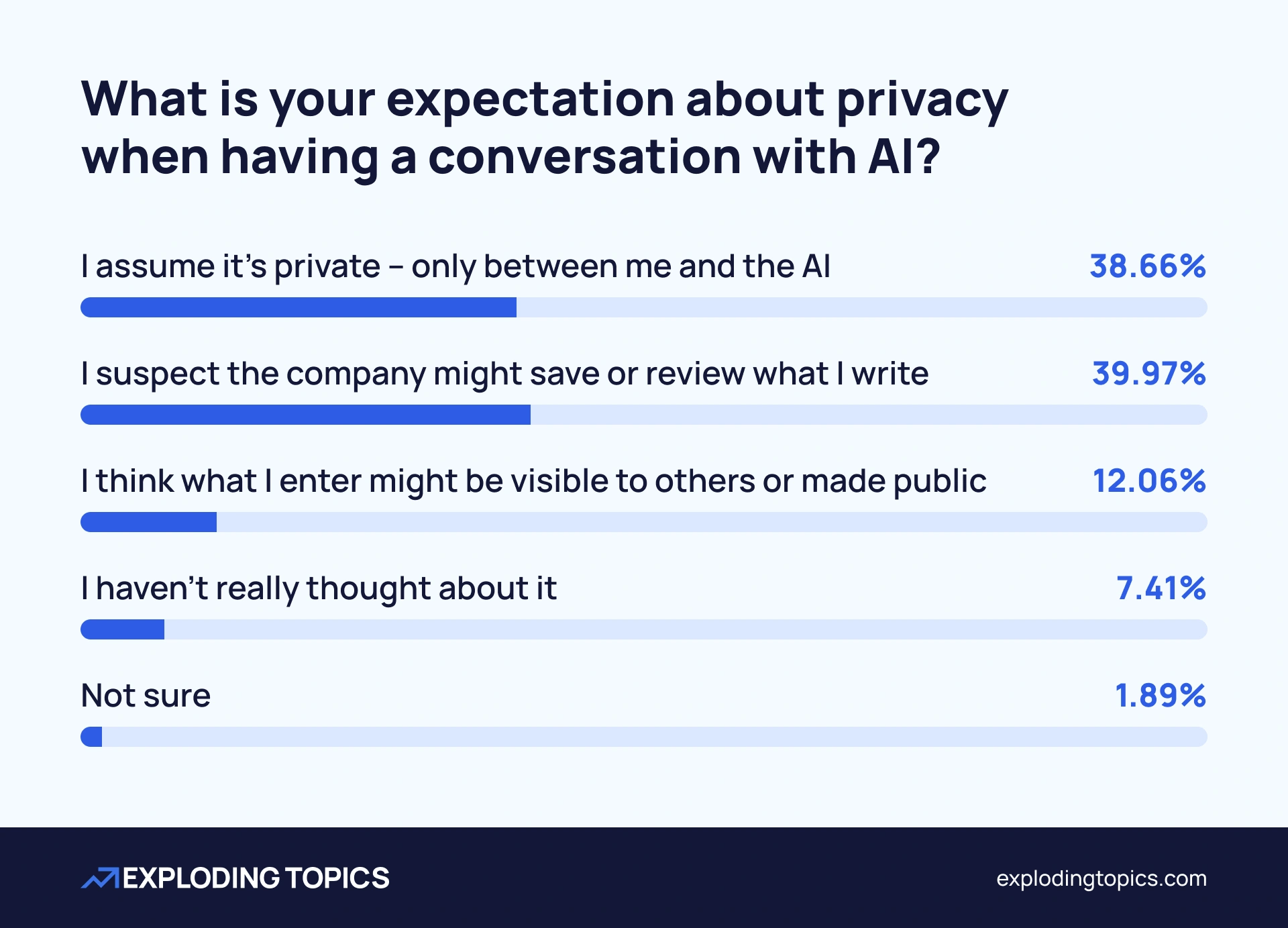

Expectations of privacy when using AI

AI users are sharing personal information with AI despite having only limited faith in privacy claims.

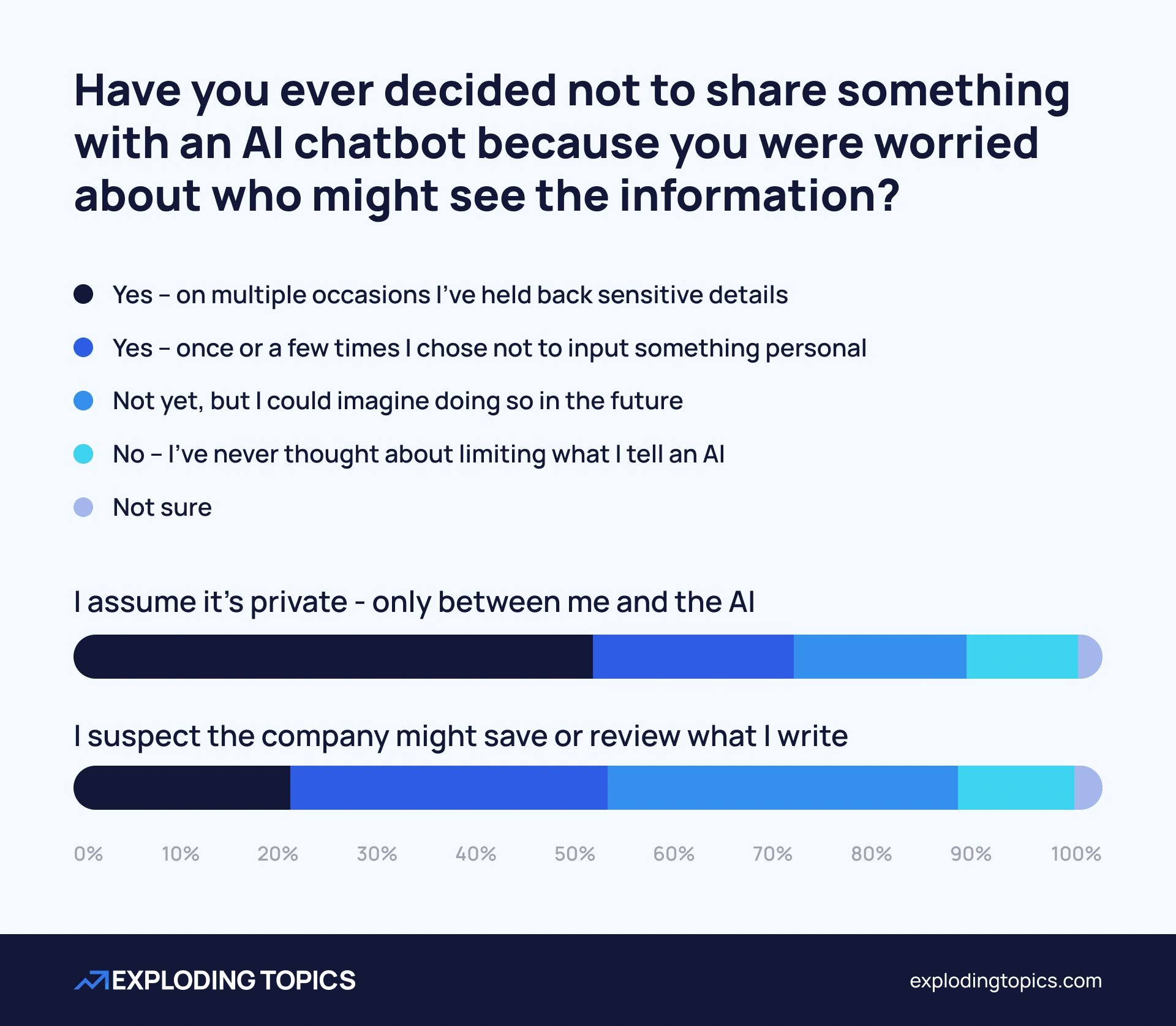

Fewer than 4 in 10 users (38.66%) assume that their conversations with AI are private.

A marginally larger group (39.97%) actively suspect that AI companies may save or review what they write. A further 12.06% think that their inputs might eventually be visible to others.

Interestingly, skepticism over privacy is most rife among the 18-29 age group, 49.18% of whom suspect that AI companies may be saving and/or reviewing what they write. Those aged 45-60 are most likely to assume that communications are private.

It’s also fascinating to note that those who are most concerned by the prospect of their prompts being made public are also most likely to assume that their conversations will be private.

Among those who said they were “very concerned” by the risk of others seeing their AI prompts, 64.34% also revealed that they assume the conversations they have with AI are kept entirely private.

Only 7.75% of the “very concerned” group thought that what they said to an AI chatbot might one day become public to others, with 23.64% suspecting oversight by the AI companies.

Modifying AI use due to privacy fears

From this, it’s tempting to conclude that the AI users sharing most freely with chatbots are only doing so because they assume it is safe and secure. But our data doesn’t bear that out.

On the whole, users are not routinely modifying their behaviors based on perceived privacy concerns.

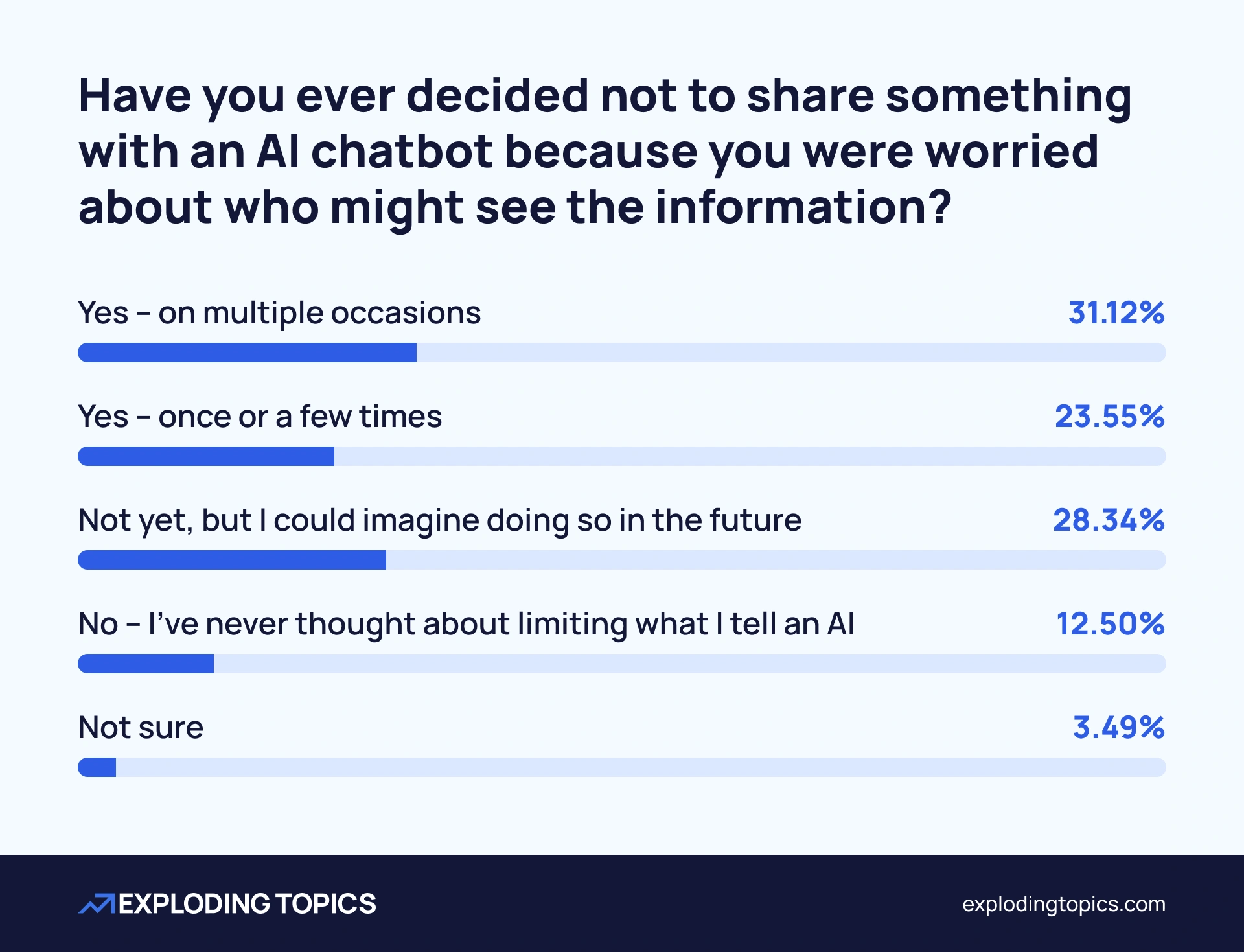

Just 32.12% of respondents report having held back sensitive details from AI on multiple occasions.

A further 23.55% have done so once or a few times.

28.34% have never held back any information, but would consider doing so in future. 12.5% have never considered limiting what they tell an AI.

Crucially, the respondents who suspect that AI companies may save or review their chats are no more likely than others to have held back private information.

Only 20.36% of these privacy-skeptic AI users have held back sensitive information multiple times, versus 50.38% of the users who have an assumption of privacy. So those who doubt the security of their chats actually appear to be sharing more freely.

Moreover, being presented with new information about the state of AI privacy is not likely to significantly impact AI users’ behaviors.

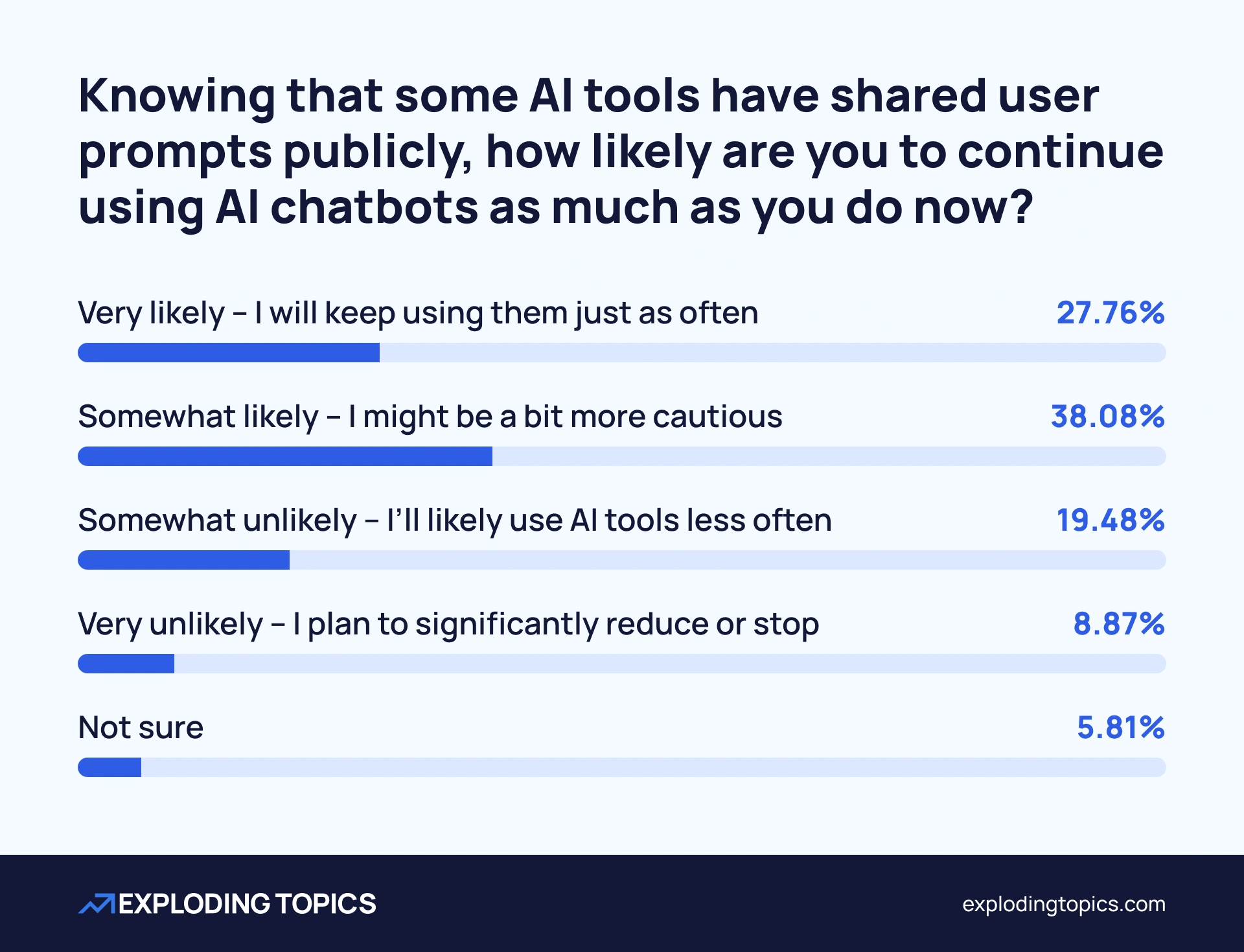

We asked our respondents how likely they would be to continue using AI chatbots the same amount, knowing that some AI tools have shared user prompts publicly or with website owners. Only a minority indicated that they would reduce their usage.

More than a quarter (27.76%) said that they were very likely to maintain their current usage despite privacy concerns. 38.08% said that they would continue almost as usual, although they might be “a bit more cautious”.

In other words, 65.84% of AI users have no intention of significantly reducing their usage even after being told about the real risk of their prompts being made public.

Just 8.87% said they now intended to significantly reduce or stop using AI chat tools altogether. Around 1 in 5 (19.48%) said that they would probably start to use AI tools less often as a result of privacy concerns.
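The combined figures in this section come from grouping individual answer options. The sketch below reproduces that grouping. The labels are paraphrases rather than the survey's exact wording, and the grouping into "keep" and "reduce" is our reading of the text above.

```python
# Share of AI users (percent) choosing each answer, as quoted in this section.
# The keys are paraphrased labels, not the survey's exact answer wording.
responses = {
    "very likely to maintain usage": 27.76,
    "continue, but a bit more cautious": 38.08,
    "probably use AI less often": 19.48,
    "significantly reduce or stop": 8.87,
}

KEEP_USAGE = ["very likely to maintain usage", "continue, but a bit more cautious"]
REDUCE_USAGE = ["probably use AI less often", "significantly reduce or stop"]


def combined_share(shares, labels):
    """Add up the percentage shares for the given answer labels."""
    return round(sum(shares[label] for label in labels), 2)


keep_share = combined_share(responses, KEEP_USAGE)
reduce_share = combined_share(responses, REDUCE_USAGE)

# Whatever is left over was not broken down in the text above.
not_broken_down = round(100 - keep_share - reduce_share, 2)

print(f"No significant reduction planned: {keep_share}%")
print(f"Intend to reduce or stop:         {reduce_share}%")
print(f"Not broken down in the text:      {not_broken_down}%")
```

The first two totals match the 65.84% and 28.35% figures quoted in this report.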

There is a slightly different picture among those who reported previously being unaware of the Meta AI and Google AI Mode privacy concerns.

Among those who did not previously know about Meta’s public Discover feed, 37.95% said they would be at least somewhat unlikely to keep using AI chatbots at their current levels.

Likewise, 39.78% of those who did not know about Google AI Mode searches being visible to webmasters expressed some kind of inclination to reduce their AI usage going forward. But that still leaves a majority who intended to maintain their existing usage.

In short, while many AI users intend to proceed with a little more caution when exposed to the reality of privacy fears, it is rarely enough to convince people to halt or even substantially reduce their usage.

Which LLMs are most trusted on privacy?

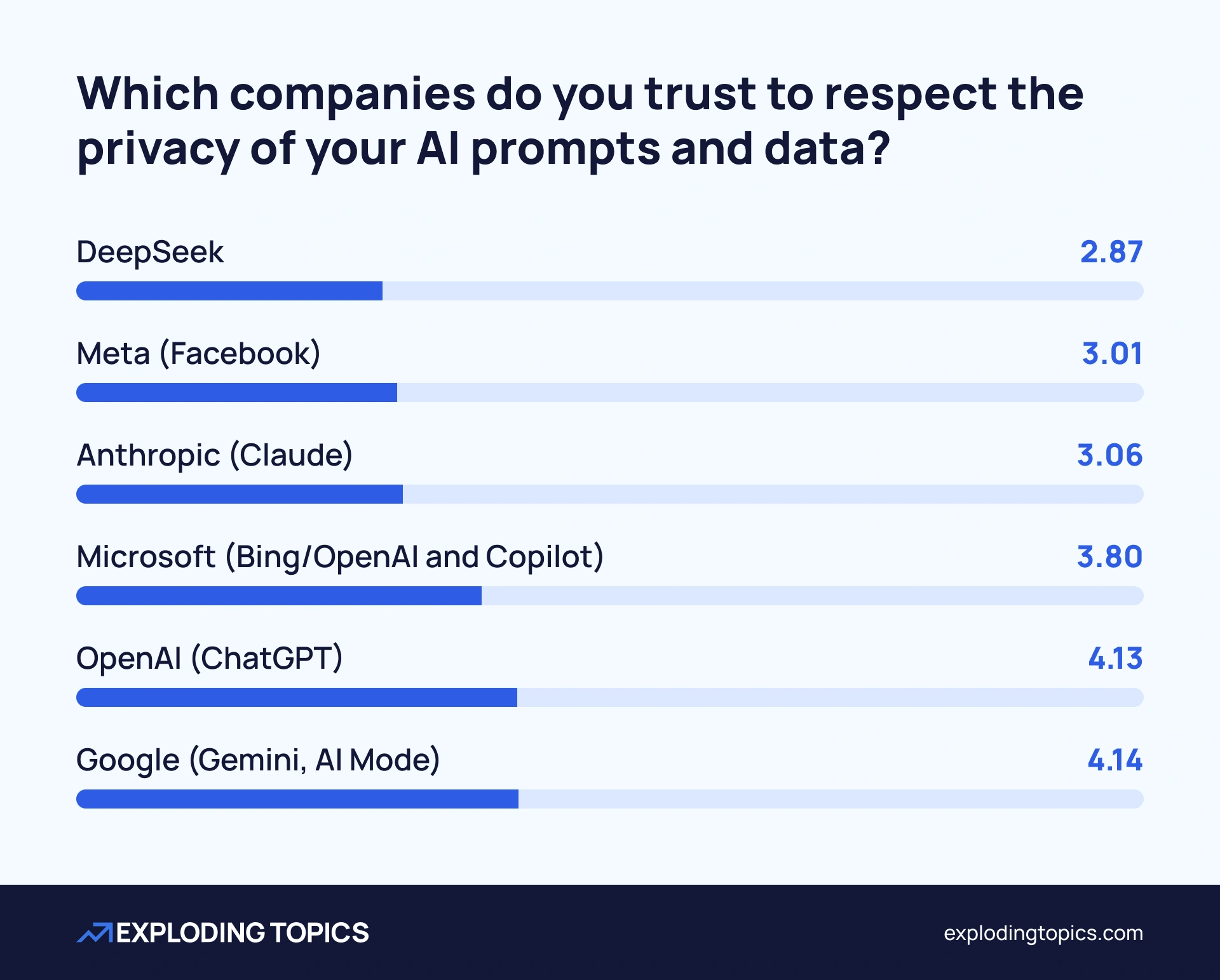

Privacy concerns are not felt equally across all AI chatbots. While only 20.78% of respondents say that they trust any of the major tech companies “a lot”, Google is the most-trusted of the major LLM providers.

We asked respondents to rank OpenAI, Google, Microsoft, Anthropic, Meta, and DeepSeek from 1-6, based on how much they trust them to respect the privacy of AI prompts and data. Over two-thirds (66.73%) placed Google among their top 3, with 25% putting it first.

OpenAI, maker of ChatGPT, was placed first by the highest number of respondents (30.51%). But it was also placed lower down by a greater number of respondents than Google.

Scores were calculated based on a weighting system, with higher rankings worth more “points”, and the total number of points then divided by the number of respondents.
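As a rough illustration of how a weighted ranking score of this kind can work, here is a minimal sketch. It assumes a simple linear scheme in which first place earns 6 points and sixth place earns 1 point. The exact point values used in the survey are not stated, and the placeholder companies and responses below are invented for the example rather than taken from the survey.

```python
from collections import Counter

NUM_OPTIONS = 6  # respondents ranked six companies from 1 (most trusted) to 6


def points_for_rank(rank, num_options=NUM_OPTIONS):
    """Assumed linear weighting: rank 1 earns num_options points, last earns 1."""
    return num_options - rank + 1


def weighted_trust_scores(rankings):
    """Return average points per respondent for each company.

    `rankings` is a list with one dict per respondent, mapping a company
    name to the rank that respondent gave it.
    """
    totals = Counter()
    for ranking in rankings:
        for company, rank in ranking.items():
            totals[company] += points_for_rank(rank)
    # Divide each company's total points by the number of respondents.
    return {company: total / len(rankings) for company, total in totals.items()}


# Hypothetical responses from three respondents (not survey data).
sample_rankings = [
    {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5, "F": 6},
    {"A": 2, "B": 1, "C": 3, "D": 4, "E": 6, "F": 5},
    {"A": 1, "B": 3, "C": 2, "D": 5, "E": 4, "F": 6},
]

scores = weighted_trust_scores(sample_rankings)
for company, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{company}: {score:.2f}")
```

A score built this way rewards consistently high placement, which is how a company can come out ahead overall without collecting the most first-place votes.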

Chinese AI tool DeepSeek is the least-trusted of the six AI tools, with more than half of respondents placing it fifth or sixth.

AI users are next-least trusting of Meta. It was ranked in last place by 27.02% of respondents, marginally higher than the 26.65% who put DeepSeek last.

AI privacy in the workplace

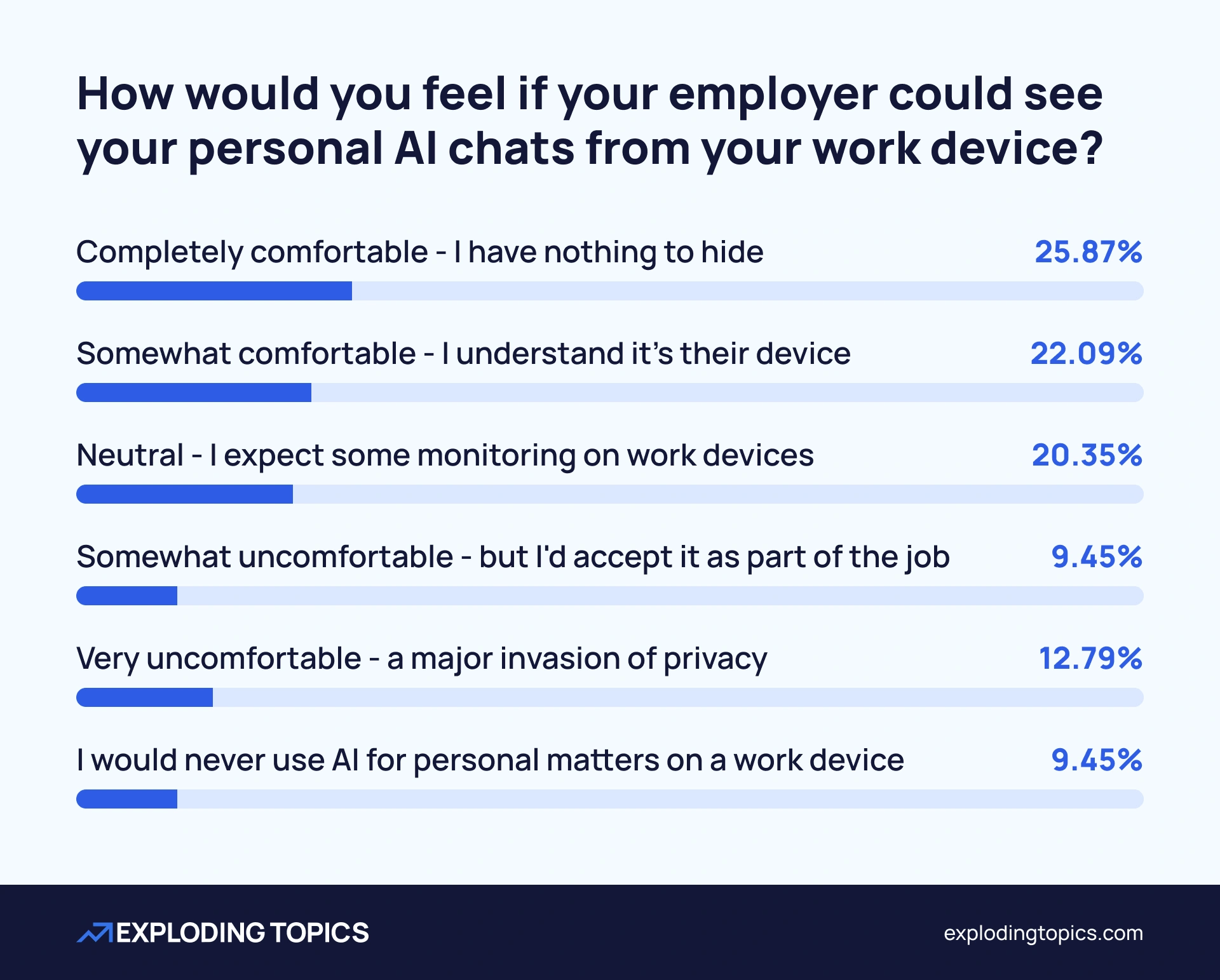

AI in the workplace comes with an extra layer of potential privacy concerns, given the risk that employers might be able to access chats. Even so, overall fears about AI privacy tend to be lower in professional settings.

Only a little more than 1 in 10 people (12.79%) would consider it a major invasion of privacy if an employer viewed their personal AI chats on a work device.

The prevailing attitude is one of acceptance that monitoring is legitimate on work devices.

25.87% reported that they would feel completely comfortable with their employers viewing their AI chats, as they have nothing to hide.

A further 22.09% said they would be somewhat comfortable, and another 20.35% would feel neutral — with both groups acknowledging that some degree of oversight is expected on work devices.

Interestingly, only 9.45% of respondents said they would never use AI for personal matters on a work device.

So most users are at least occasionally having personal chats with AI on their work devices, but concerns about monitoring are low.

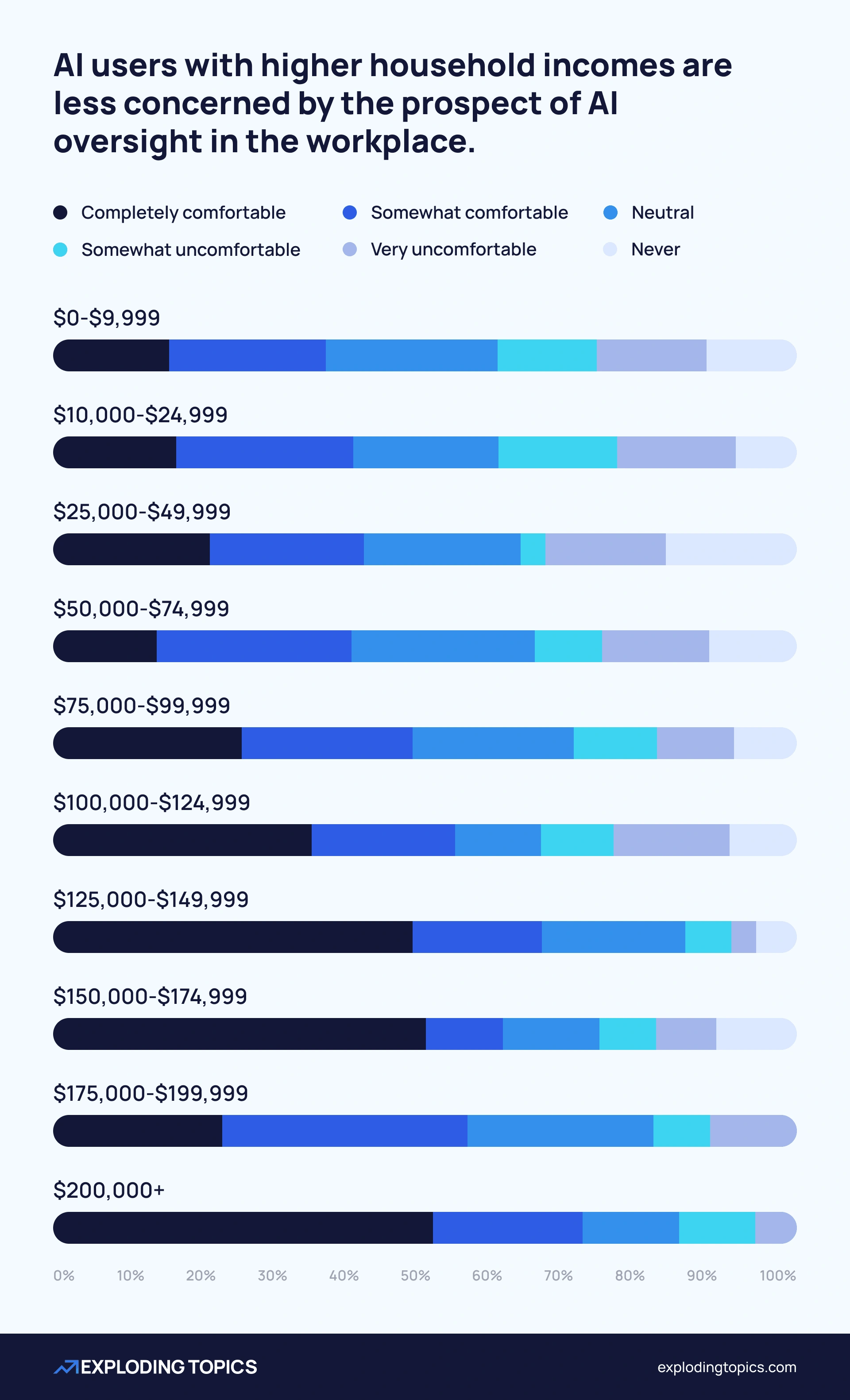

In general, AI users with higher household incomes are less concerned by the prospect of AI oversight in the workplace.

More than half of AI users with household incomes above $200,000 would be completely comfortable with their employers having access to personal chats conducted on workplace devices, versus just 15% of the lowest income group.

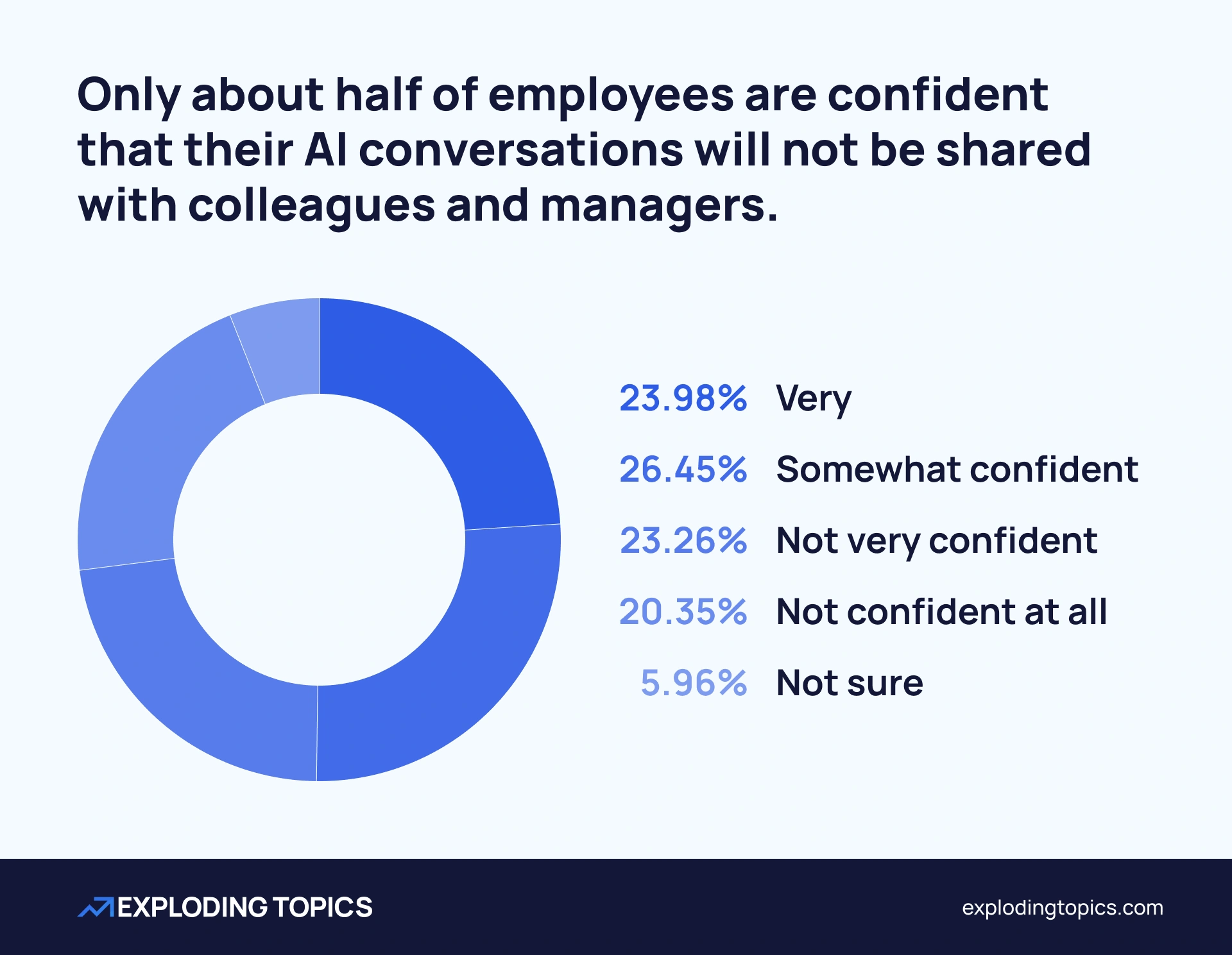

Trust in employers to handle AI data responsibly is mixed

When looking specifically at AI tools provided by an employer, a significant number of employees do not trust that their conversations will be private.

Only about half of employees are confident that their AI conversations will not be shared with colleagues and managers.

23.98% report being very confident in the knowledge that conversations with workplace AI chatbots will stay private. Another 26.45% are somewhat confident.

On the other hand, 23.26% are not very confident, while 20.35% are not confident at all — in other words, they would expect their conversations to be shared.

Respondents in the West North Central region exhibited the least faith in their employers, with 62.16% either not very or not at all confident that workplace AI chats would remain private and secure.

The Mid-Atlantic region saw the highest trust in employers, with only 33.02% lacking confidence that their AI chats would be kept private.

Privacy fears are very real, but they won’t stop the AI train

Our research leaves no room for doubt: the average AI user is certainly worried about their privacy when using the technology.

However, we are seeing a recurring theme in our AI surveys: convenience is king.

Although many users seem under-informed about real, active AI privacy risks, they have only a limited appetite to reconsider their AI usage even once made aware of the dangers.

Indeed, users who assume that their chats will not remain private are among the most likely to share personal information with AI.

When offering an AI tool, making convincing privacy guarantees will undoubtedly appeal to potential users. But as long as it makes their lives easier, either at work or at home, then there is a fair chance they will use it anyway.

With AI clearly not going anywhere, it is essential for brands to adapt to the landscape. The Semrush AI SEO Toolkit lets you monitor AI mentions and optimize for LLMs, while also harnessing AI for powerful business insights.

Sign up for a Semrush free trial today to try 55+ tools for SEO, content marketing, and competitor research.

Exploding Topics is owned by Semrush. Our mission is to provide accurate data and expert insights on emerging trends. Unless otherwise noted, this page’s content was written by either an employee or a paid contractor of Semrush Inc.

Written By

James is a Journalist at Exploding Topics. After graduating from the University of Oxford with a degree in Law, he completed a... Read more