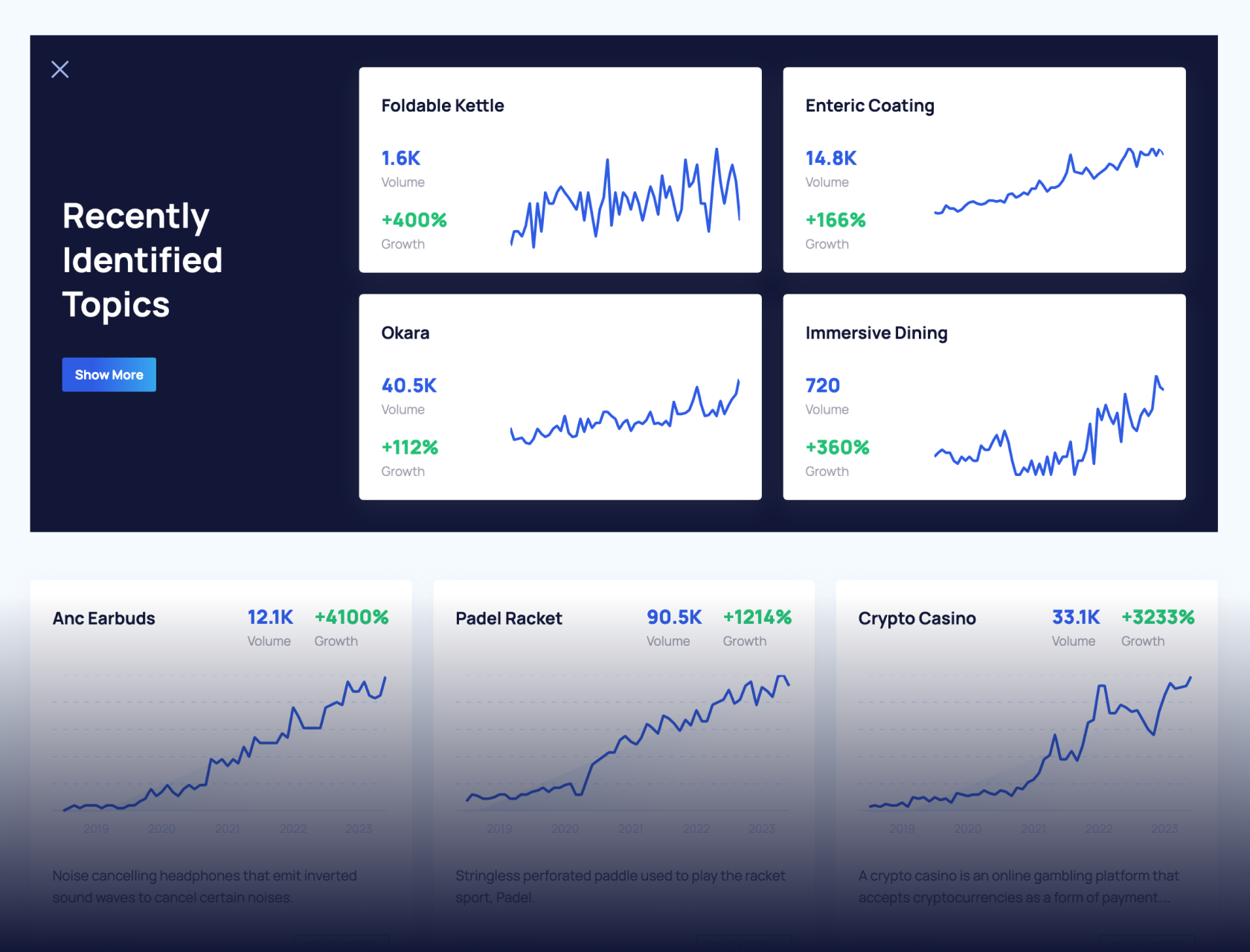

Get Advanced Insights on Any Topic

Discover Trends 12+ Months Before Everyone Else

How We Find Trends Before They Take Off

Exploding Topics’ advanced algorithm monitors millions of unstructured data points to spot trends early on.

Keyword Research

Performance Tracking

Competitor Intelligence

Fix Your Site’s SEO Issues in 30 Seconds

Find technical issues blocking search visibility. Get prioritized, actionable fixes in seconds.

Powered by data from

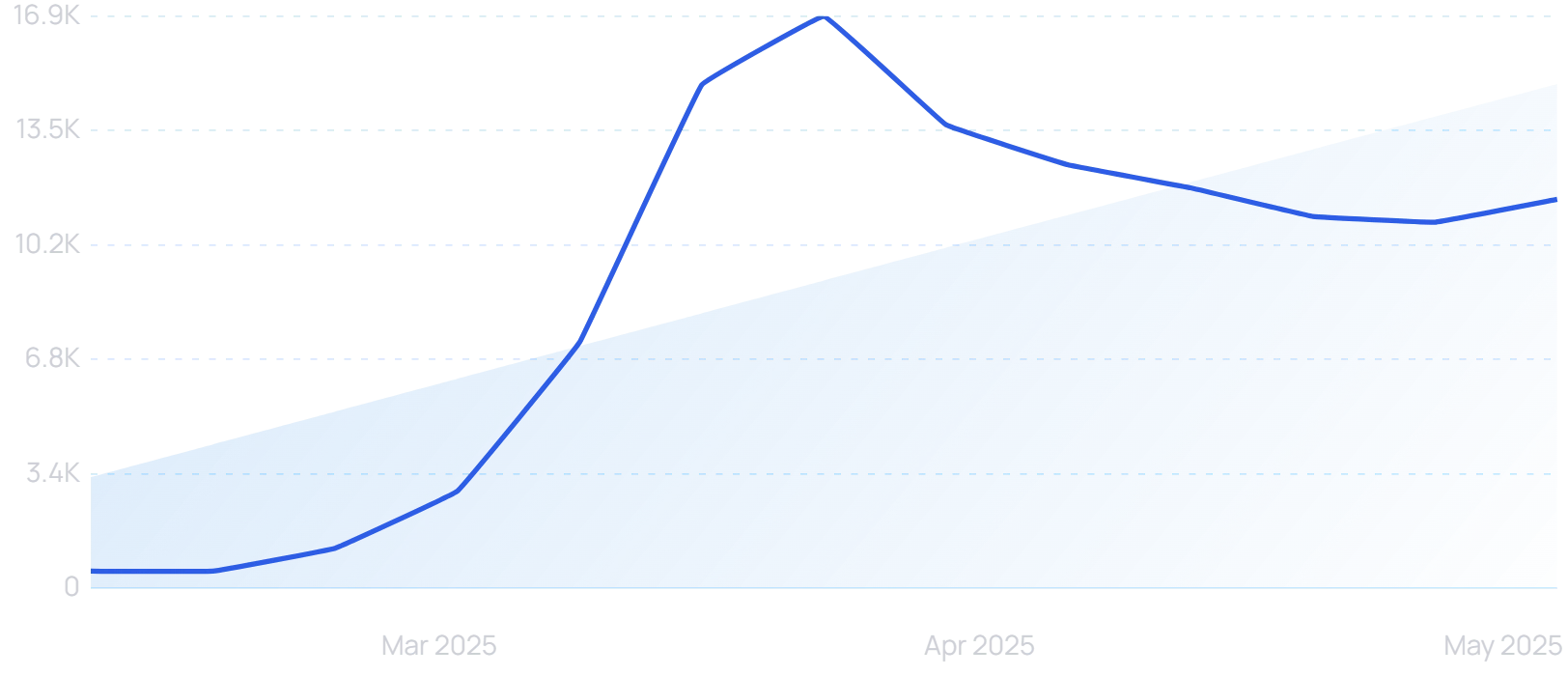

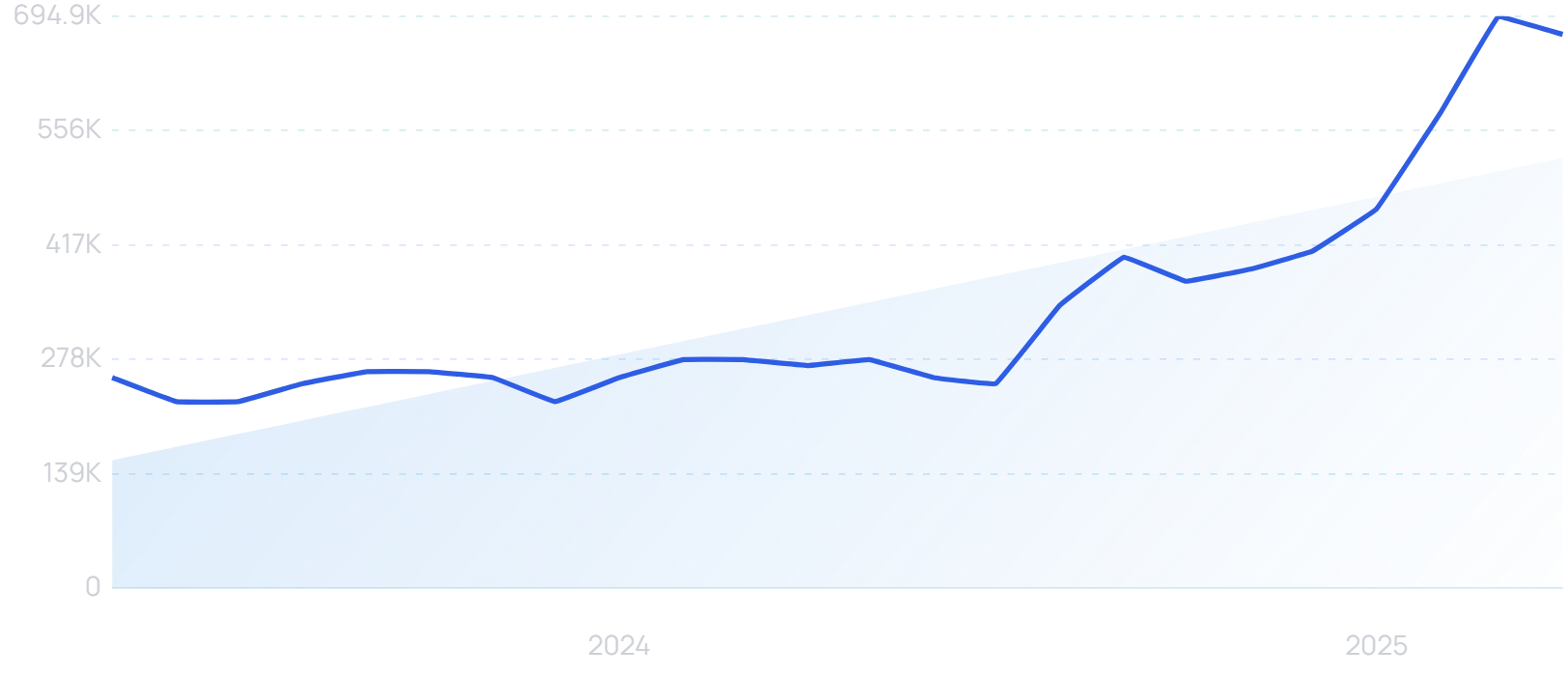

“Vibe coding” surges as a quarter of Y Combinator startups now get AI to write their code

“Vibe coding” is the latest artificial intelligence buzzword. It reflects the ever-improving capacity of AI to function as a genuinely useful software development tool.

“Vibe coding” searches are up 6700% in the last 3 months.

Simply put, the idea is that large language models (LLMs) like those behind ChatGPT allow programmers to write code without having to really engage their skills. They can work off “vibes”, getting LLMs to generate the code from simple plain-language prompts.

Increasingly, this technique now produces workable code. And when there are bugs, the AI can often identify and fix them.

Taken in a broader sense, vibe coding unlocks new possibilities for those with little or no programming experience, too. As long as they know what result they want to produce, anyone can ask an LLM for the required code.

What does this mean for the software development industry? Is vibe coding really up to complex, enterprise-scale projects, or is it just something to play around with on the side? I’ll explore all that and more — starting with a surprising figure to emerge from the latest Y Combinator cohort.

Vibe coding takes over at Y Combinator

Y Combinator has funded over 5,000 startups, which now have a combined valuation of over $600 billion. That figure is boosted by a handful of heavyweights like Stripe, Airbnb, and DoorDash.

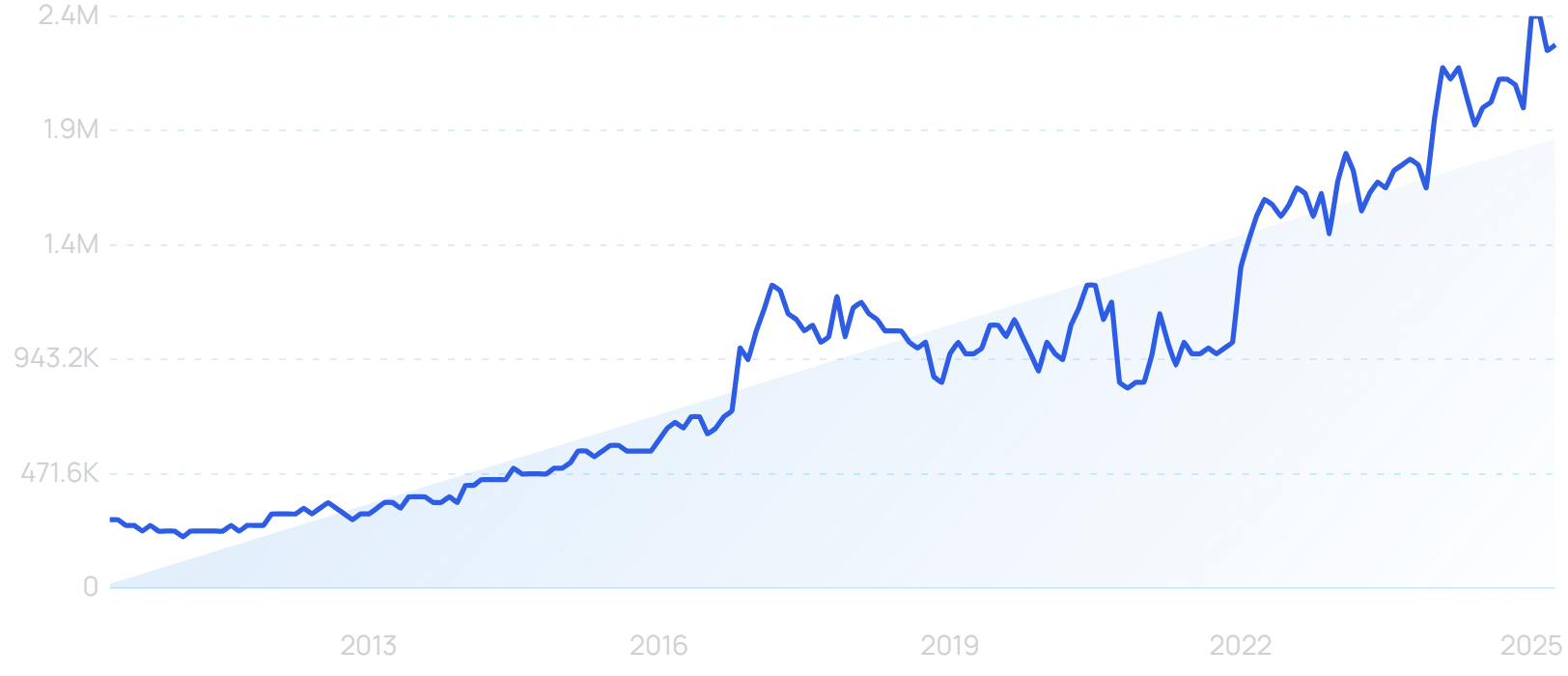

“Stripe, Inc.” is a Y Combinator success story. Searches are up 900% in 15 years.

Airbnb now receives 91.8 million monthly visits. Stripe's total payment volume hit $1.4 trillion in 2024. DoorDash has at least 37 million monthly active consumers.

But none of these platforms would be anything more than ideas without code. They are all good ideas (frictionless payments, short-term home lets, any food delivered), but they all rely on their underlying software to function.

Yet among the latest Y Combinator cohort, many of the startups have left the coding almost entirely to AI.

Around a quarter of those accepted into the accelerator use AI to write 95% or more of their code.

Admittedly, it is not an entirely representative sample, with roughly 80% of this cohort’s startups operating within the AI industry themselves. But it’s still a vote of confidence that those working with AI trust the technology enough to write the code.

And Y Combinator CEO Garry Tan says that the reduction in required coding manpower has produced unprecedented growth within the latest group of startups. “It’s not just the number one or two companies,” he revealed. “The whole batch is growing 10% week on week.”
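To put “10% week on week” in perspective, here is a minimal sketch of how that rate compounds. The quote does not say how long the growth has been sustained, so the time horizons below are assumptions chosen purely for illustration.

```python
def compound_growth(weekly_rate: float, weeks: int) -> float:
    """Return the growth multiple after compounding weekly_rate for `weeks` weeks."""
    return (1 + weekly_rate) ** weeks


# Illustrative horizons only; the source does not state a duration.
for weeks in (4, 12, 52):
    multiple = compound_growth(0.10, weeks)
    print(f"{weeks:>2} weeks at 10% per week -> {multiple:.1f}x")
```

At that pace a metric grows by roughly half in a month and more than triples in a quarter. If it could somehow be sustained for a full year, it would multiply by more than 140.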

How good is AI coding?

If Y Combinator startups are “vibe coding” in the real world, then it certainly appears as if AI has reached the point where it is a legitimate programming tool. But what objective measures are available?

Measuring success

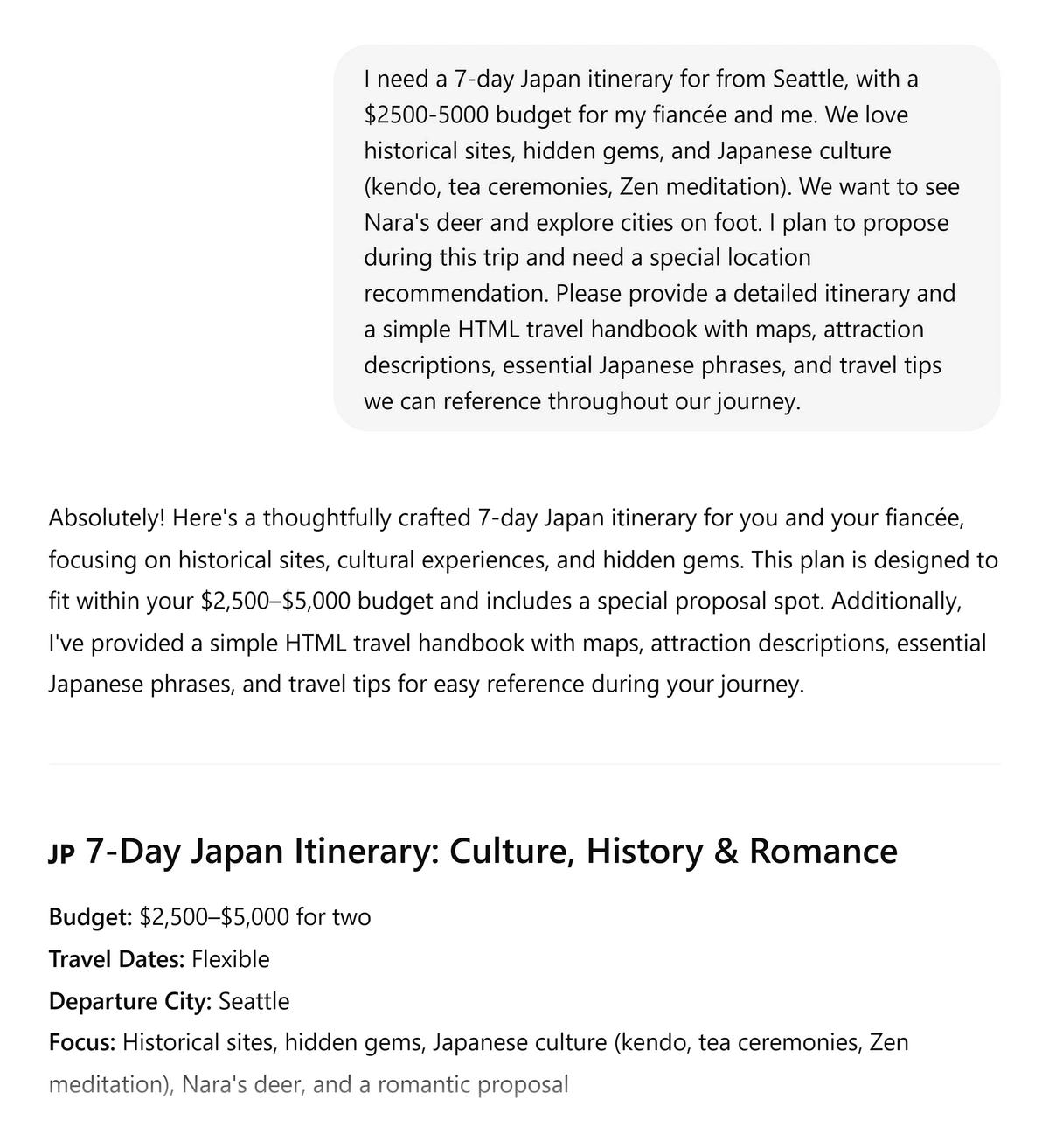

AI models are constantly being subjected to “benchmarks”.

AI benchmarks are standardized tests that measure how well different AI models complete coding or problem-solving tasks.

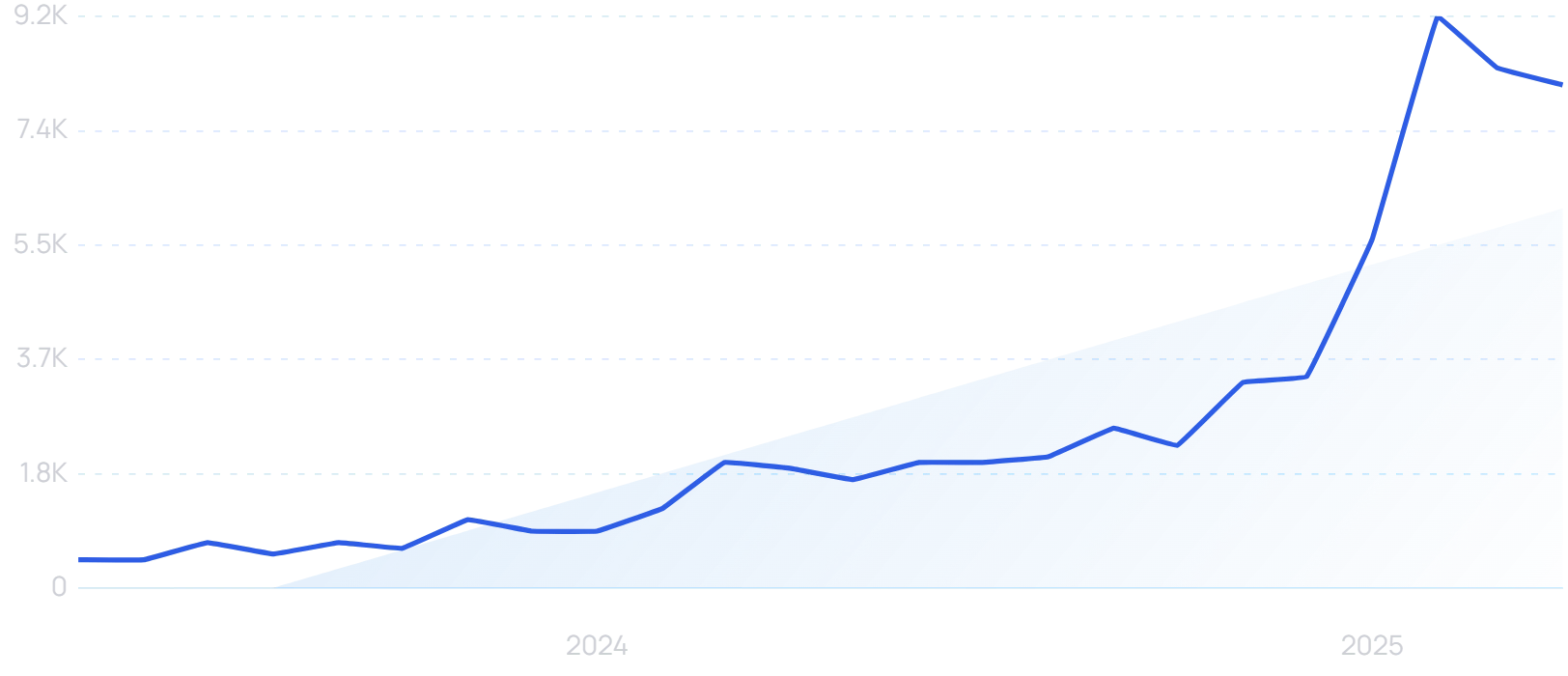

“AI benchmarks” searches have risen by 1660% in the last 2 years.

Benchmarks are helpful for selecting the best LLMs to use within a particular context (such as coding). But they also theoretically give an idea of the general state of AI sophistication within that specific context.

For example, a coding benchmark test might contain 100 programming problems. LLMs are not being measured directly against one another, but against how many of those problems they can solve — so as the technology advances, that will be reflected in higher scores across the board.
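The scoring idea can be sketched in a few lines of Python. Everything here is a toy stand-in: the tasks, the two “models”, and the `score_benchmark` helper are hypothetical, and real coding benchmarks run generated code against test suites rather than comparing strings.

```python
from typing import Callable


def score_benchmark(tasks: dict[str, str], model: Callable[[str], str]) -> float:
    """Return the percentage of tasks for which the model's answer is correct."""
    solved = sum(1 for prompt, expected in tasks.items() if model(prompt) == expected)
    return 100 * solved / len(tasks)


def make_model(known_answers: dict[str, str]) -> Callable[[str], str]:
    """Build a fake 'model' that only knows the answers it was given."""
    return lambda prompt: known_answers.get(prompt, "")


# A hypothetical four-task benchmark (prompt -> expected answer).
tasks = {"2 + 2": "4", "reverse 'abc'": "cba", "len('hello')": "5", "10 // 3": "3"}

older_model = make_model({"2 + 2": "4"})
newer_model = make_model({"2 + 2": "4", "reverse 'abc'": "cba", "len('hello')": "5"})

print(score_benchmark(tasks, older_model))  # 25.0
print(score_benchmark(tasks, newer_model))  # 75.0
```

Each model is graded against the fixed task set rather than against the other model, which is why scores rise across the board as the technology improves.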

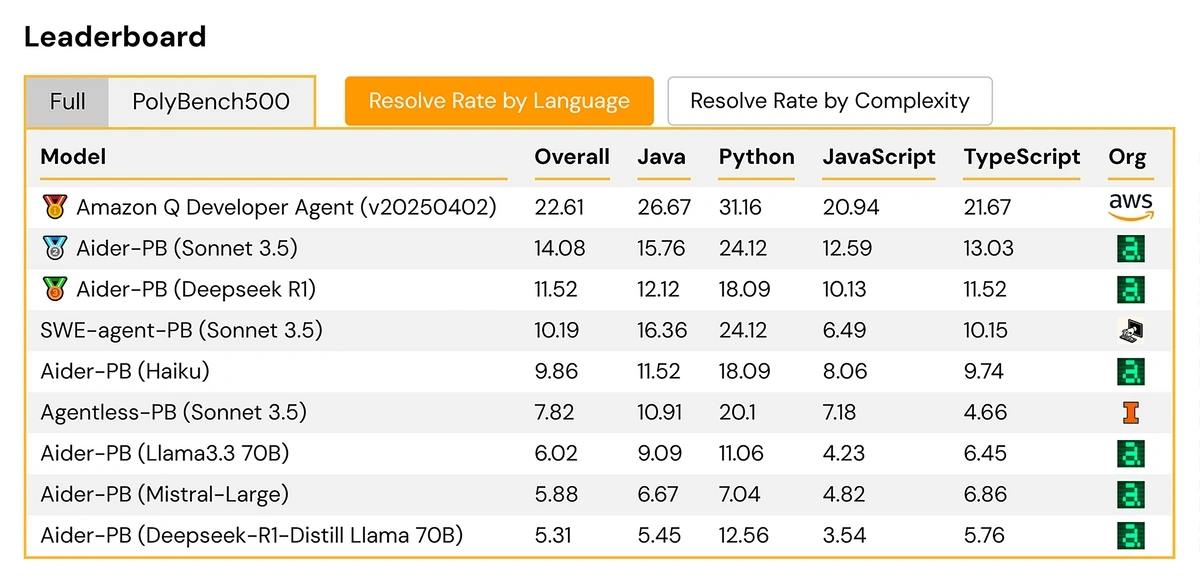

Back in 2023, the best AI models were passing around 5% of the challenges posed by SWE-bench, a popular coding benchmark. Today, that figure is above 60%.

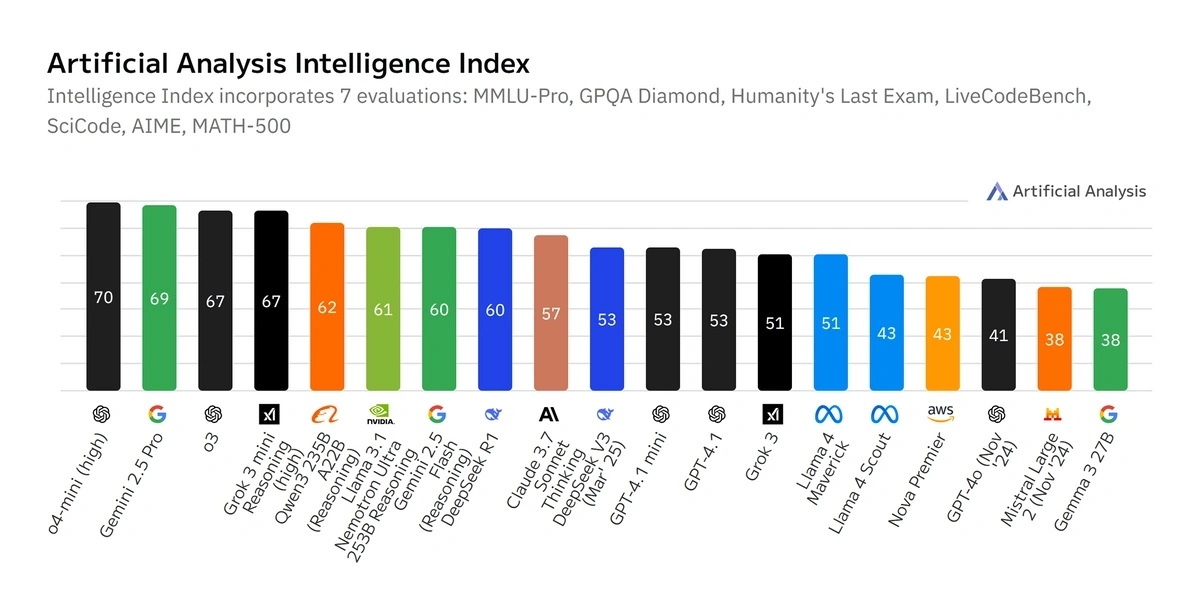

Artificial Analysis operates a variety of its own AI benchmarks. The top-scoring LLMs on its Math Index score 96, whereas the top models on the Coding Index score significantly lower.

Intuitively, this should imply that AI performs better on math problems than coding problems. However, benchmarking raises a new problem: how do you benchmark the benchmarks?

The top AI model on the Artificial Analysis Coding Index scores 63. But on SWE-PolyBench, a benchmark recently devised by Amazon, the top model has a resolve rate of just 22.61%.

Naturally, the benchmark scores are entirely dependent on the difficulty of the tasks being set. And while any good benchmark will try to include a variety of problem types within its set of tests, it is inevitable that certain challenges will favor certain LLMs.

But as long as you’re aware of the tendency for moving goalposts, benchmarks are really useful for showing the progress in AI coding — and they paint the picture of a field advancing rapidly.

A layman’s test

I have essentially zero coding skills. I did take a high school computing class as a break from my many essay subjects, but even without any AI assistance, I relied heavily on being able to look over the shoulder of my friend Sam.

So what can vibe coding do for me, someone who has long since forgotten how to build even the most basic application? Benchmarks provide a far more scientific model, but it’s useful to see AI coding in action.

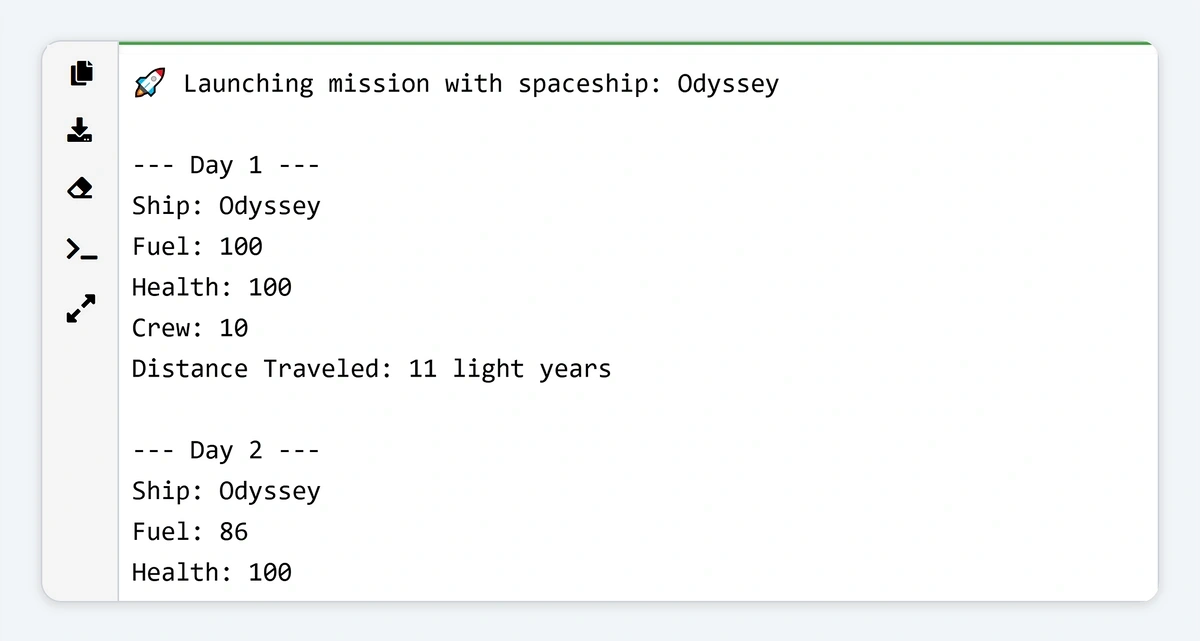

I asked ChatGPT to give me the code to simulate a space exploration scenario. It didn’t need any more details than that.

I ran the code it returned in Python. While the result won’t be winning any awards, it produced a functioning text-based space adventure.

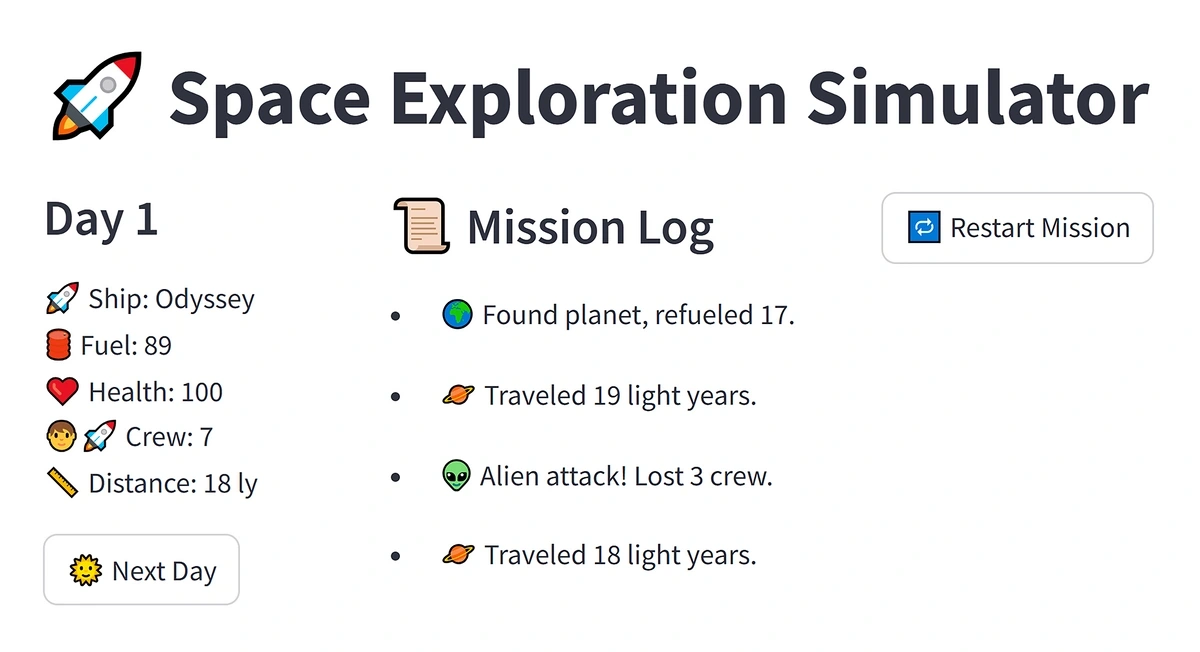
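For a sense of scale, a text-based space adventure can be very small. The sketch below is not the code ChatGPT produced. It is a hypothetical example of the genre, with an invented `run_mission` function that takes scripted commands in place of typed input so that it runs the same way every time.

```python
def run_mission(choices, fuel=3):
    """Play a scripted text adventure and return the lines it would print.

    `choices` is a sequence of "explore" or "refuel" commands, standing in
    for what a player would type at a prompt.
    """
    log = [f"Launch! Fuel: {fuel}"]
    discoveries = 0
    for choice in choices:
        if choice == "explore":
            if fuel == 0:
                log.append("Out of fuel. The ship drifts. Mission over.")
                break
            fuel -= 1
            discoveries += 1
            log.append(f"You chart a new planet. Fuel: {fuel}")
        elif choice == "refuel":
            fuel += 2
            log.append(f"You dock at a station. Fuel: {fuel}")
        else:
            log.append(f"Unknown command: {choice}")
    log.append(f"Planets discovered: {discoveries}")
    return log


for line in run_mission(["explore", "explore", "refuel", "explore"]):
    print(line)
```

Swapping the scripted list for a loop around `input()` would turn this into an interactive game.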

ChatGPT then asked if I would like an upgraded version of the simulation, before asking if I wanted to incorporate graphic elements.

I kept accepting its suggestions. Each time, the code it provided worked without issue.

So it’s certainly possible to create a program of some description without requiring any coding knowledge whatsoever. Anyone can start vibe coding.

AI code debugging

More complex code is still likely to require some human expertise. For one thing, programmers can give far better prompts than I can — and there’s also that 5% of code that even the most AI-friendly Y Combinator startups still handle themselves.

Crucially, there’s also the issue of debugging code, one of the most important parts of a developer’s role. This remains a relative weakness of AI, but progress is being made.

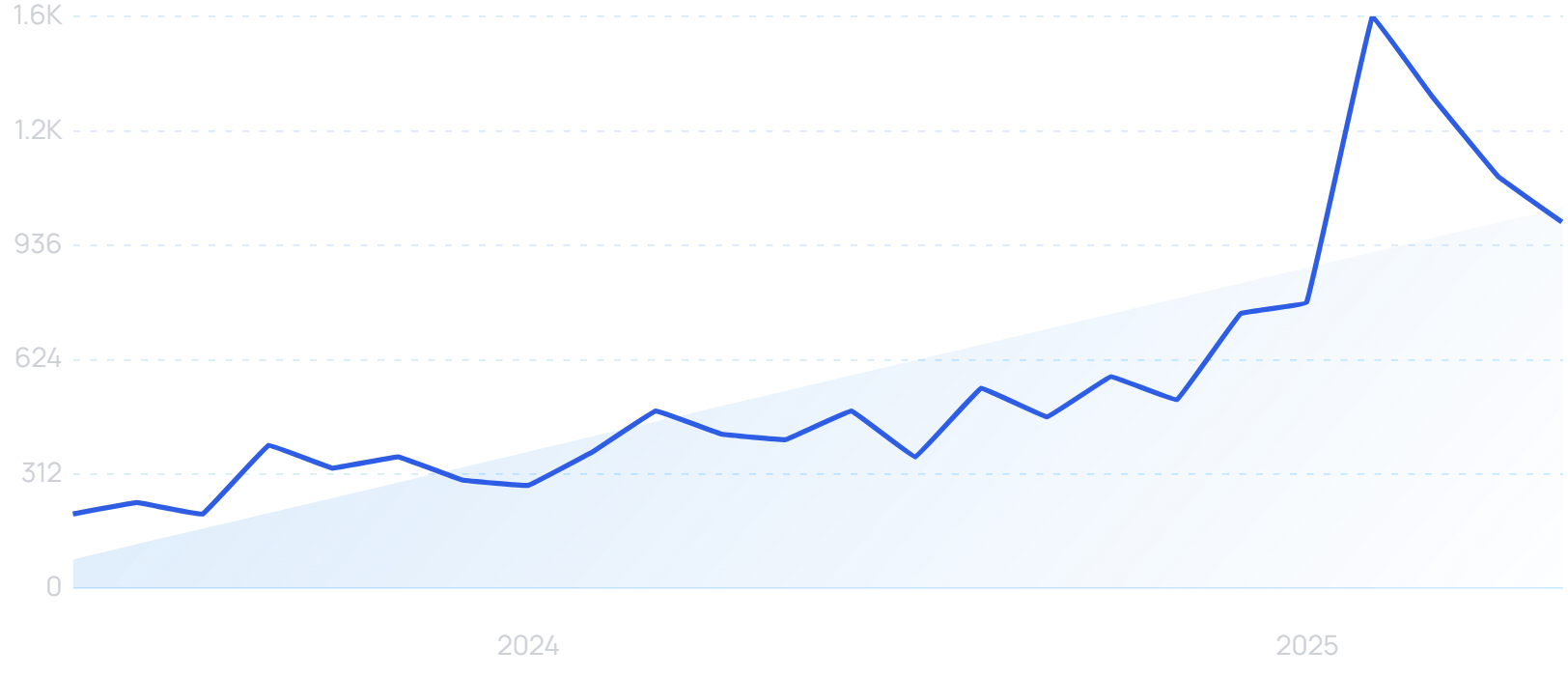

“AI debugging” searches are up 248% in the last 2 years.

If something had gone wrong with my AI-generated space exploration scenario, I simply wouldn’t have had the skills to fix the code. I would have been relying on the AI to fix its own mistakes.

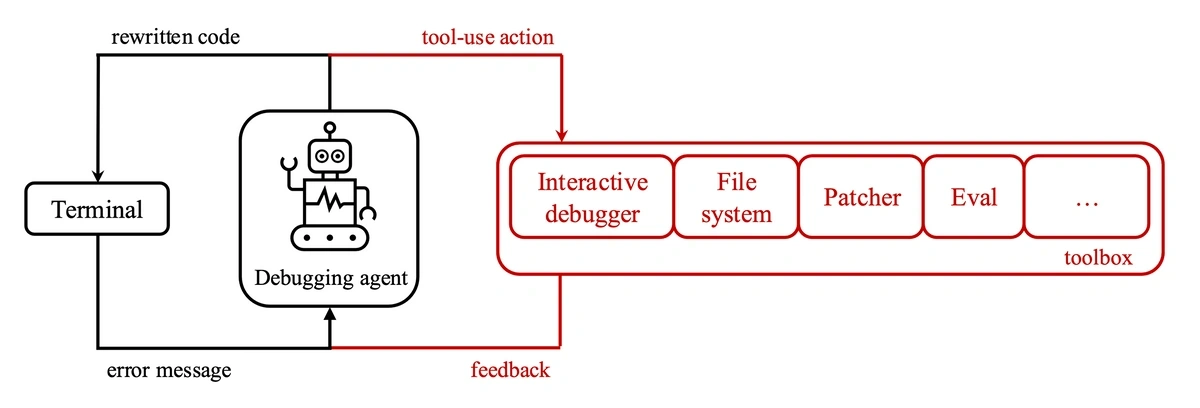

Microsoft recently released Debug-gym, with the stated goal of teaching AI how to debug code like programmers.

It moves AI assistants away from relying on error messages alone when proposing fixes.

The section in red represents the extra processes introduced by Debug-gym. Early results have been promising.

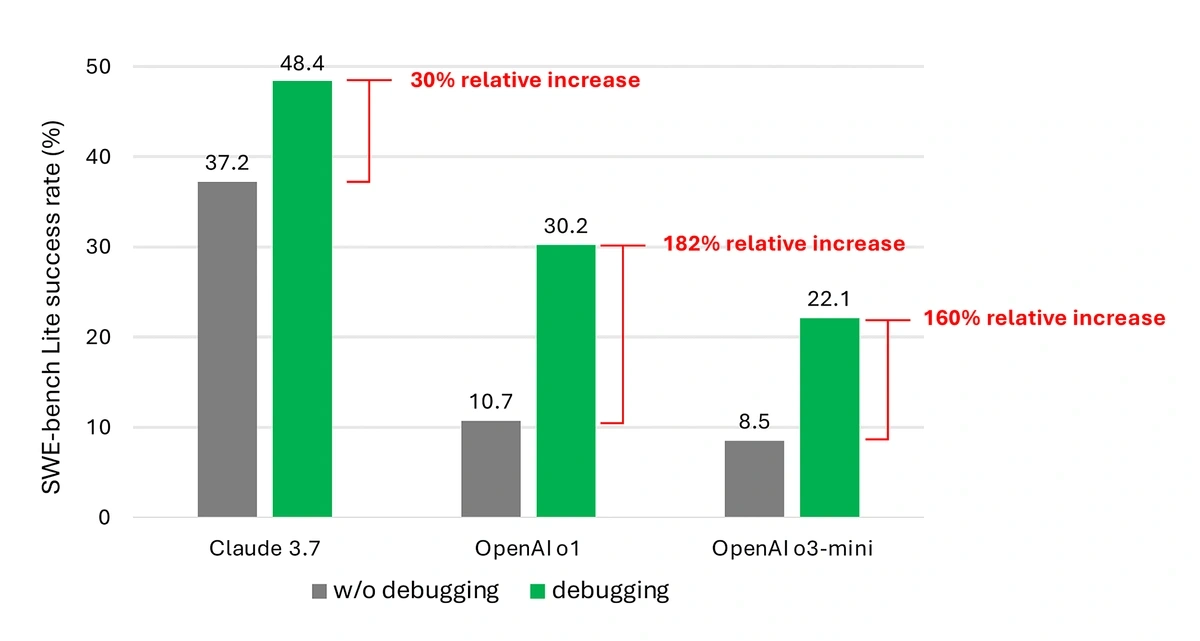

Using the SWE-bench Lite benchmark, Microsoft tested some major LLMs with and without Debug-gym. All saw major improvements (although even the higher scores still don’t necessarily suggest AI debugging is ready to perform at enterprise level).
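The gap between reading an error message and inspecting the running program can be shown with a generic Python sketch. This is not Debug-gym’s API. The `average_order_value` and `debug_report` functions are hypothetical, and the example only illustrates why program state gives a debugger, human or AI, more to work with than the error text does.

```python
def average_order_value(orders):
    """Buggy on purpose: fails when the list of orders is empty."""
    total = sum(order["amount"] for order in orders)
    return total / len(orders)


def debug_report(func, *args):
    """Run func; on failure, return the error plus the locals of the failing frame."""
    try:
        func(*args)
    except Exception as exc:
        tb = exc.__traceback__
        while tb.tb_next is not None:  # walk down to the innermost frame
            tb = tb.tb_next
        return {
            "error": repr(exc),
            "function": tb.tb_frame.f_code.co_name,
            "locals": dict(tb.tb_frame.f_locals),
        }
    return None


report = debug_report(average_order_value, [])
print(report["error"])     # the message alone: a division by zero
print(report["function"])  # where it happened
print(report["locals"])    # the state that explains why: an empty `orders` list
```

The error message only says that a division by zero occurred. The captured locals show that `orders` was empty, which points directly at the missing guard.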

Meanwhile, one of the consequences of vibe coding is that there is more code than ever before. That leaves more scope for errors, and more need for debugging tools.

Lightrun aims to tackle this challenge with its code observability platform.

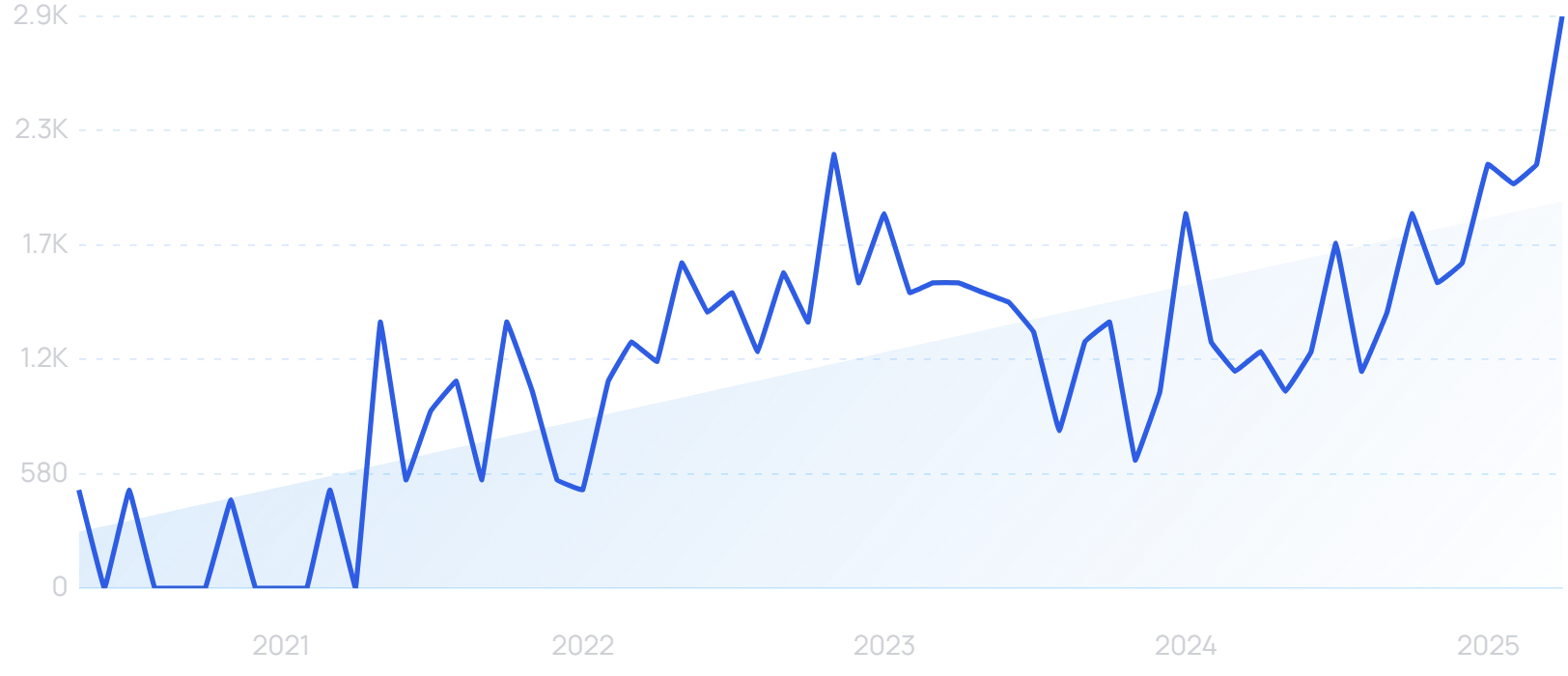

“Lightrun” searches are up 480% in the last 5 years.

Its software makes it easier for developers to spot and fix potential issues in code, regardless of whether it was generated by a human or AI.

Lightrun claims that its platform delivers a 763% ROI over three years, and that developer productivity can improve by as much as 20%.

It recently raised a $70 million Series B round. It counts Microsoft and Salesforce among its clients.

AI agents

We have clearly arrived at a point where AI is a very useful tool to assist humans. Whether you’re writing prose or code, you can get legitimately helpful inspiration and pointers from LLMs.

But vibe coding is one of the AI use cases that sails close to a significant question: can we trust AI to complete entire tasks for us, without supervision?

AI agents are designed to do just that, effectively acting as personal assistants to do things like book transport or order food.

“AI agent” searches are up 1113% in the last 2 years.

AI tools do now generally have the functionality to search the web, mitigating the issue of being trained on out-of-date information. “Computer use” modes can hand over mouse and keyboard access to AI as well.

Even so, the success rate of these agents is relatively patchy. When trusting AI with things like payment details, the fact that “hallucinations” remain a risk is distinctly alarming.

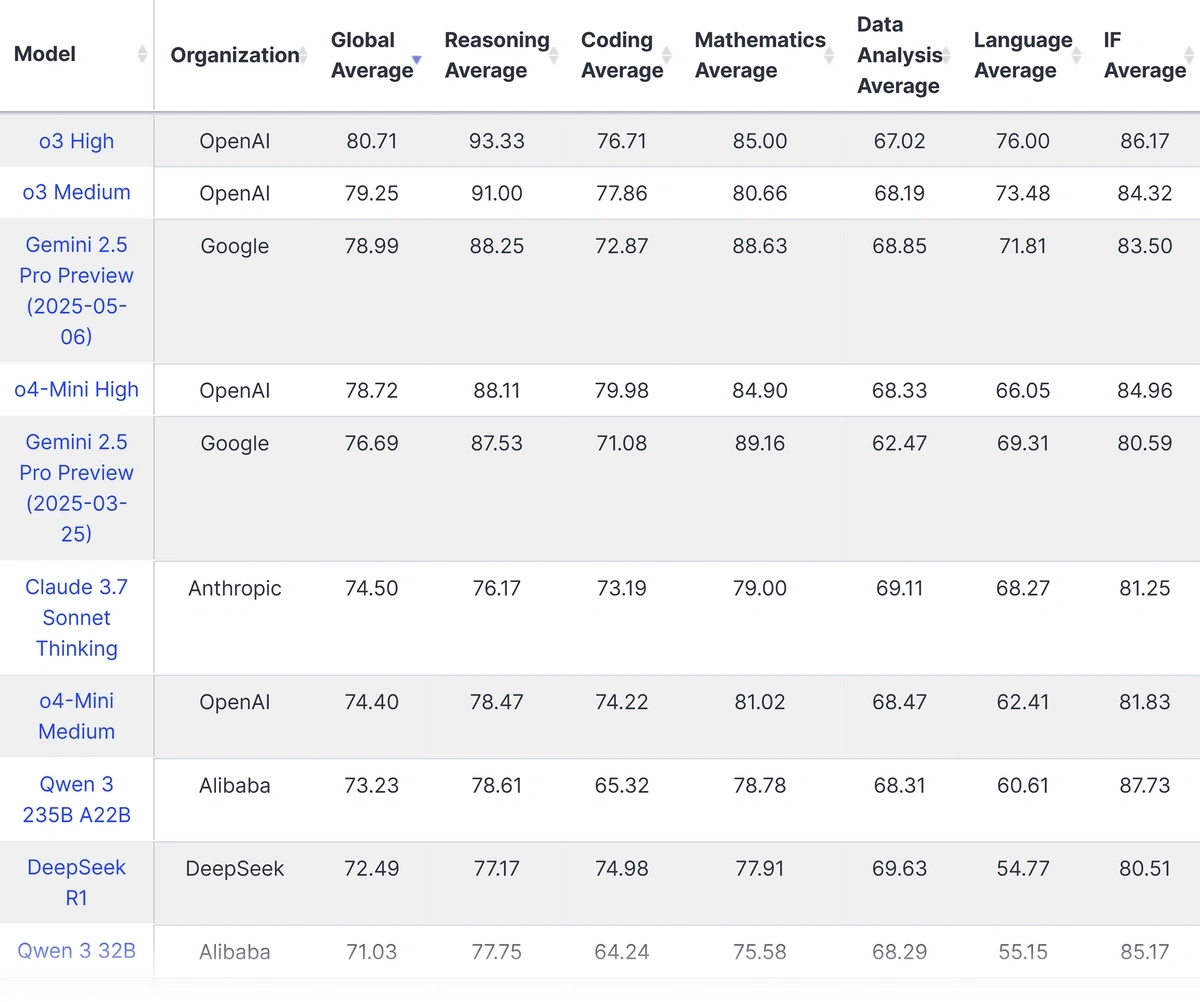

Manus is one of the most recent attempts to create a functioning “general AI agent”.

“Manus” had a sharp search spike when it launched its early preview. Interest is now rising steadily.

It ranks as state-of-the-art on a popular benchmark for general AI assistants. Having said that, it’s hard to pinpoint exactly what it does that differs from something like ChatGPT.

Co-founder Yichao Ji says that Manus “bridges the gap between conception and execution”. But most of the examples it provides, like creating an itinerary for a trip to Japan or constructing an interactive course about a scientific principle, still primarily present information rather than acting on it in any real-world way.

In the trip to Japan example, I gave ChatGPT the same prompt, and received very comparable results.

And when tested on tangible tasks like booking flights or reserving tables, Manus repeatedly failed.

Fully functioning AI agents are likely somewhere on the horizon. But for now, the technology is best as an assistant to human endeavor, not a replacement.

The best vibe coding tools

Provided you recognize its limitations, vibe coding can be a very useful way of getting AI assistance to start or refine a project. But what are the best tools for the job?

The best vibe coding LLMs

OpenAI’s o4-mini-high comes out on top across multiple coding benchmarks.

In fact, OpenAI models occupy the top 5 positions in LiveBench’s coding benchmark. The next-best model is DeepSeek R1.

The latest versions of Claude and Grok also score reasonably well.

The best third-party vibe coding tools

Not every vibe coding tool uses its own LLMs. In fact, some of the best tools in the industry take advantage of a variety of pre-existing models.

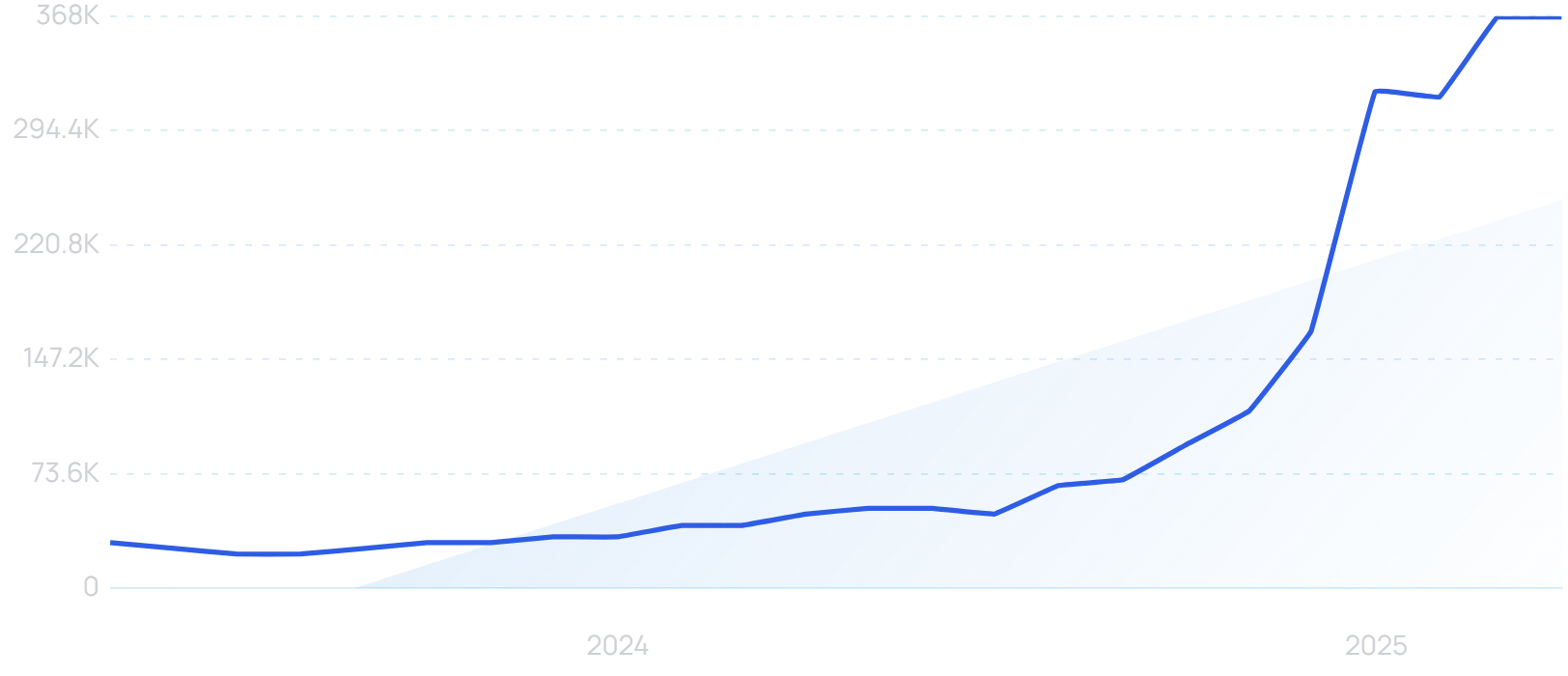

Cursor is an integrated development environment that provides auto-complete suggestions, smart rewrites, and AI advice while you’re coding.

“Cursor” searches are up 163% in 2 years.

Users can manually select from various basic and premium models. Different plans provide different tiers of access.

Cursor hit $100 million ARR in January. By March, it had reached $200 million.

GitHub Copilot recently launched its new Workspace platform, a developer environment that natively supports AI assistance.

It supports plain-language prompts throughout the code-building process, limiting the amount of technical expertise required. GitHub projects a future where it has 1 billion developers on its platform thanks to the reduced barriers to entry.

Vibe coding is the new normal

If you define it broadly enough, vibe coding is here to stay.

When building something, it’s still a good idea to have a firm grasp of your code — in fact, it’s necessary because AI will not always be able to debug errors.

And however much AI continues to advance, there will always be a need for humans who understand what goes on under the hood. A world full of software run on code that nobody can interpret is a recipe for disaster.

But it’s already a no-brainer to use AI as a coding assistant. It can dramatically improve efficiency for programmers, and unlock new possibilities for those who would not previously have been able to program anything at all.

Ultimately, you can draw a similar conclusion on most AI topics: you’d be foolish to grow reliant on it, but just as silly to reject technology that can be such a powerful tool in your favor.

That's why we're seeing the rise of related concepts like "vibe marketing".

Exploding Topics is owned by Semrush. Our mission is to provide accurate data and expert insights on emerging trends. Unless otherwise noted, this page’s content was written by either an employee or a paid contractor of Semrush Inc.

Written By

James is a Journalist at Exploding Topics. After graduating from the University of Oxford with a degree in Law, he completed a...